First publish on public

This commit is contained in:

parent

408385002d

commit

7487cd6f11

399

README.md


@ -4,9 +4,14 @@

|

||||

|

||||

## 📚 从本项目你能学到什么

|

||||

|

||||

- 学会主流的 Java Web 开发技术和框架

|

||||

- 积累一个真实的 Web 项目开发经验

|

||||

- 掌握本项目中涉及的常见面试题的答题策略

|

||||

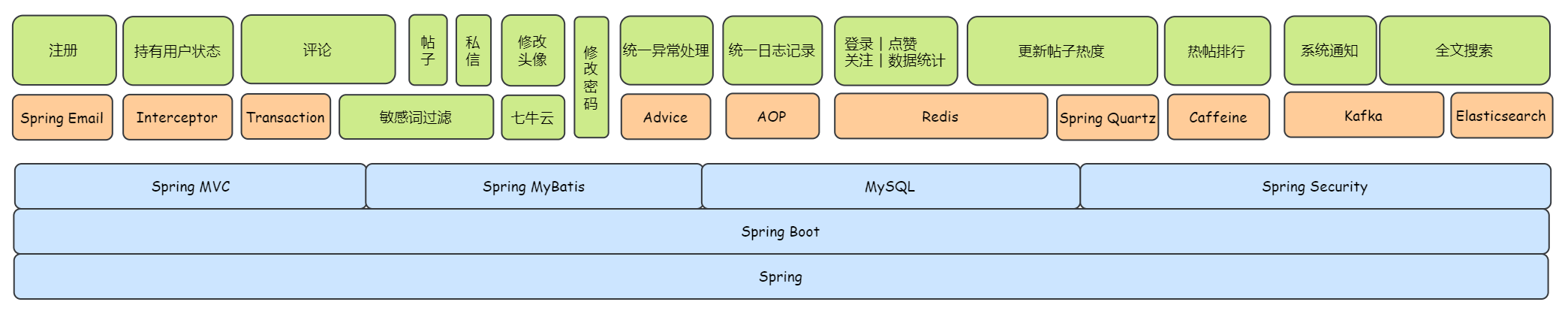

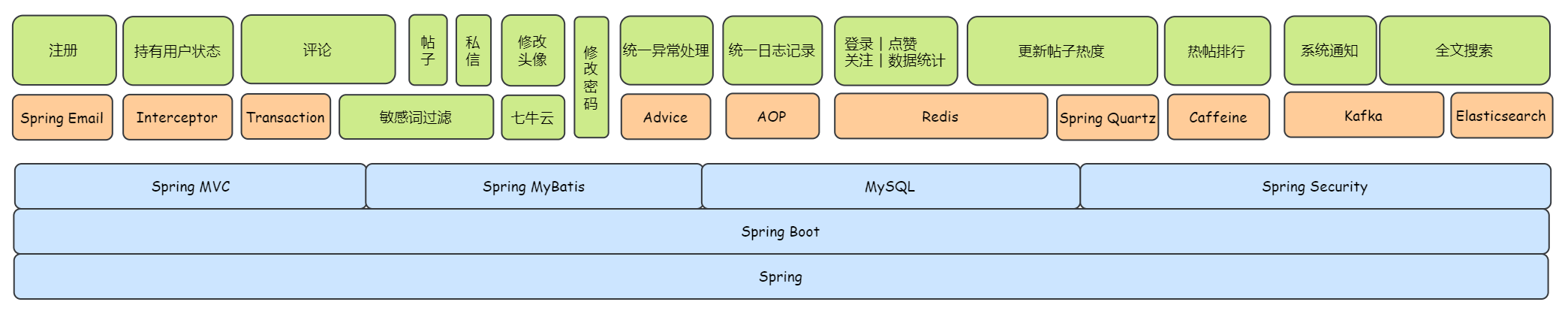

- 学会主流的 Java Web 开发技术和框架(Spring、SpringBoot、Spring MVC、MyBatis、MySQL、Redis、Kafka、Elasticsearch 等)

|

||||

- 了解一个真实的 Web 项目从开发到部署的整个流程(本项目配套有大量图例和详细教程,以帮助小伙伴快速上手)

|

||||

- 掌握本项目中涉及的核心技术点以及常见面试题和解析

|

||||

|

||||

## 🏄 Online demo and documentation

- Online demo: the project is deployed on a Tencent Cloud server, so you can try it directly at [http://1.15.127.74/](http://1.15.127.74/)
- Documentation: generated with Vuepress + Gitee Pages; online address:

## 💻 Core tech stack

@ -17,14 +22,14 @@

|

||||

- Spring MVC

|

||||

- ORM:MyBatis

|

||||

- 数据库:MySQL 5.7

|

||||

- 日志:SLF4J(日志接口) + Logback(日志实现)

|

||||

- 缓存:Redis

|

||||

- 分布式缓存:Redis

|

||||

- 本地缓存:Caffeine

|

||||

- 消息队列:Kafka 2.13-2.7.0

|

||||

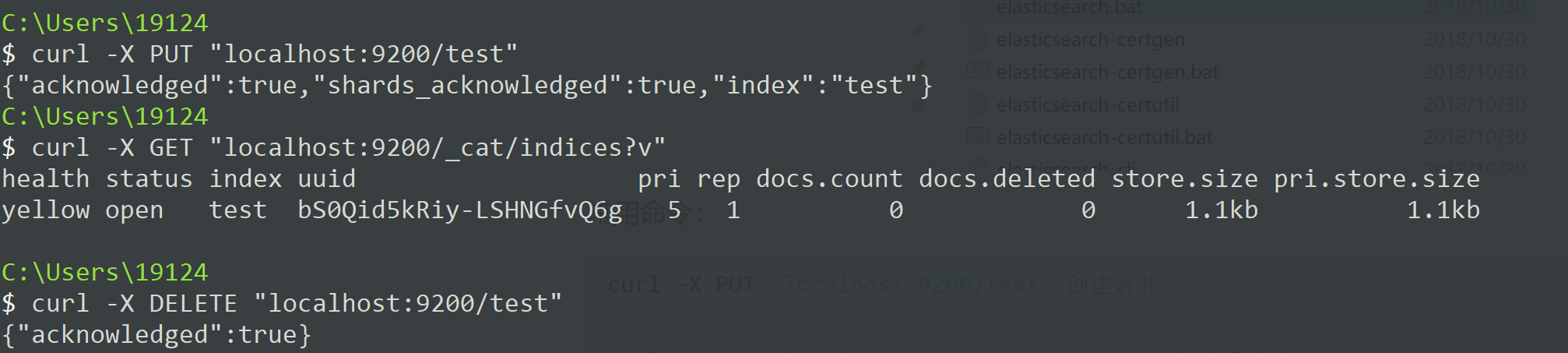

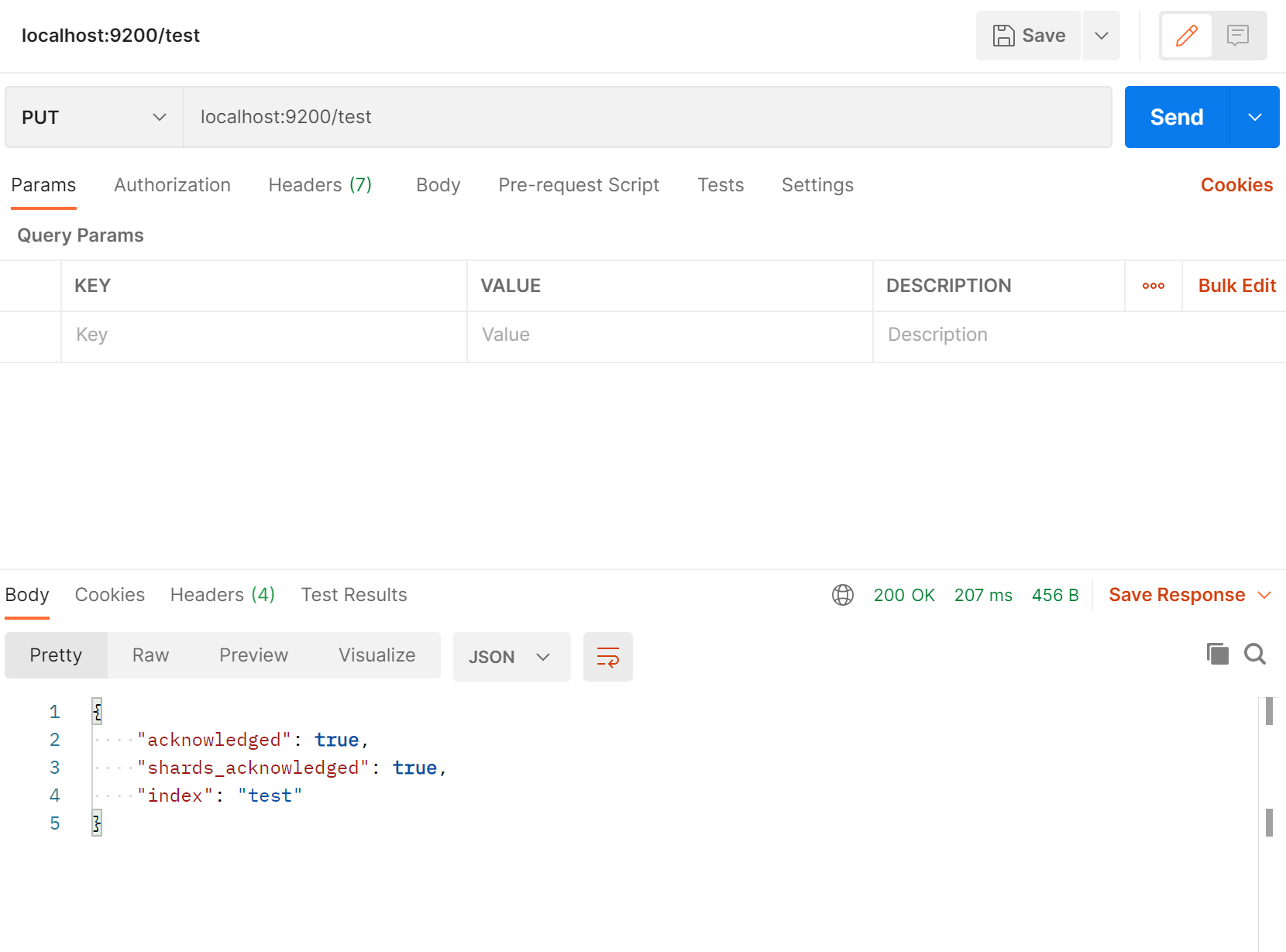

- 搜索引擎:Elasticsearch 6.4.3

|

||||

- 安全:Spring Security

|

||||

- 邮件:Spring Mail

|

||||

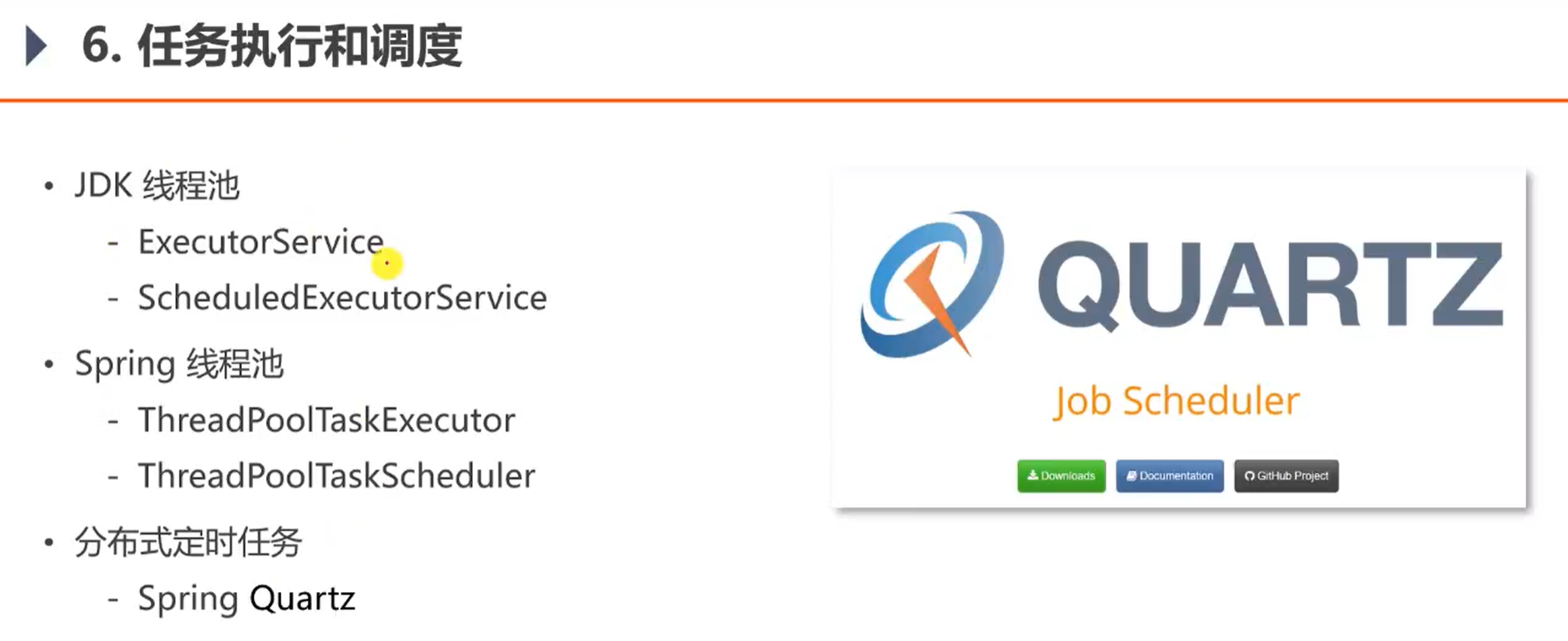

- 分布式定时任务:Spring Quartz

|

||||

- 监控:Spring Actuator

|

||||

- 日志:SLF4J(日志接口) + Logback(日志实现)

|

||||

|

||||

前端:

|

||||

|

||||

@ -38,39 +43,89 @@

|

||||

- 操作系统:Windows 10

|

||||

- 构建工具:Apache Maven

|

||||

- 集成开发工具:Intellij IDEA

|

||||

- 数据库:MySQL 5.7

|

||||

- 应用服务器:Apache Tomcat

|

||||

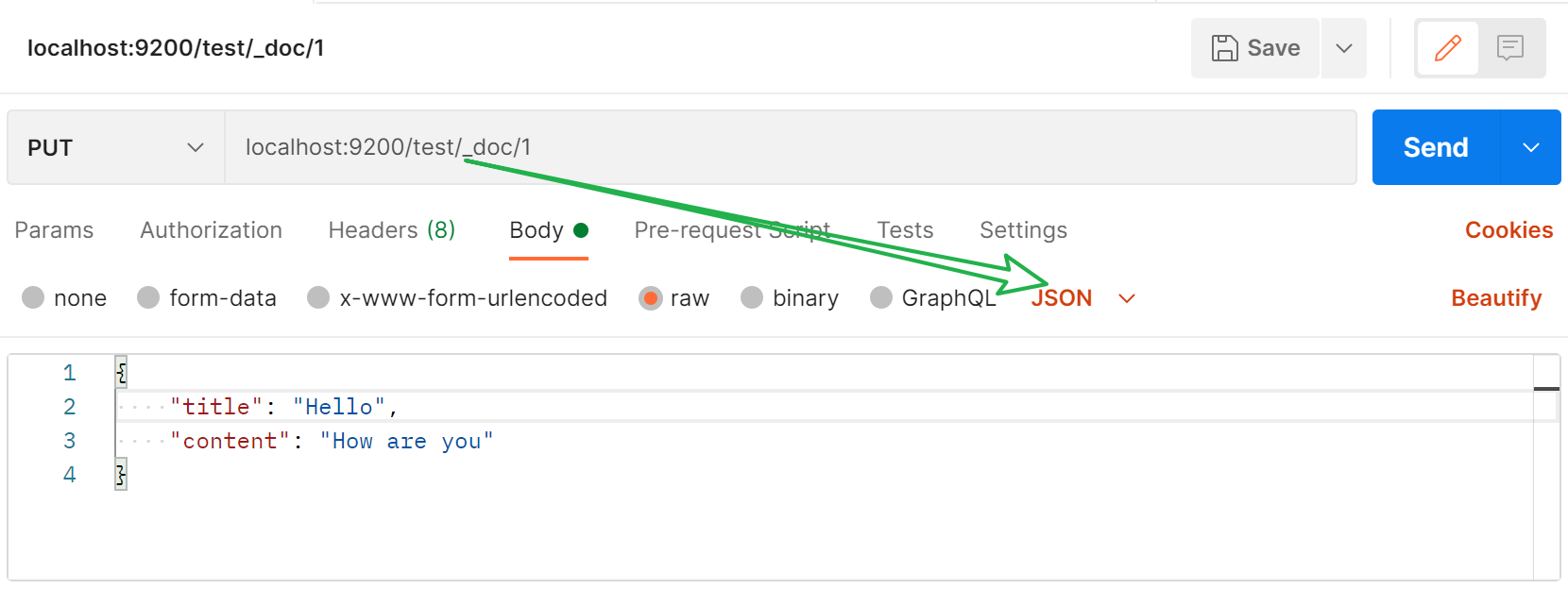

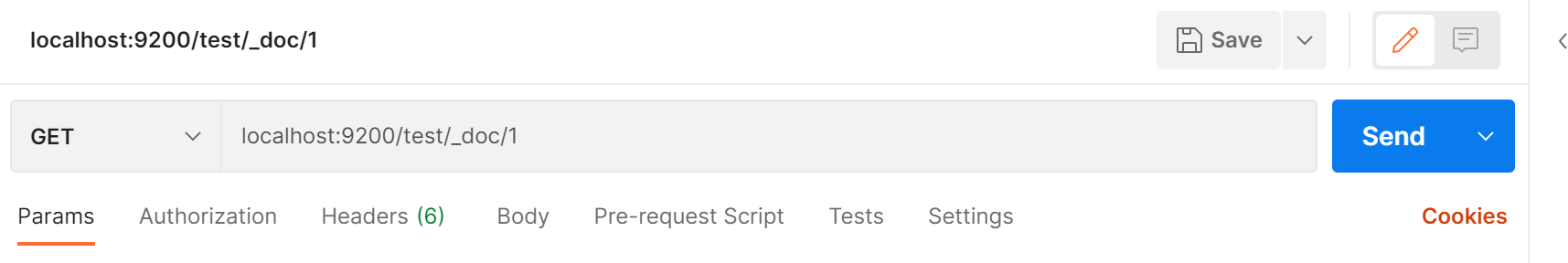

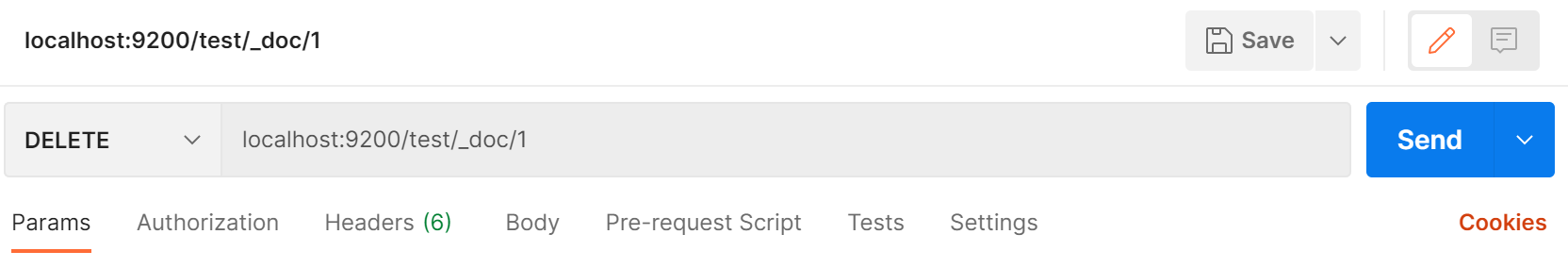

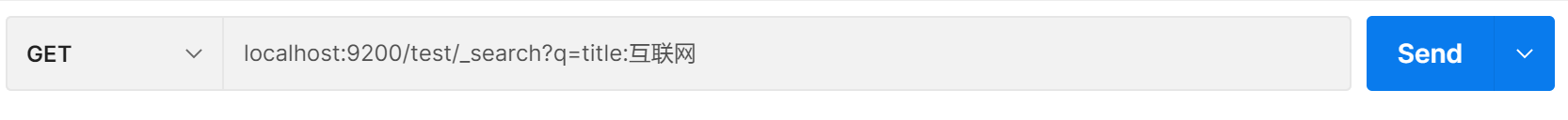

- 接口测试工具:Postman

|

||||

- 压力测试工具:Apache JMeter

|

||||

- 版本控制工具:Git

|

||||

- Java 版本:8

|

||||

|

||||

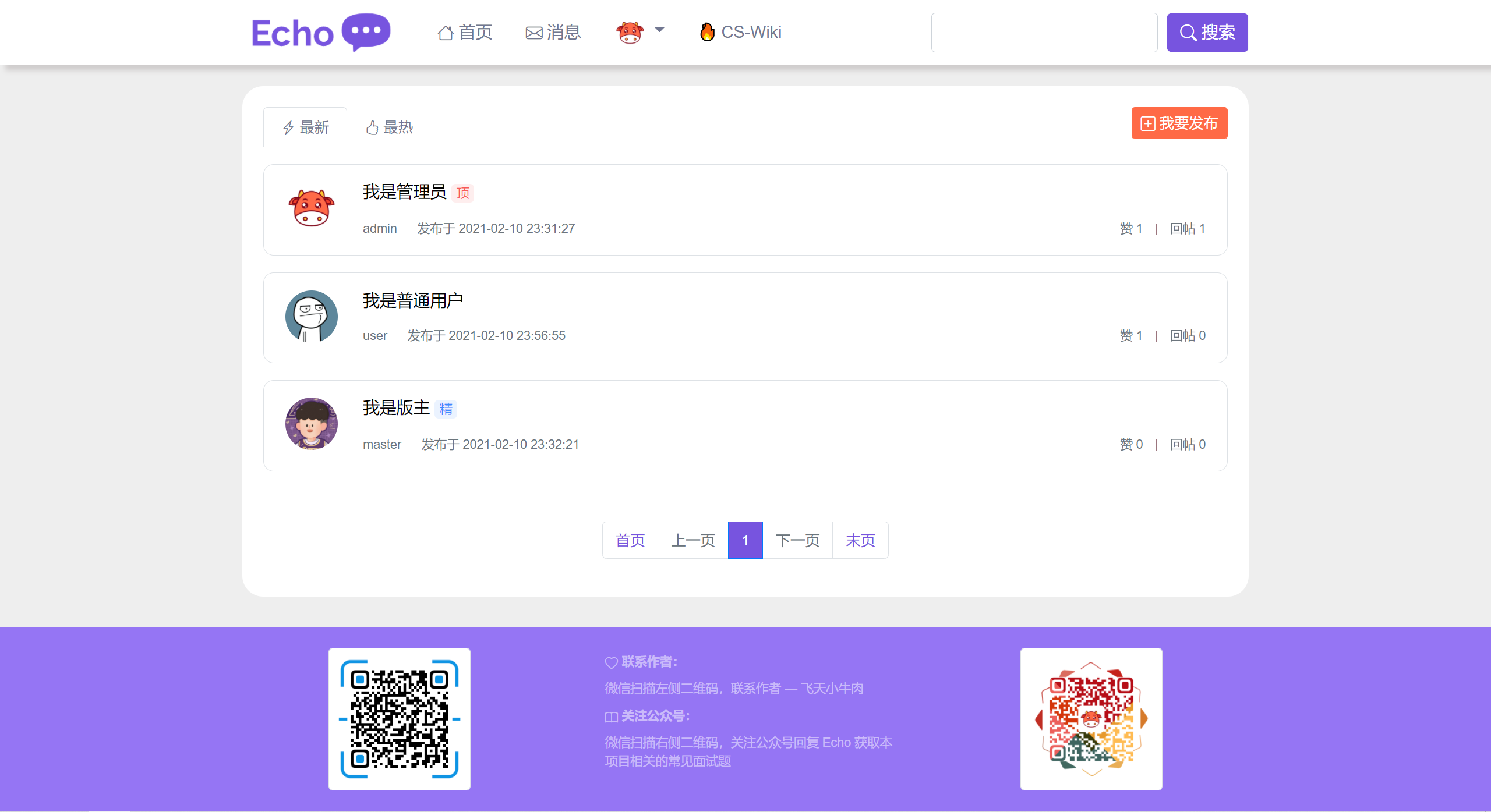

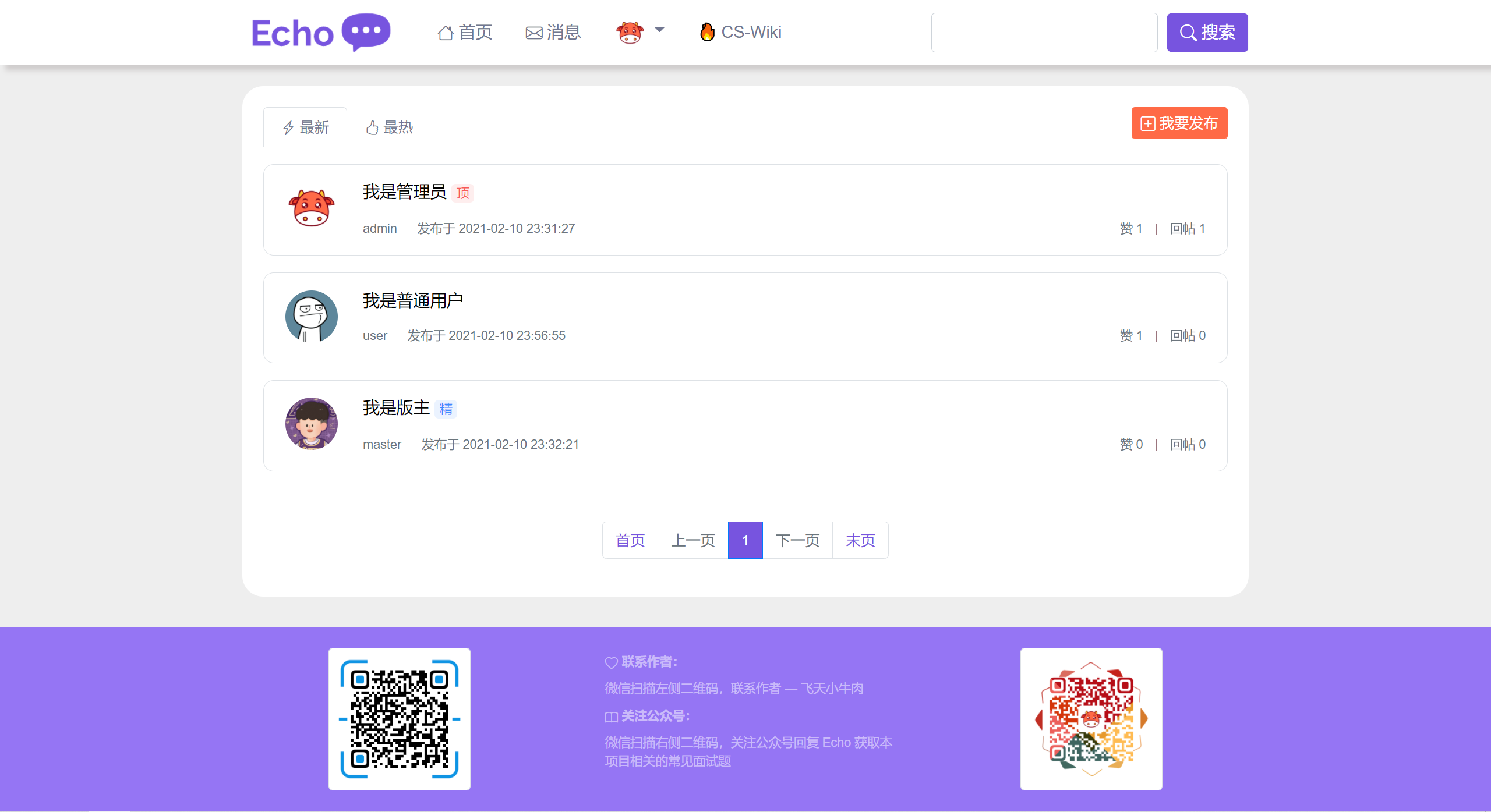

## 🎀 界面展示

|

||||

|

||||

首页:

|

||||

|

||||

|

||||

|

||||

登录页:

|

||||

|

||||

|

||||

|

||||

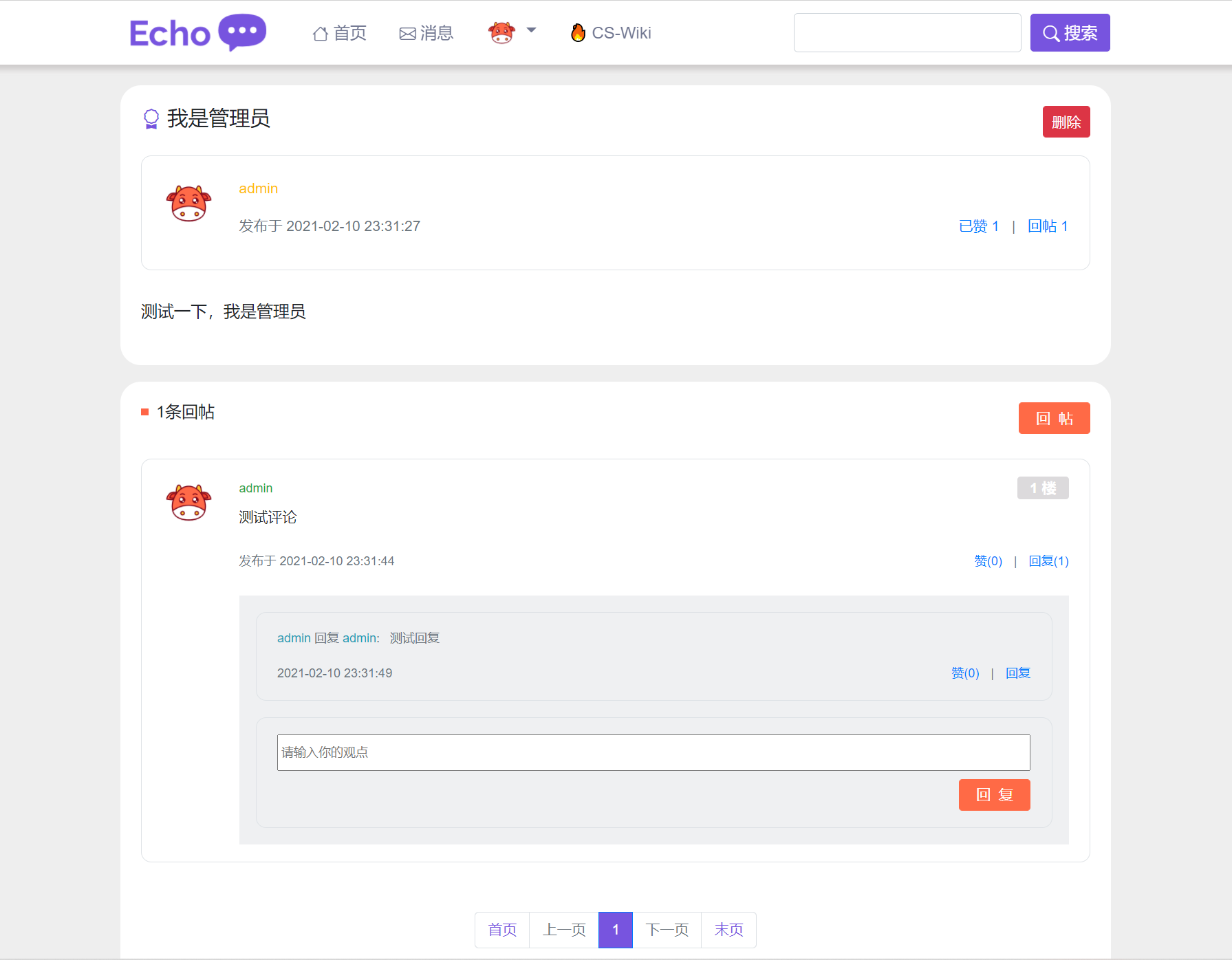

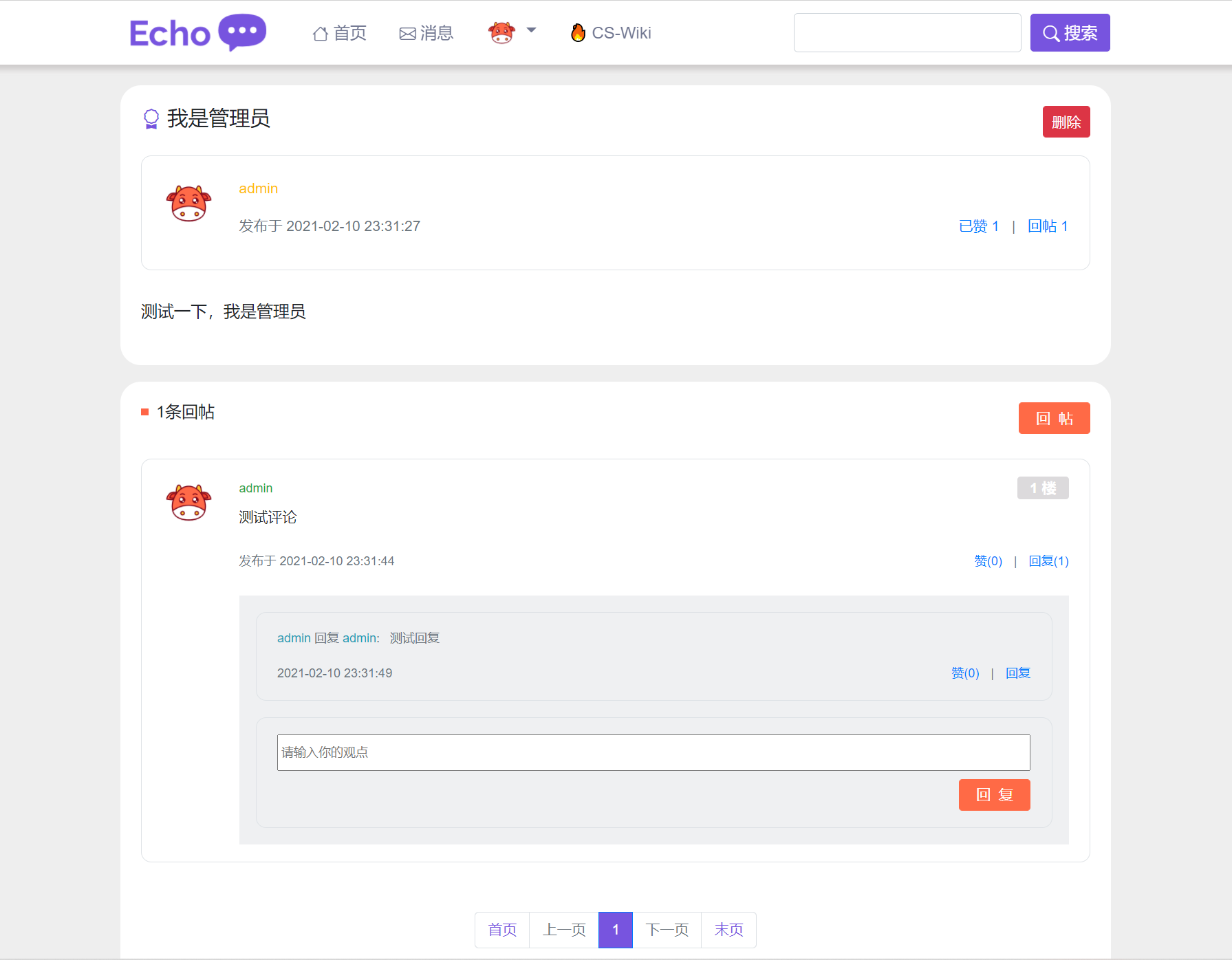

帖子详情页:

|

||||

|

||||

|

||||

|

||||

个人主页:

|

||||

|

||||

|

||||

|

||||

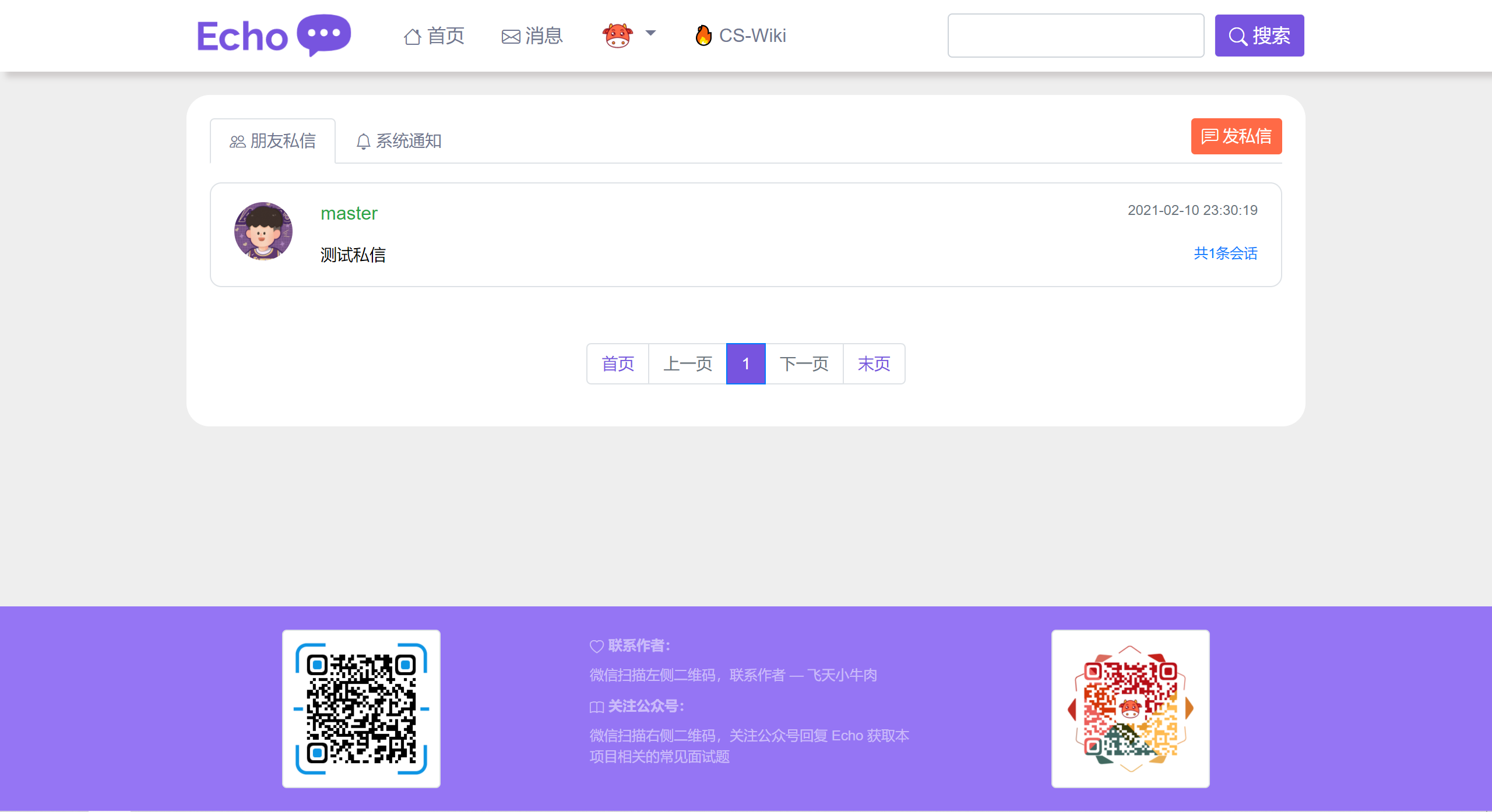

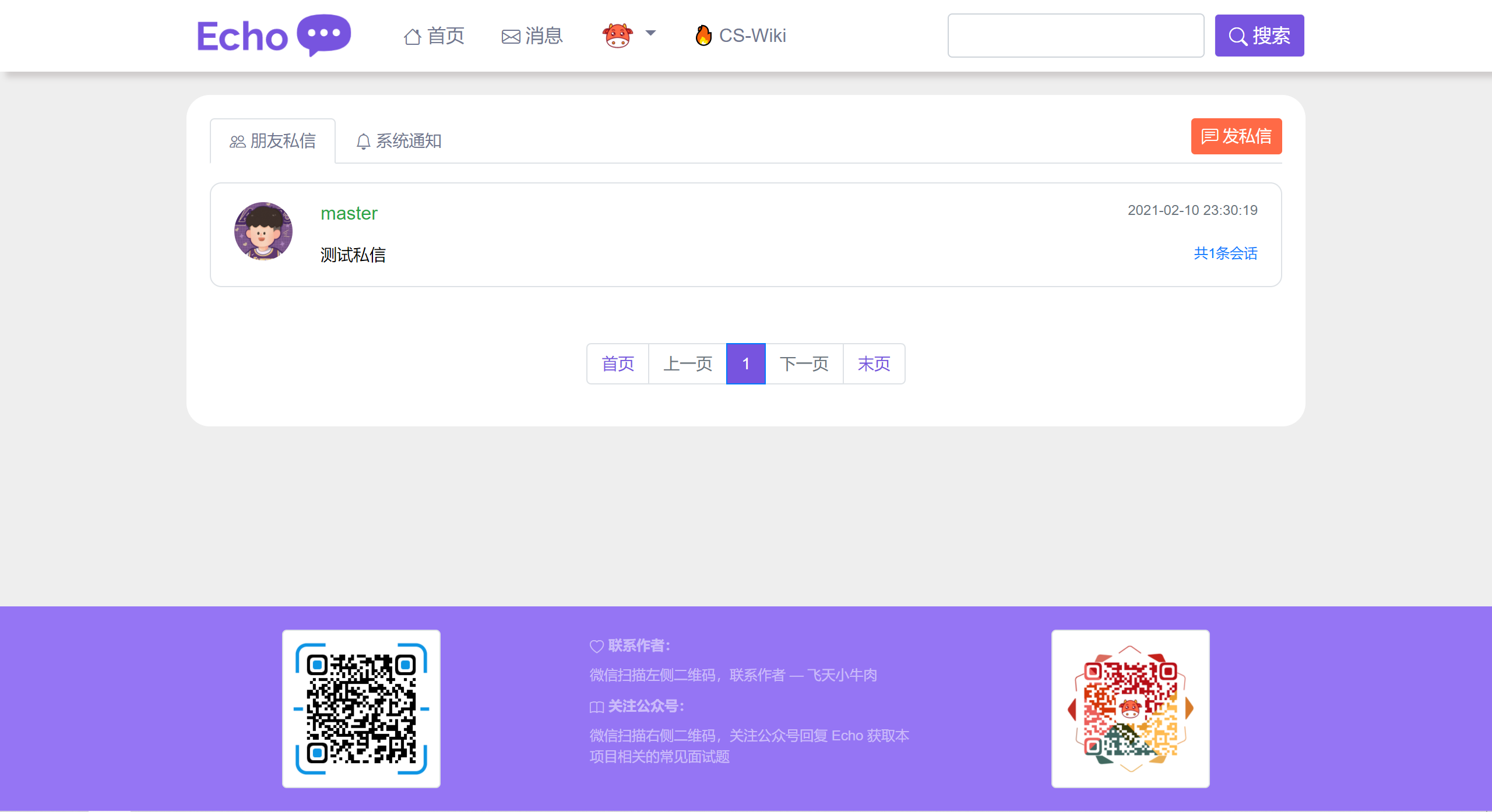

朋友私信页:

|

||||

|

||||

|

||||

|

||||

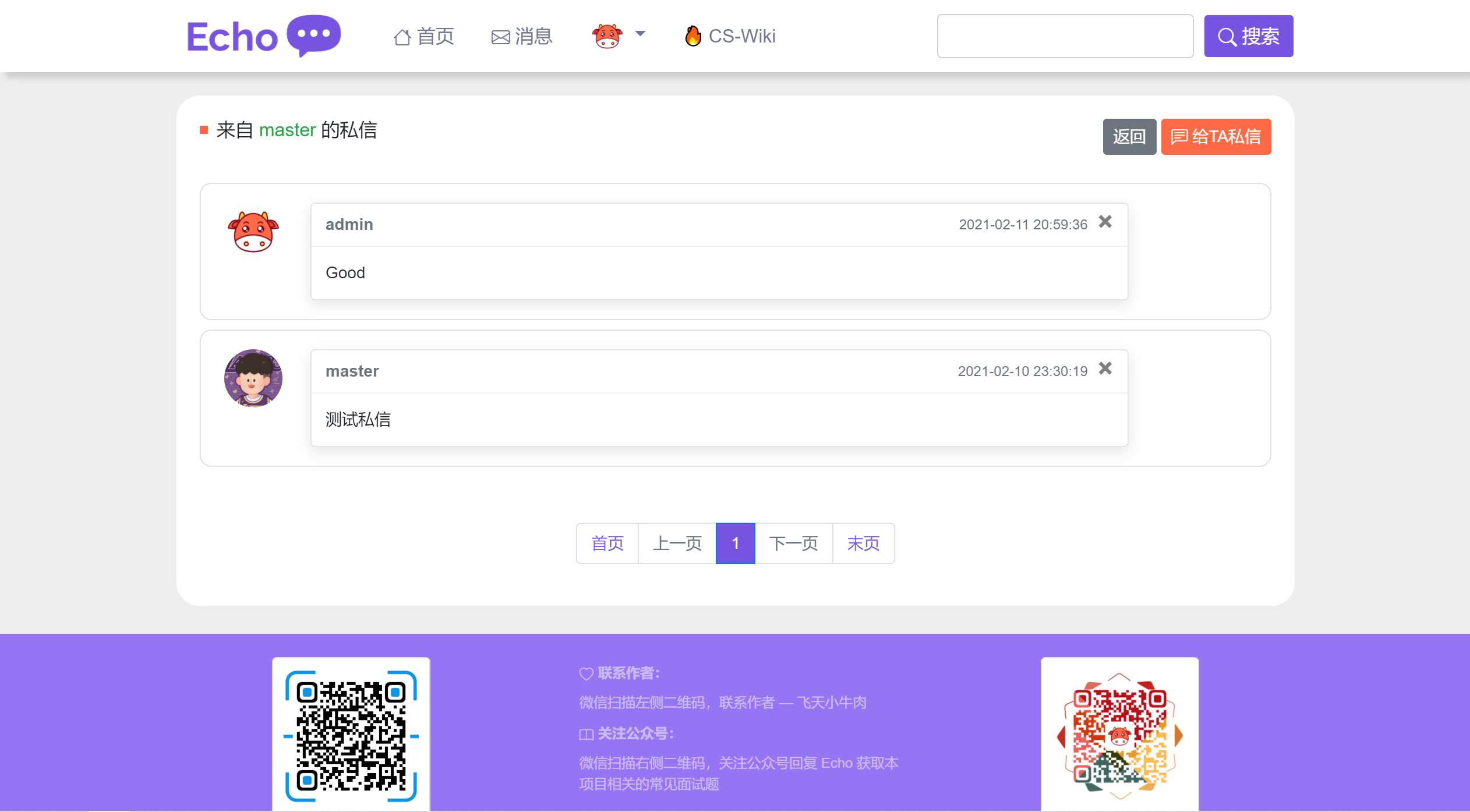

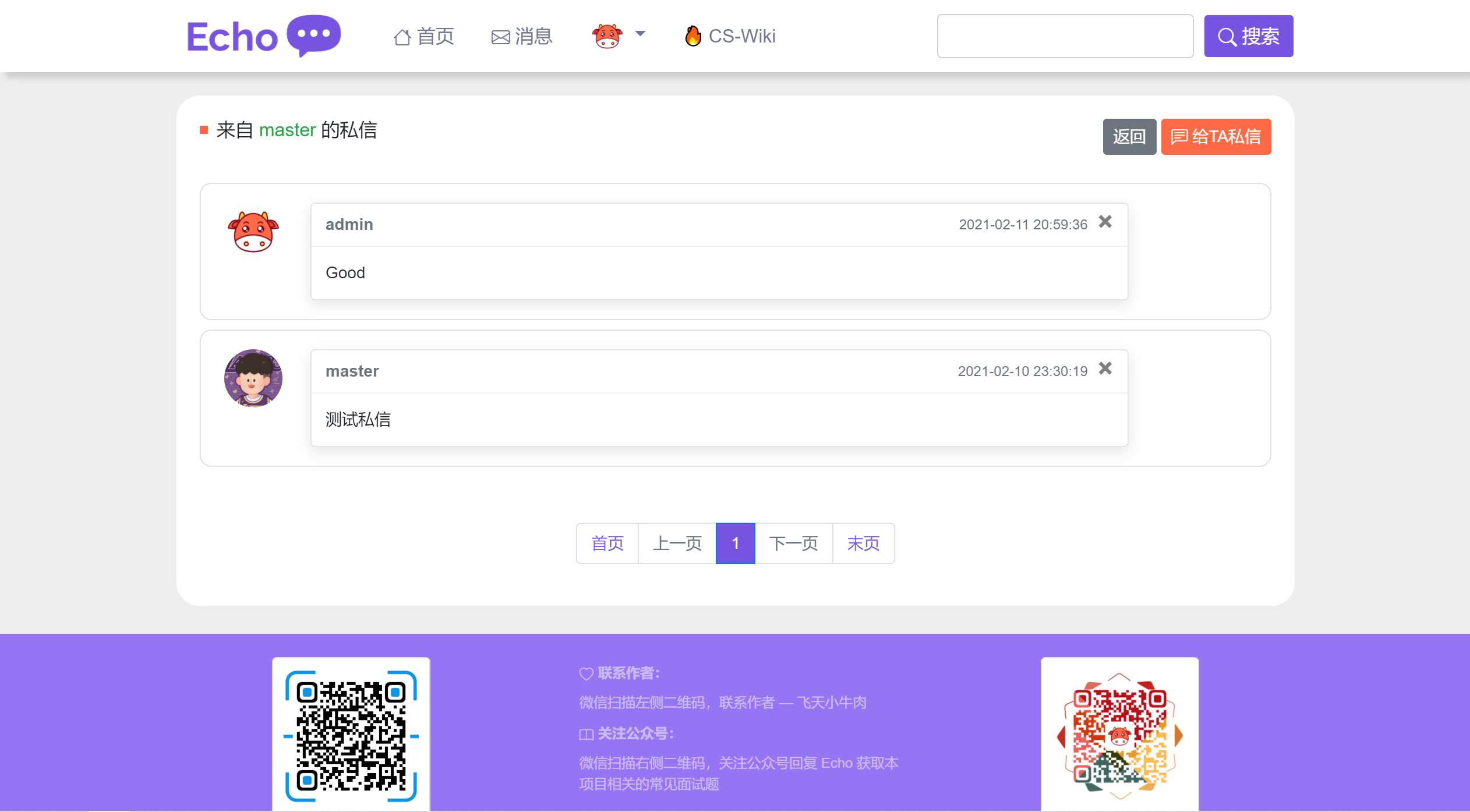

私信详情页:

|

||||

|

||||

|

||||

|

||||

系统通知页:

|

||||

|

||||

|

||||

|

||||

通知详情页:

|

||||

|

||||

|

||||

|

||||

账号设置页:

|

||||

|

||||

|

||||

|

||||

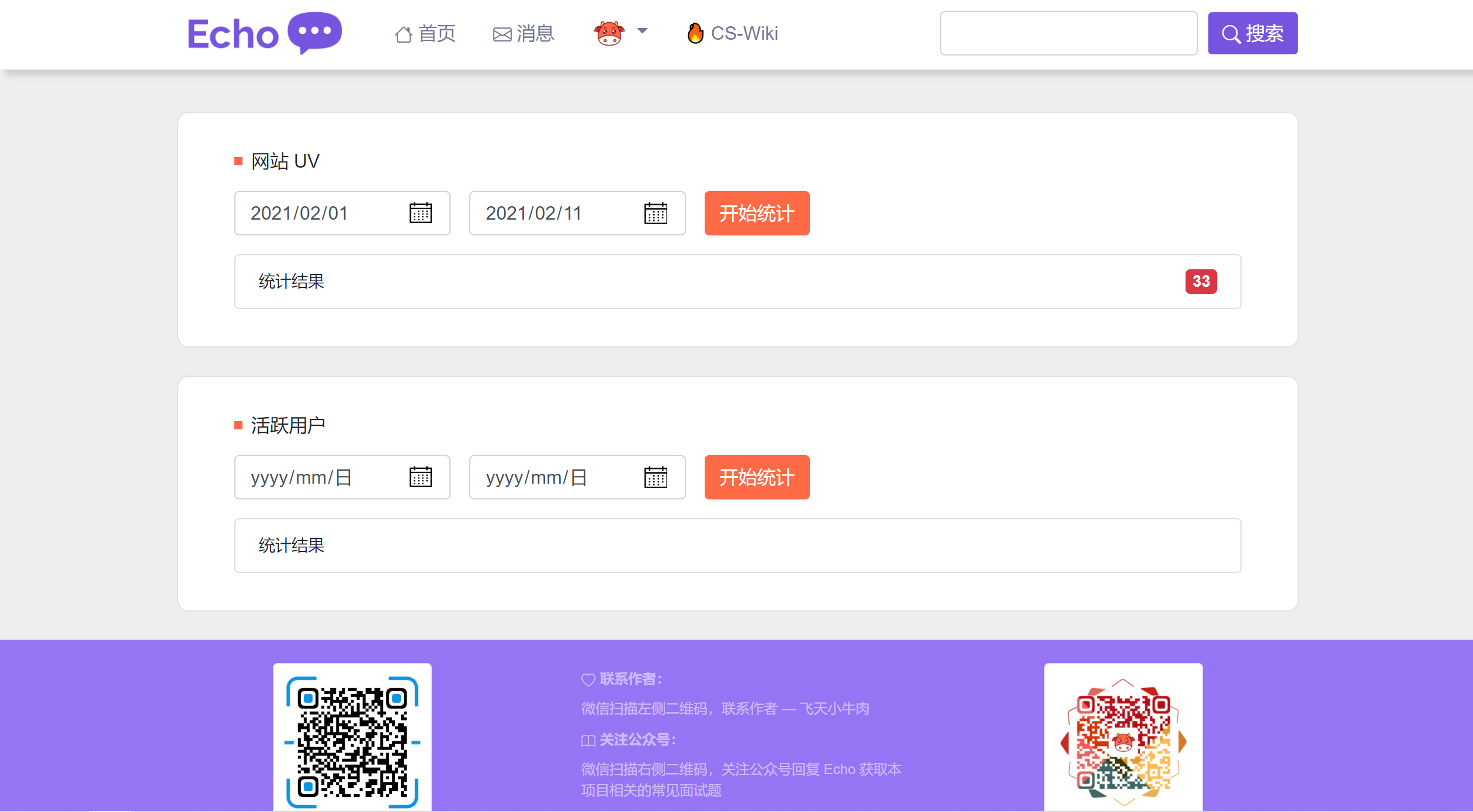

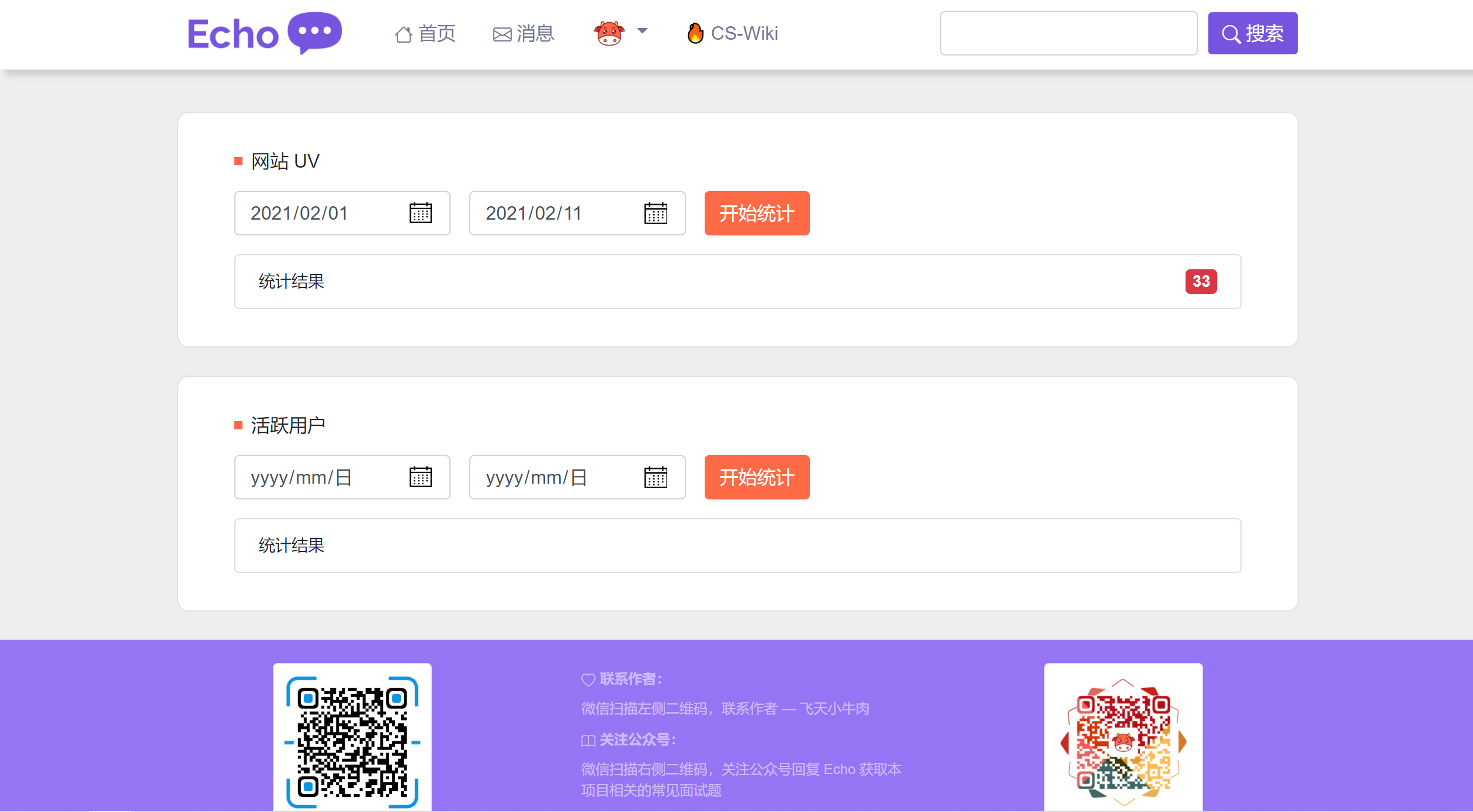

数据统计页:

|

||||

|

||||

|

||||

|

||||

搜索详情页:

|

||||

|

||||

|

||||

|

||||

## 🎨 功能列表

|

||||

|

||||

- [x] **注册**(MySQL)

|

||||

|

||||

|

||||

|

||||

- [x] **注册**

|

||||

|

||||

- 用户注册成功,将用户信息存入 MySQL,但此时该用户状态为未激活

|

||||

- 向用户发送激活邮件,用户点击链接则激活账号(Spring Mail)

|

||||

|

||||

- [x] **登录 | 登出**(MySQL、Redis)

|

||||

|

||||

|

||||

- [x] **登录 | 登出**

|

||||

|

||||

- 进入登录界面,动态生成验证码,并将验证码短暂存入 Redis(60 秒)

|

||||

|

||||

|

||||

- 用户登录成功(验证用户名、密码、验证码),生成登录凭证且设置状态为有效,并将登录凭证存入 Redis

|

||||

|

||||

|

||||

注意:登录凭证存在有效期,在所有的请求执行之前,都会检查凭证是否有效和是否过期,只要该用户的凭证有效并在有效期时间内,本次请求就会一直持有该用户信息(使用 ThreadLocal 持有用户信息)

|

||||

|

||||

|

||||

- 勾选记住我,则延长登录凭证有效时间

|

||||

|

||||

|

||||

- 用户登录成功,将用户信息短暂存入 Redis(1 小时)

|

||||

|

||||

|

||||

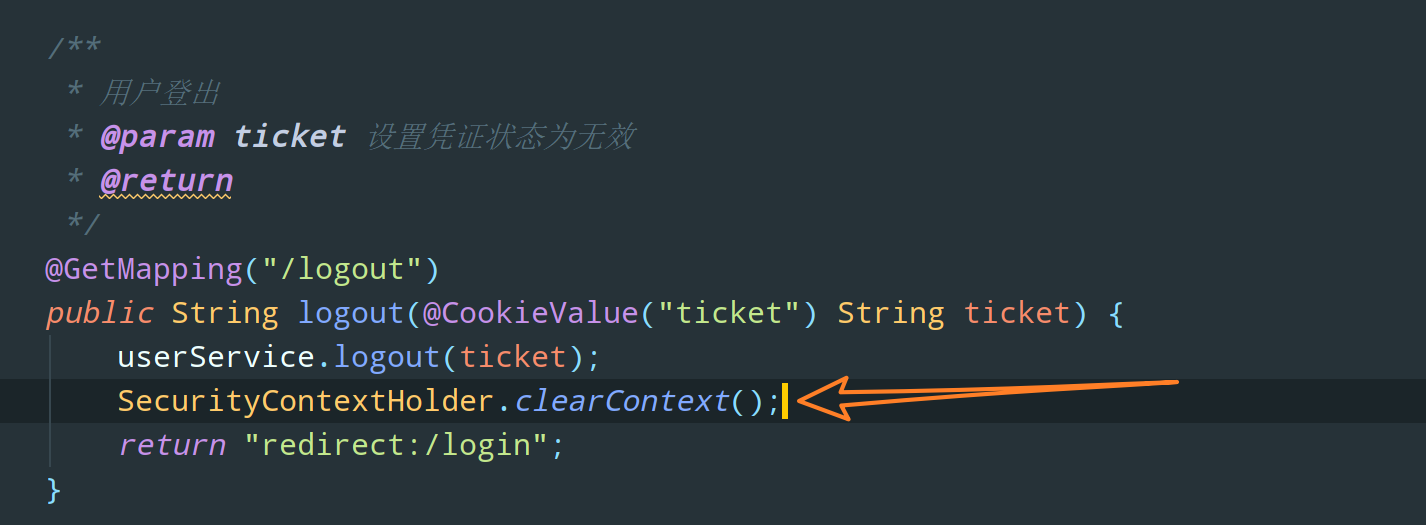

- 用户登出,将凭证状态设为无效,并更新 Redis 中该用户的登录凭证信息

|

||||

|

||||

- [x] **账号设置**(MySQL)

|

||||

|

||||

|

||||

- [x] **账号设置**

|

||||

|

||||

- 修改头像

|

||||

- 将用户选择的头像图片文件上传至七牛云服务器

|

||||

- 修改密码

|

||||

|

||||

- [x] **帖子模块**(MySQL)

|

||||

|

||||

|

||||

- [x] **帖子模块**

|

||||

|

||||

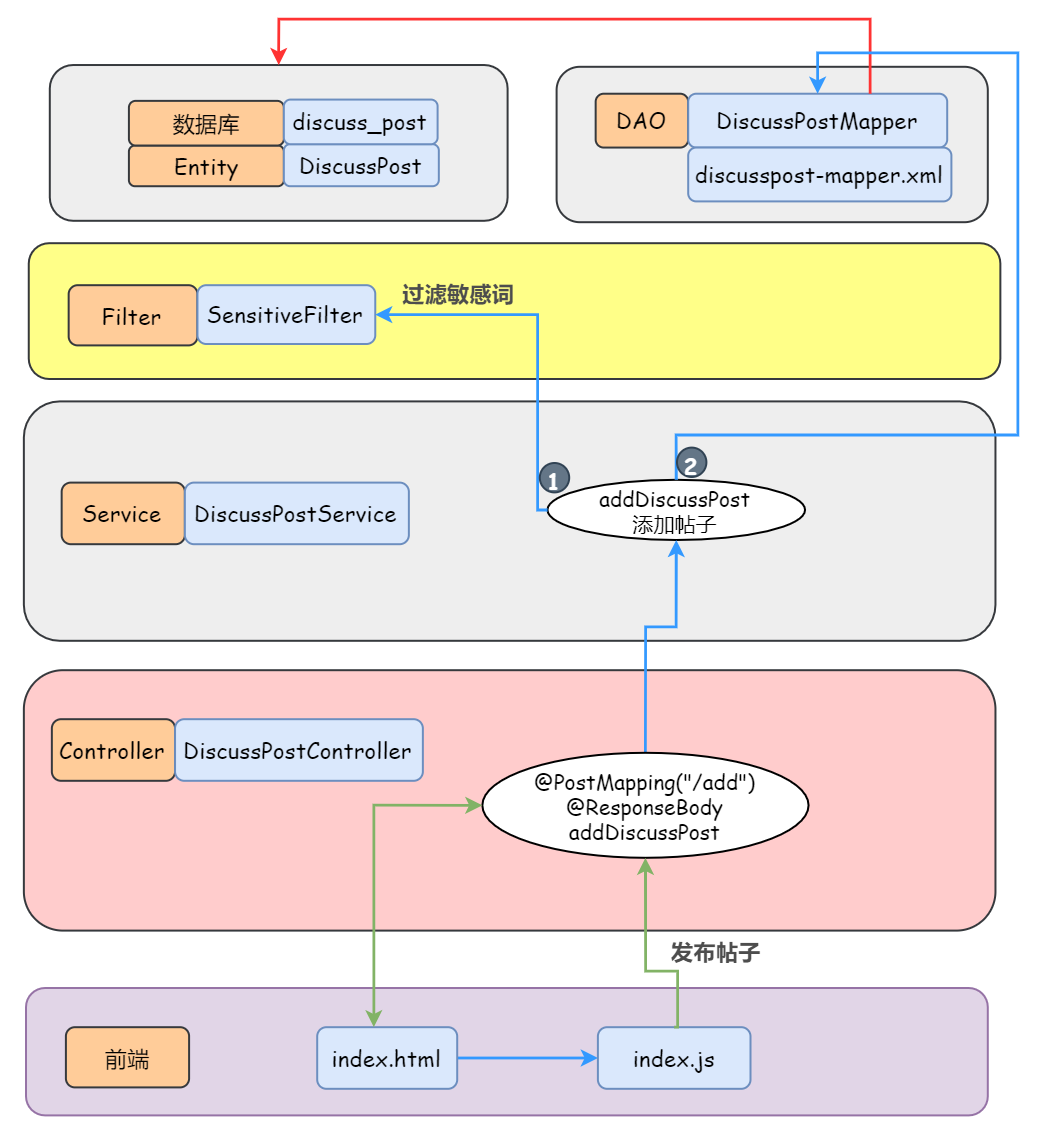

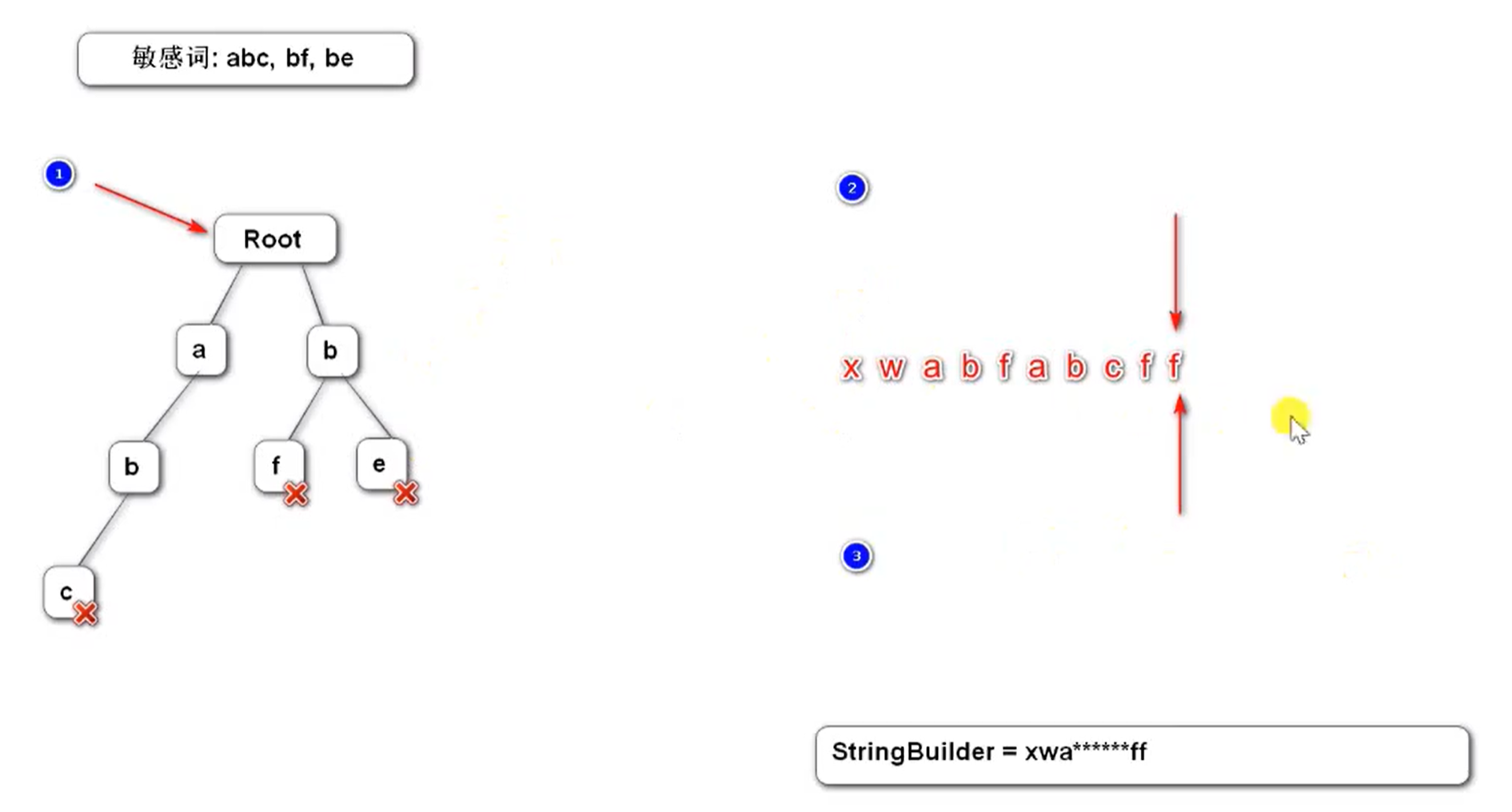

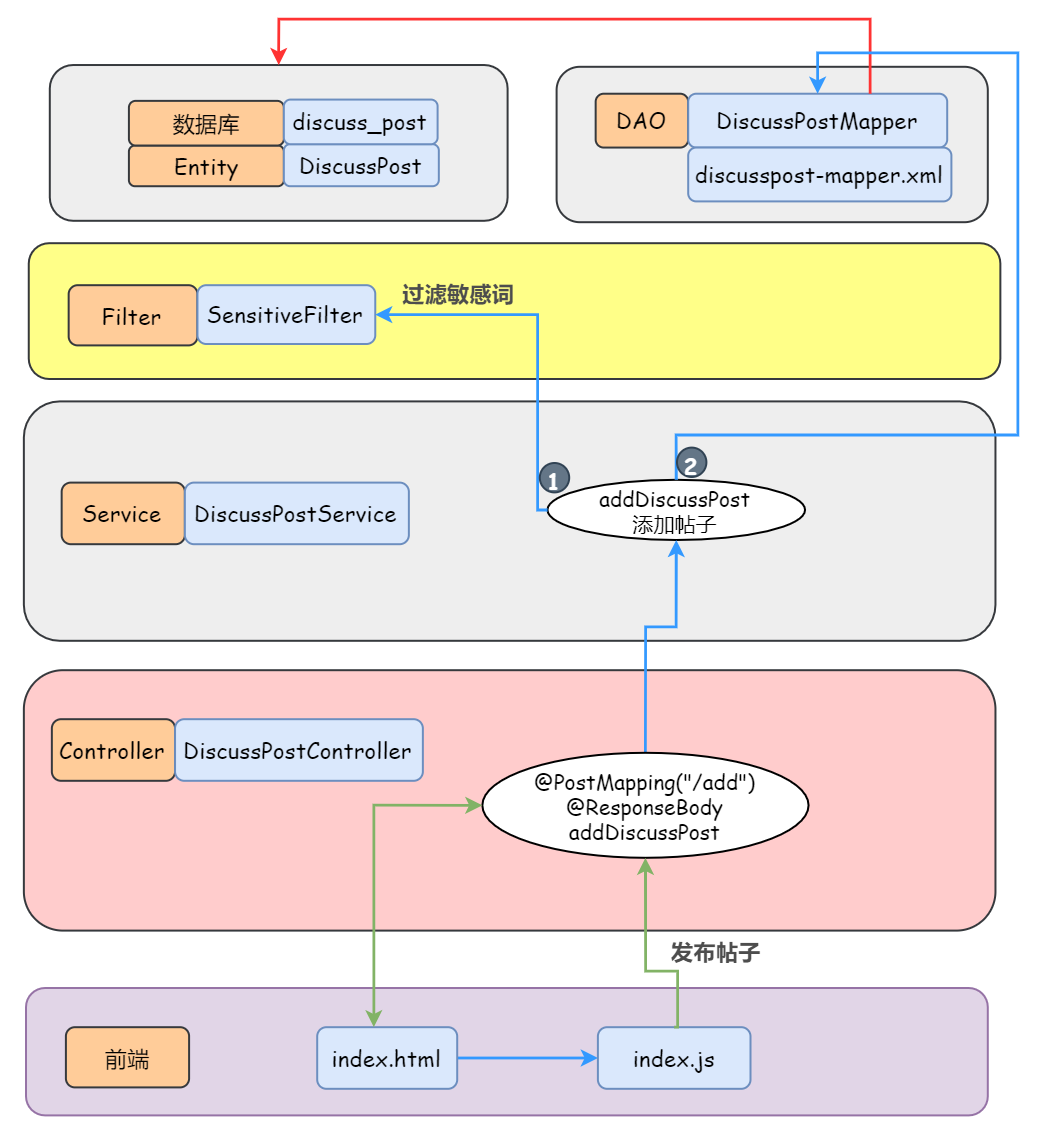

- 发布帖子(过滤敏感词),将其存入 MySQL

|

||||

- 分页显示所有的帖子

|

||||

- 支持按照 “发帖时间” 显示

|

||||

@ -81,17 +136,17 @@

|

||||

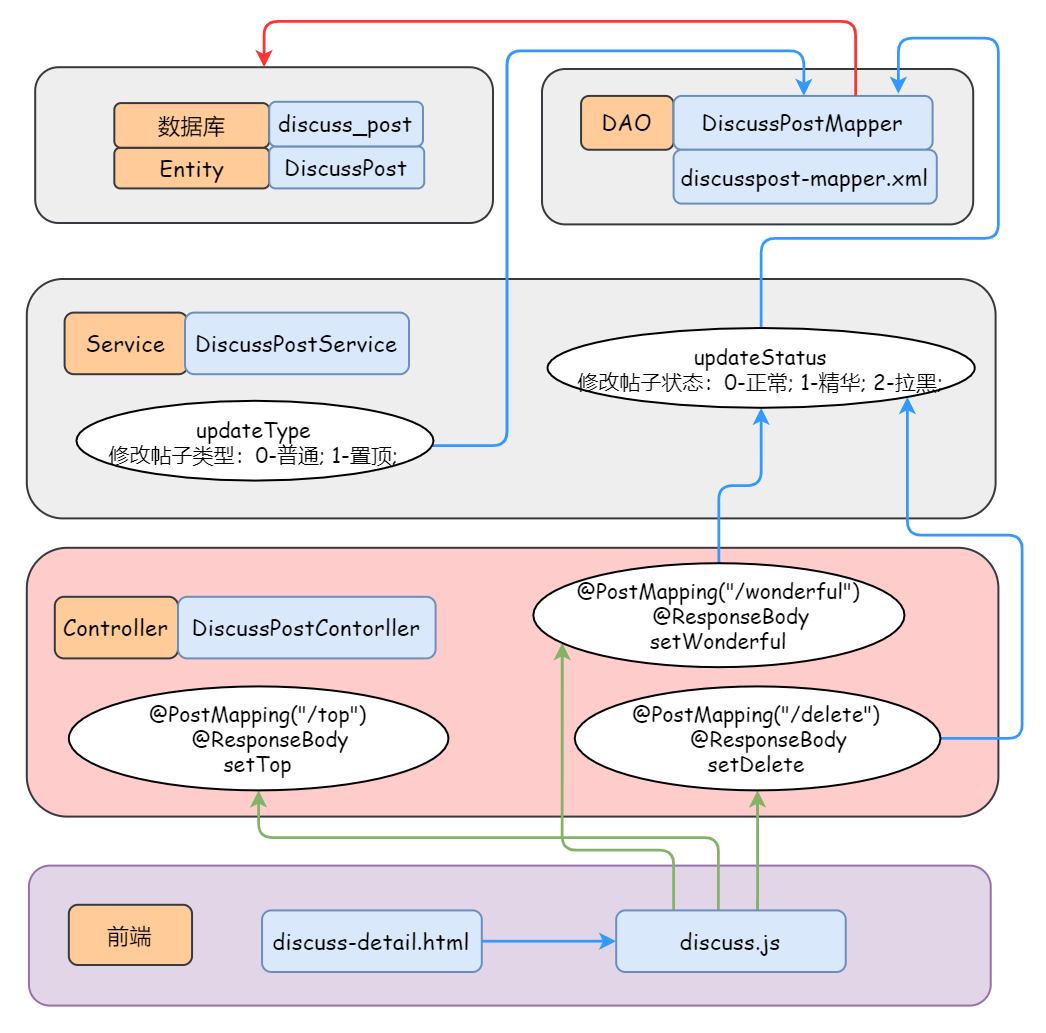

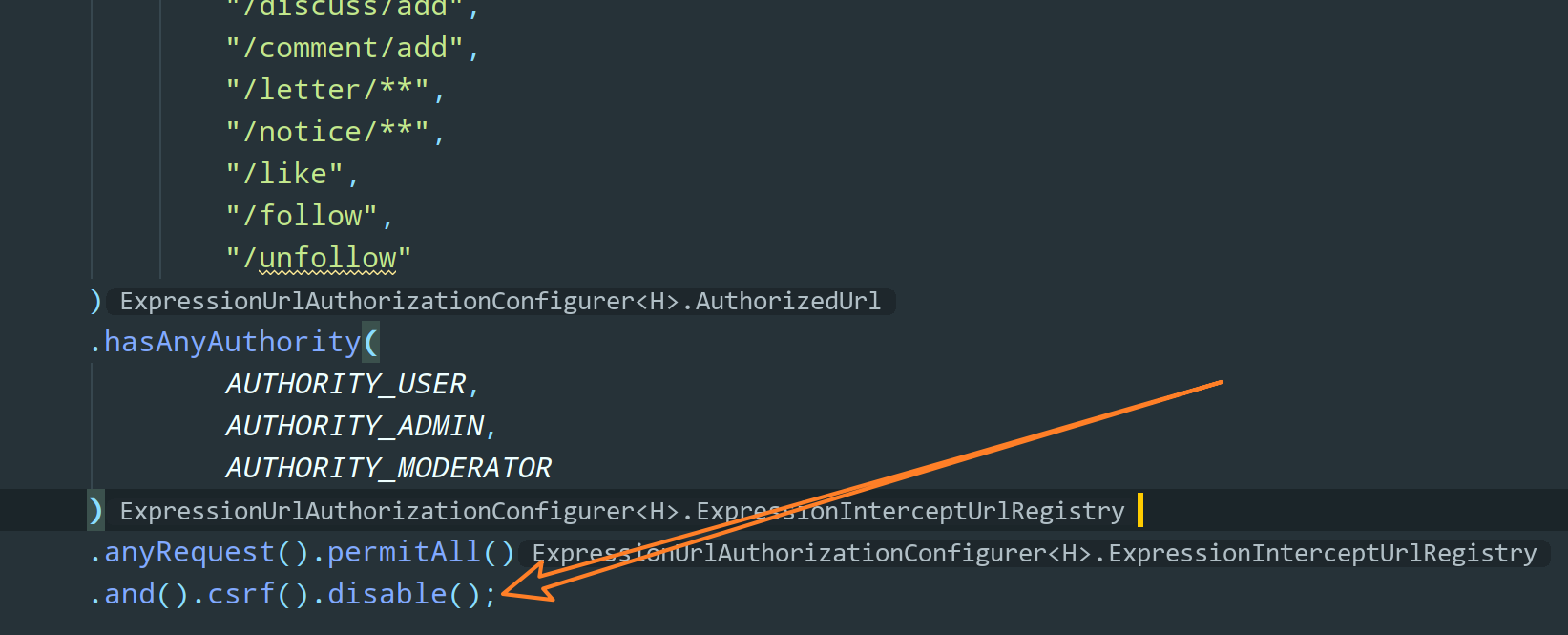

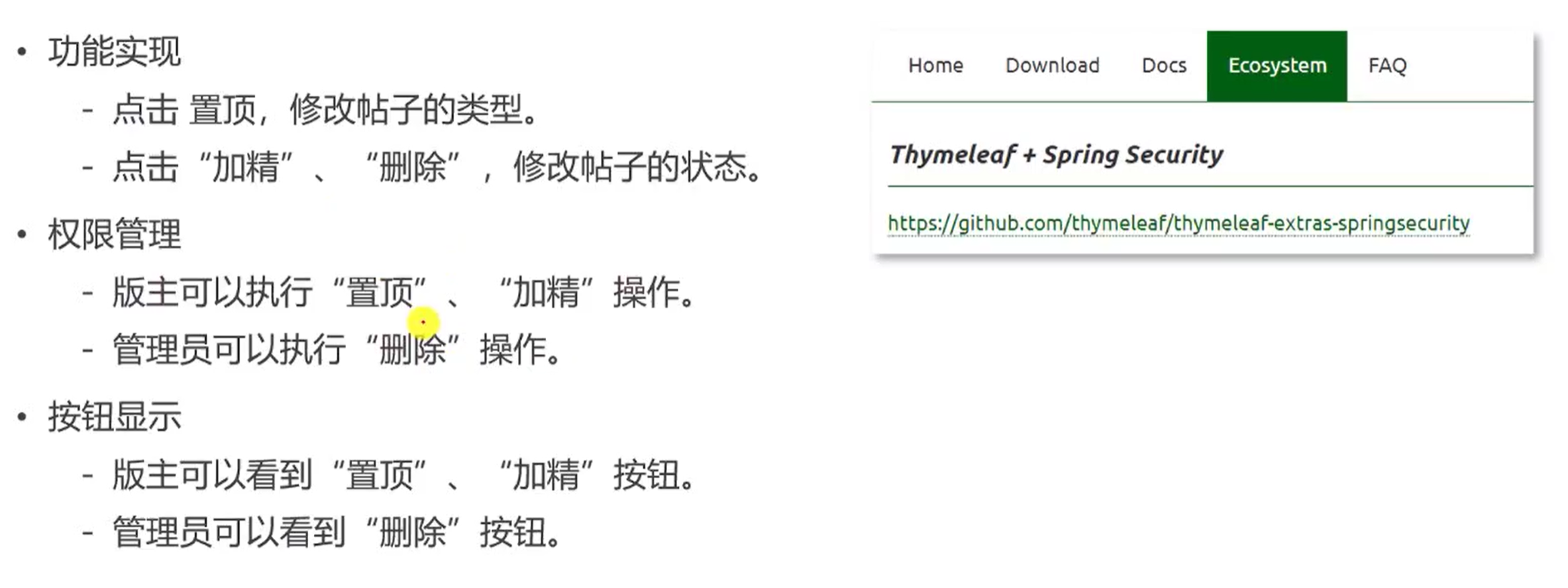

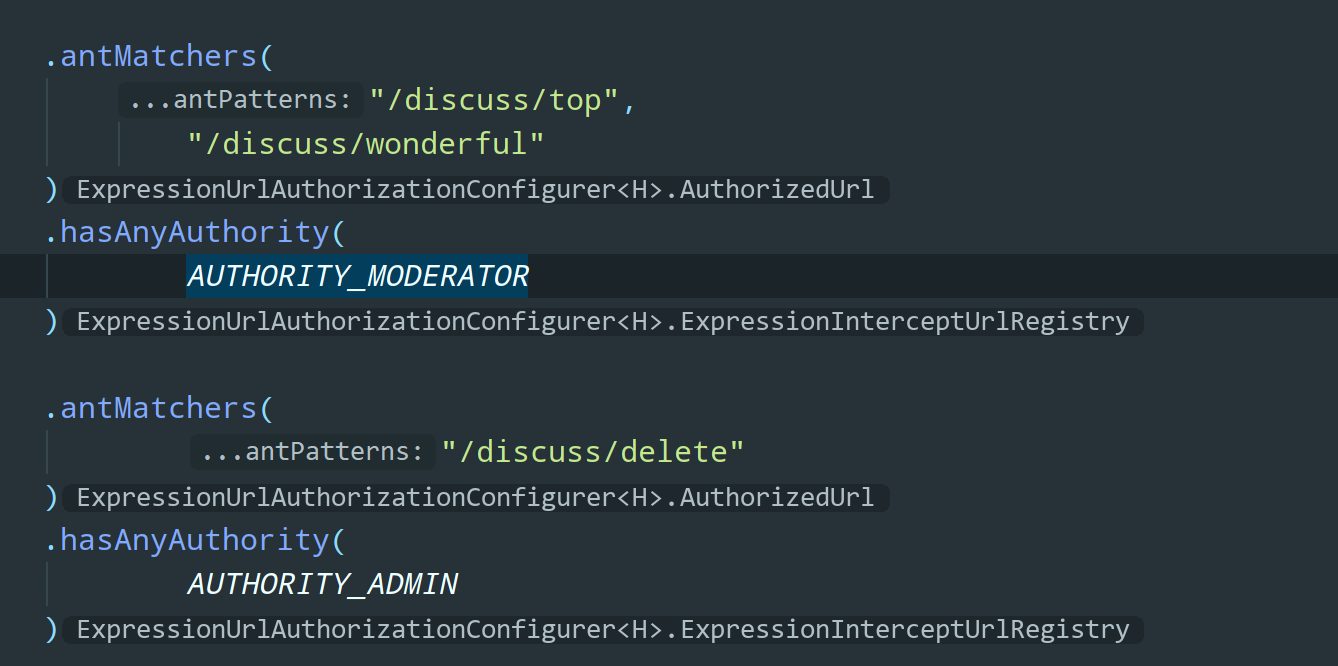

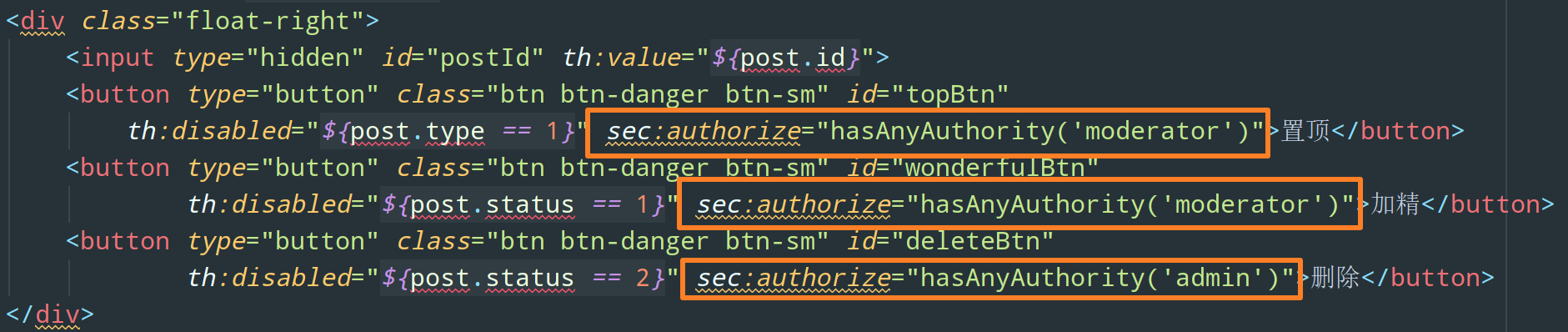

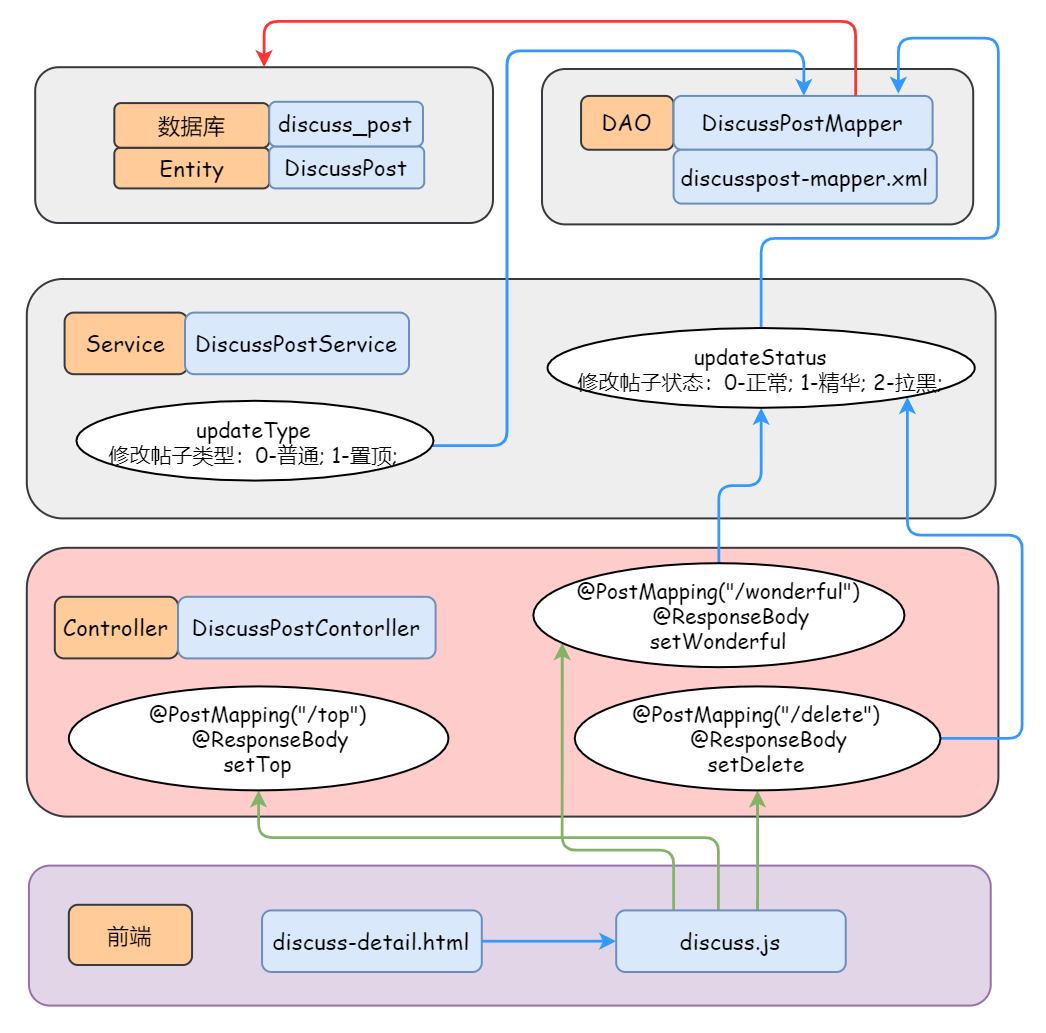

- “版主” 可以看到帖子的置顶和加精按钮并执行相应操作

|

||||

- “管理员” 可以看到帖子的删除按钮并执行相应操作

|

||||

- “普通用户” 无法看到帖子的置顶、加精、删除按钮,也无法执行相应操作

|

||||

|

||||

- [x] **评论模块**(MySQL)

|

||||

|

||||

|

||||

- [x] **评论模块**

|

||||

|

||||

- 发布对帖子的评论(过滤敏感词),将其存入 MySQL

|

||||

- 分页显示评论

|

||||

- 发布对评论的回复(过滤敏感词)

|

||||

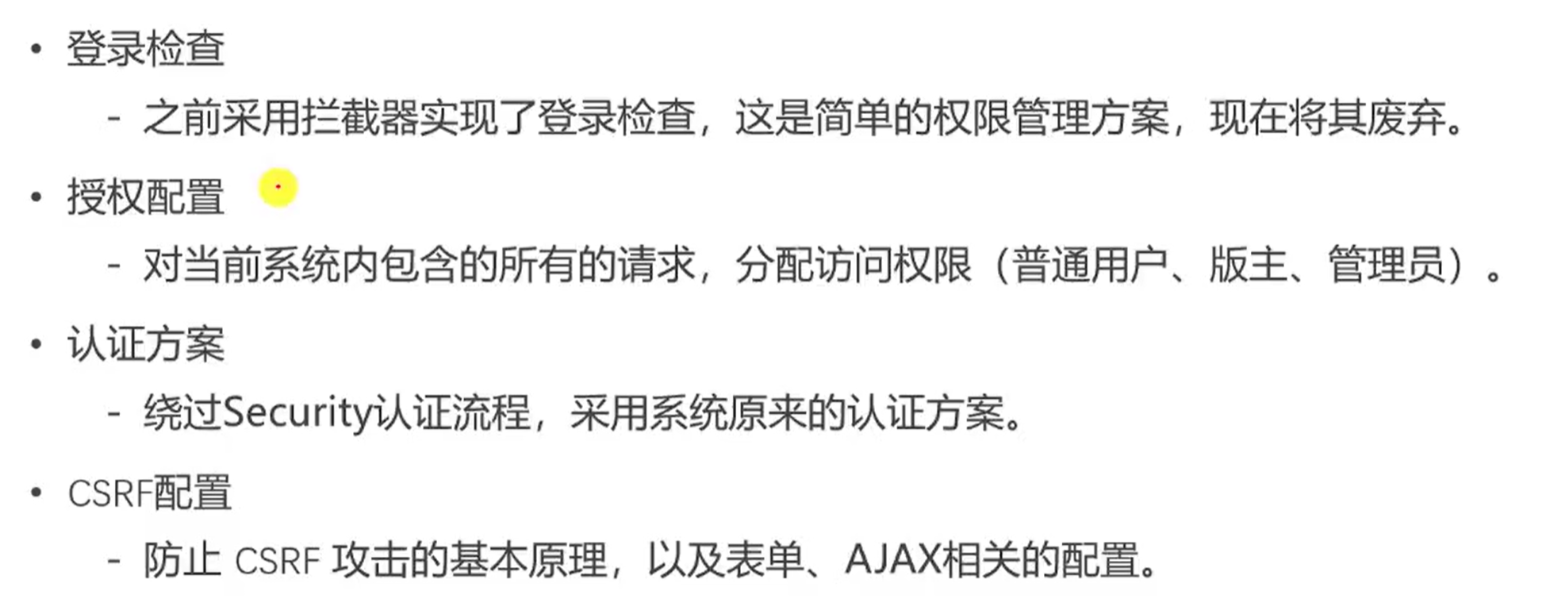

- 权限管理(Spring Security)

|

||||

- 未登录用户无法使用评论功能

|

||||

|

||||

- [x] **私信模块**(MySQL)

|

||||

|

||||

|

||||

- [x] **私信模块**

|

||||

|

||||

- 发送私信(过滤敏感词)

|

||||

- 私信列表

|

||||

- 查询当前用户的会话列表

|

||||

@ -103,33 +158,38 @@

|

||||

- 支持分页显示

|

||||

- 权限管理(Spring Security)

|

||||

- 未登录用户无法使用私信功能

|

||||

|

||||

- [x] **统一处理异常**(404、500)

|

||||

|

||||

|

||||

- [x] **统一处理 404 / 500 异常**

|

||||

|

||||

- 普通请求异常

|

||||

- 异步请求异常

|

||||

|

||||

|

||||

- [x] **统一记录日志**

|

||||

|

||||

- [x] **点赞模块**(Redis)

|

||||

|

||||

- 点赞

|

||||

- 获赞

|

||||

- [x] **点赞模块**

|

||||

|

||||

- 支持对帖子、评论/回复点赞

|

||||

- 第 1 次点赞,第 2 次取消点赞

|

||||

- 首页统计帖子的点赞数量

|

||||

- 详情页统计帖子和评论/回复的点赞数量

|

||||

- 详情页显示当前登录用户的点赞状态(赞过了则显示已赞)

|

||||

|

||||

- 统计我的获赞数量

|

||||

- 权限管理(Spring Security)

|

||||

- 未登录用户无法使用点赞相关功能

|

||||

|

||||

- [x] **关注模块**(Redis)

|

||||

|

||||

|

||||

- [x] **关注模块**

|

||||

|

||||

- 关注功能

|

||||

- 取消关注功能

|

||||

- 统计用户的关注数和粉丝数

|

||||

- 关注列表(查询某个用户关注的人),支持分页

|

||||

- 粉丝列表(查询某个用户的粉丝),支持分页

|

||||

- 我的关注列表(查询某个用户关注的人),支持分页

|

||||

- 我的粉丝列表(查询某个用户的粉丝),支持分页

|

||||

- 权限管理(Spring Security)

|

||||

- 未登录用户无法使用关注相关功能

|

||||

|

||||

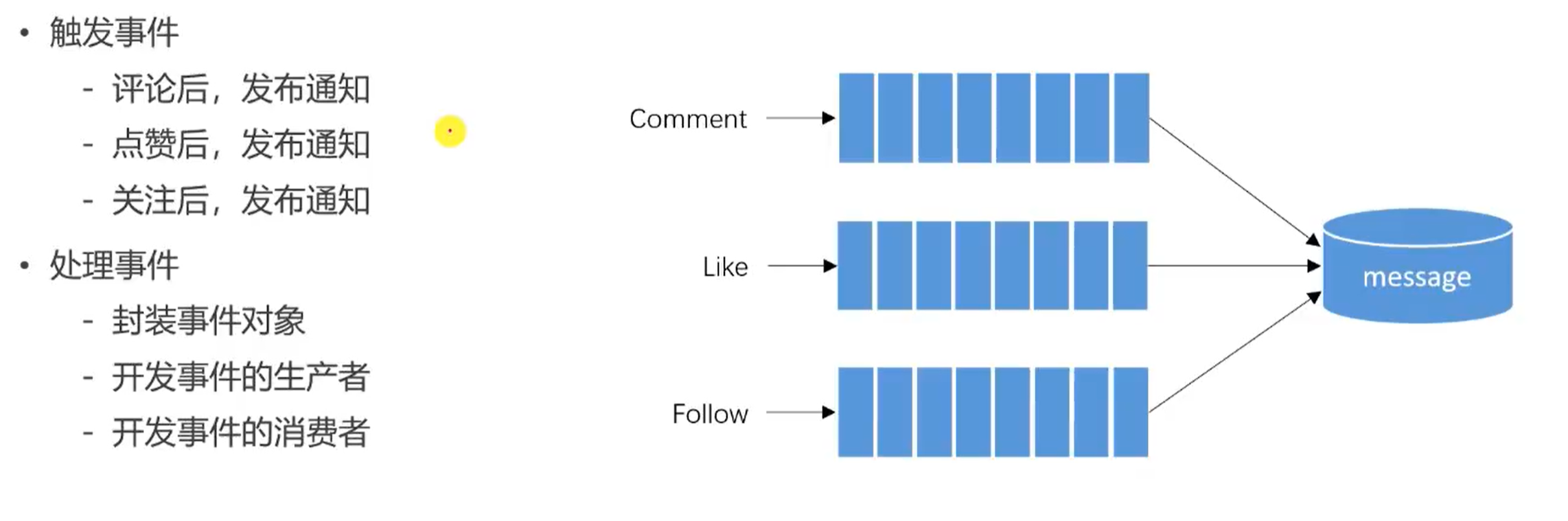

- [x] **系统通知模块**(Kafka)

|

||||

|

||||

|

||||

- [x] **系统通知模块**

|

||||

|

||||

- 通知列表

|

||||

- 显示评论、点赞、关注三种类型的通知

|

||||

- 通知详情

|

||||

@ -141,8 +201,8 @@

|

||||

- 导航栏显示所有消息的未读数量(未读私信 + 未读系统通知)

|

||||

- 权限管理(Spring Security)

|

||||

- 未登录用户无法使用系统通知功能

|

||||

|

||||

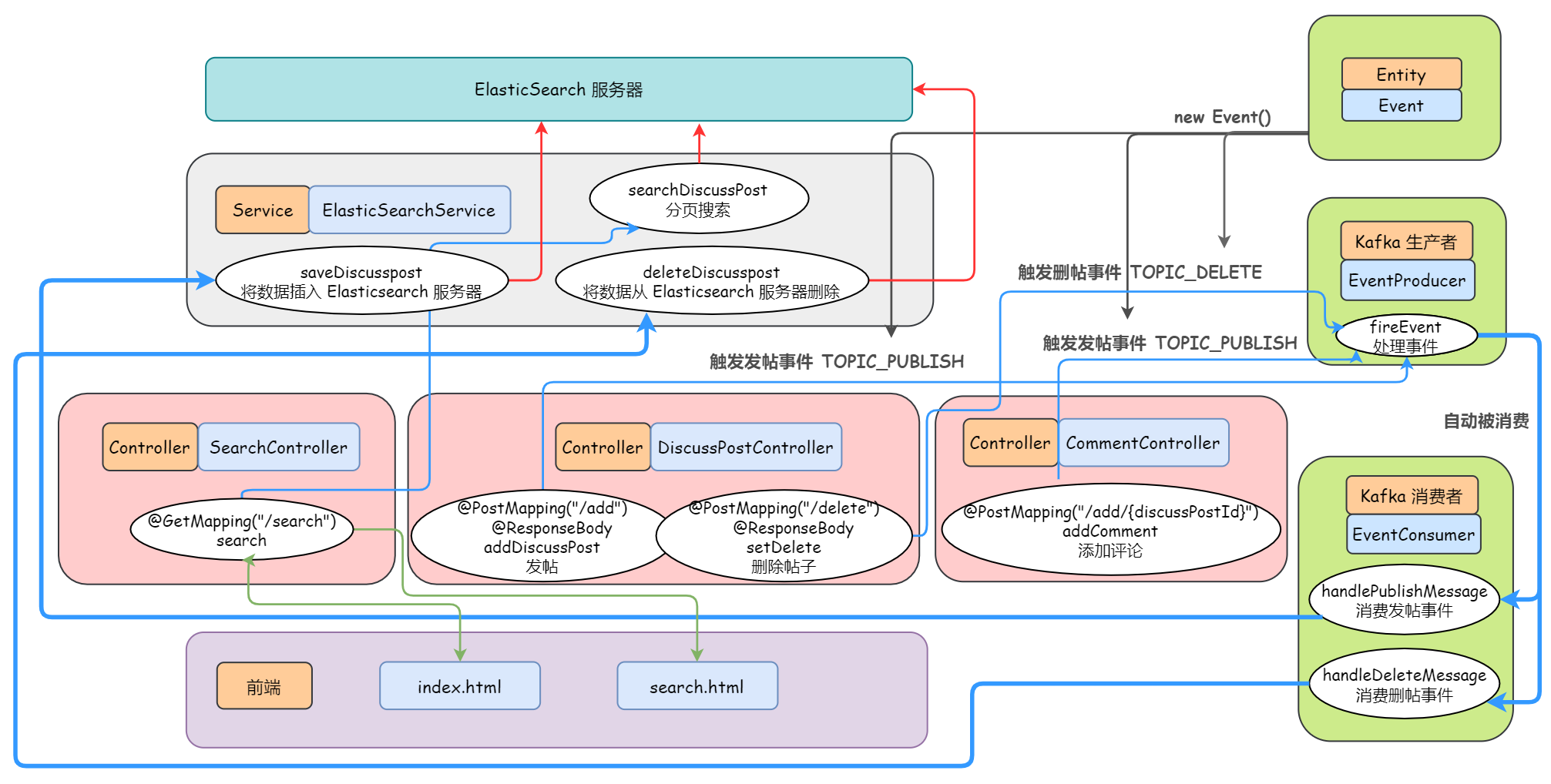

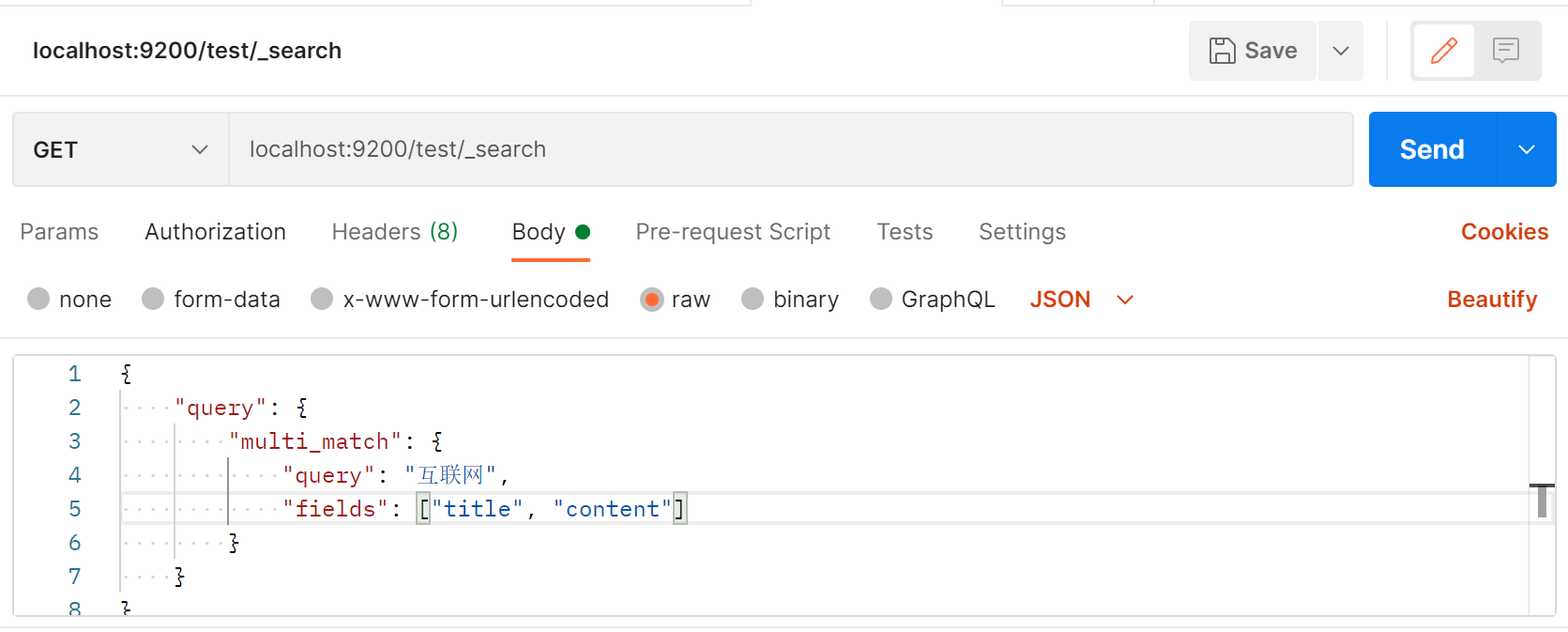

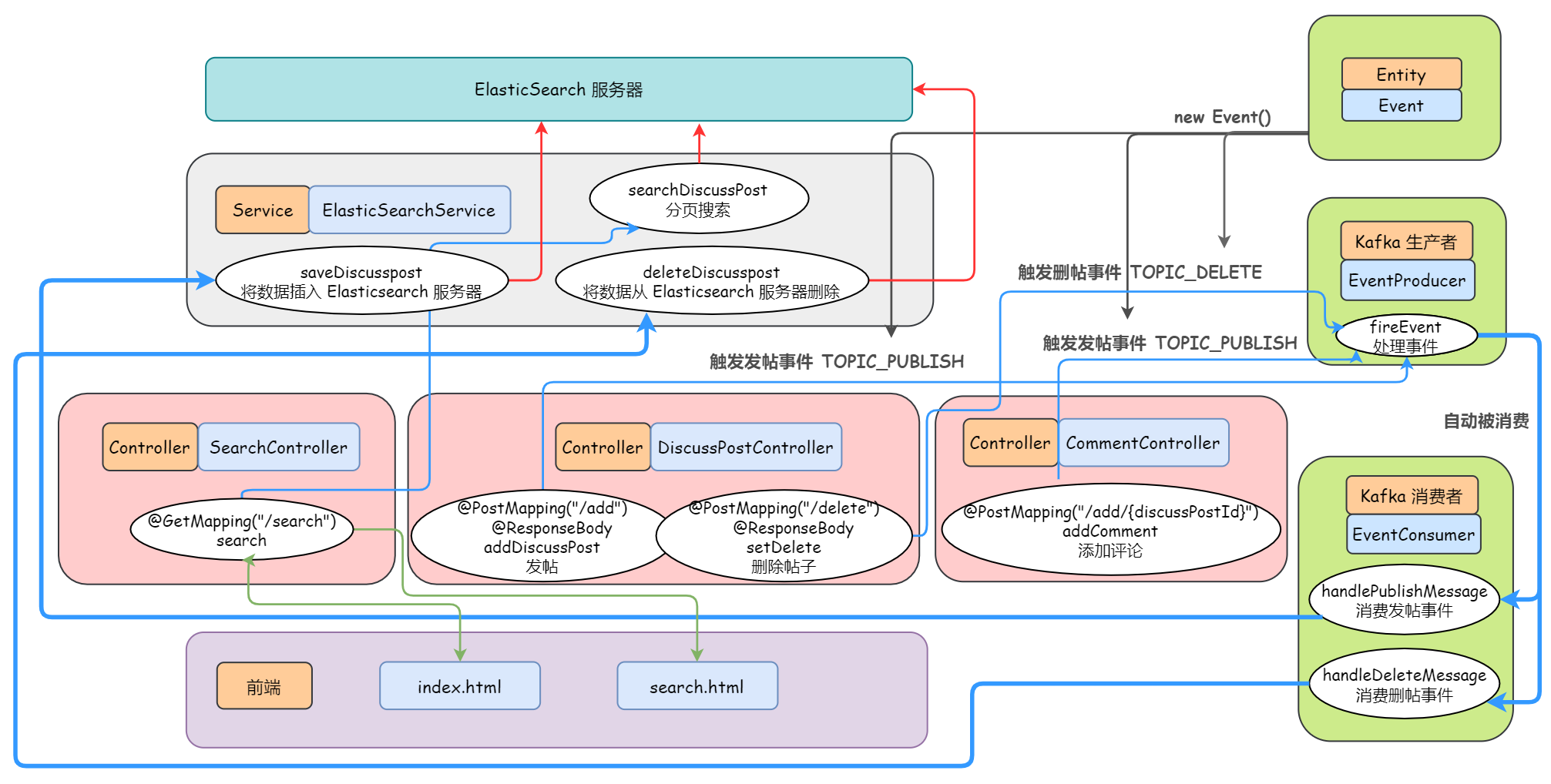

- [x] **搜索模块**(Elasticsearch + Kafka)

|

||||

|

||||

- [x] **搜索模块**

|

||||

|

||||

- 发布事件

|

||||

- 发布帖子时,通过消息队列将帖子异步地提交到 Elasticsearch 服务器

|

||||

@ -163,20 +223,59 @@

|

||||

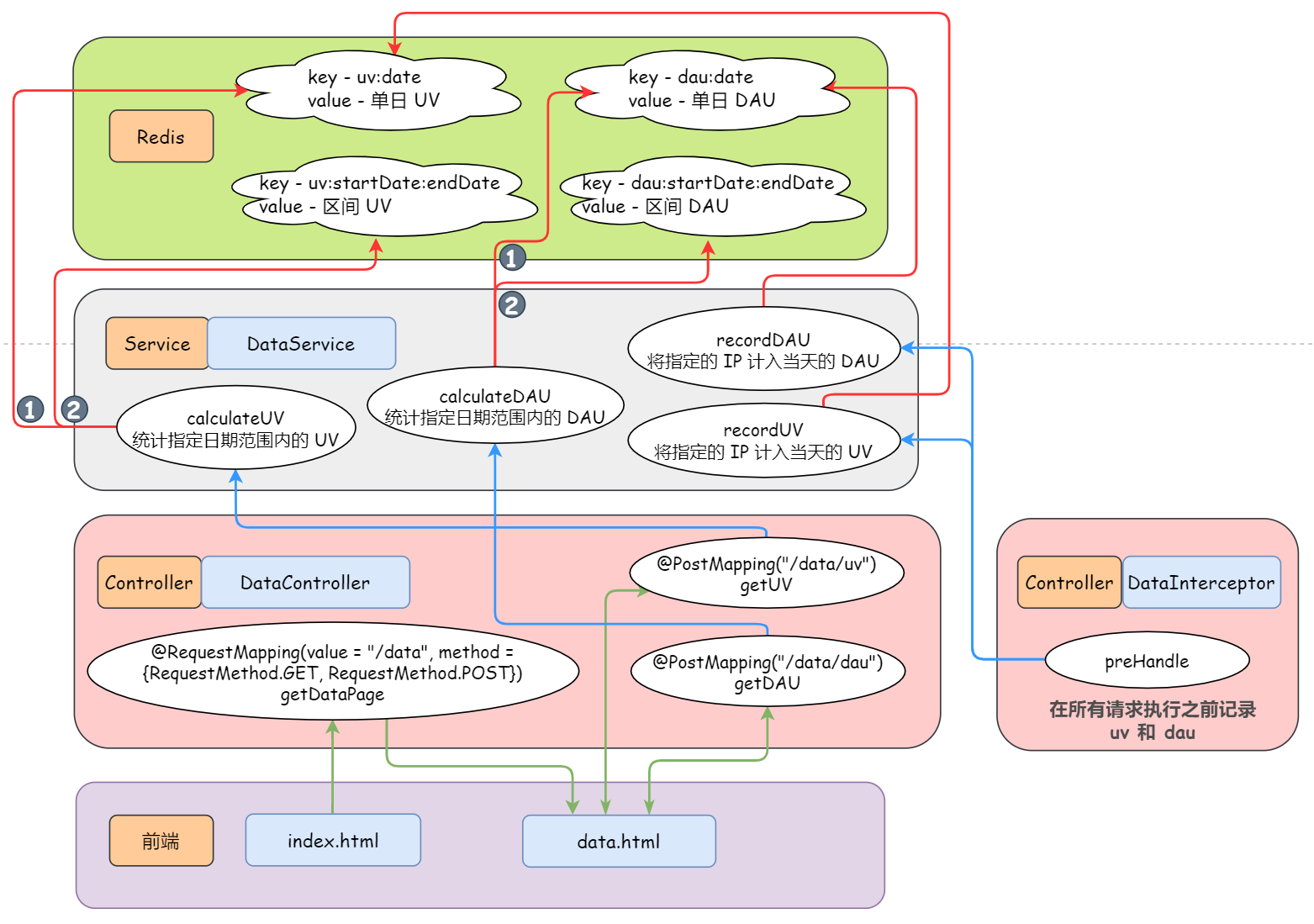

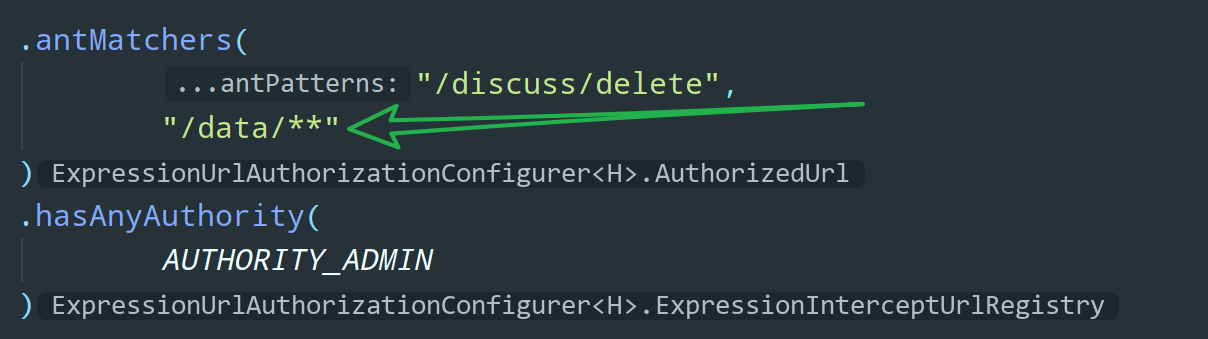

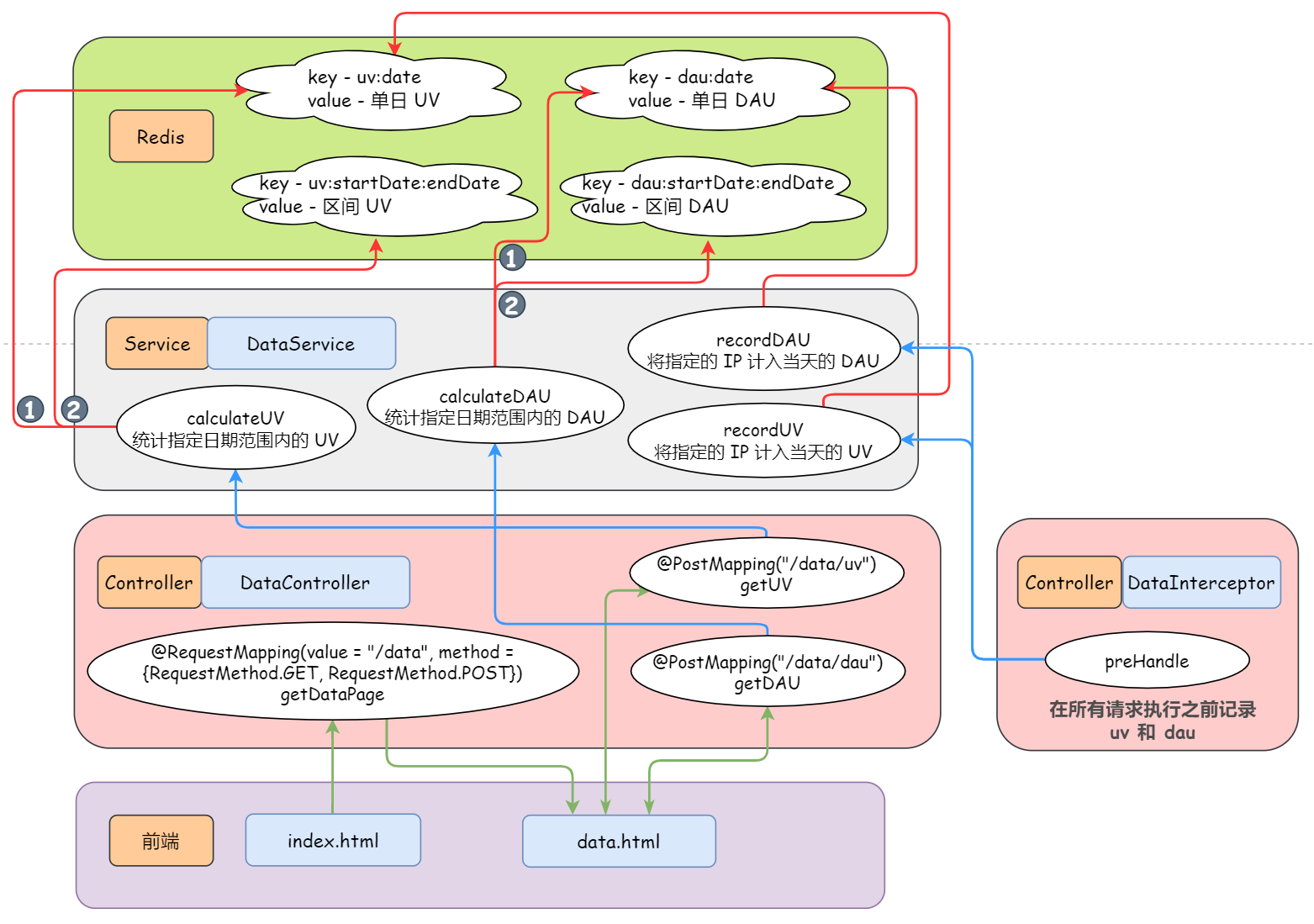

- 权限管理(Spring Security)

|

||||

- 只有管理员可以查看网站数据统计

|

||||

|

||||

- [ ] 文件上传

|

||||

- [x] 优化网站性能

|

||||

|

||||

- [ ] 优化网站性能

|

||||

- 使用本地缓存 Caffeine 缓存热帖列表以及所有用户帖子的总数

|

||||

|

||||

## 🔐 待实现及优化

|

||||

|

||||

- [ ] 修改用户名

|

||||

- [ ] 查询我的帖子

|

||||

- [ ] 查询我的评论

|

||||

以下是我个人发现的本项目存在的问题,但是暂时没有头绪无法解决,集思广益,欢迎各位小伙伴提 PR 解决:

|

||||

|

||||

- [ ] 注册模块无法正常跳转到操作提示界面(本地运行没有问题)

|

||||

- [ ] 评论功能的前端显示部分存在 Bug

|

||||

- [ ] 查询我的评论(未完善)

|

||||

|

||||

以下是我觉得本项目还可以添加的功能,同样欢迎各位小伙伴提 issue 指出还可以增加哪些功能,或者直接提 PR 实现该功能:

|

||||

|

||||

- [ ] 忘记密码(发送邮件找回密码)

|

||||

- [ ] 查询我的点赞

|

||||

- [ ] 管理员对帖子的二次点击取消置顶功能

|

||||

- [ ] 管理员对已删除帖子的恢复功能

|

||||

- [ ] 管理员对已删除帖子的恢复功能(本项目中的删除帖子并未将其从数据库中删除,只是将其状态设置为了拉黑)

|

||||

|

||||

## 🎀 界面展示

|

||||

## 🌱 本地运行

|

||||

|

||||

各位如果需要将项目部署在本地进行测试,以下环境请提前备好:

|

||||

|

||||

- Java 8

|

||||

- MySQL 5.7

|

||||

- Redis

|

||||

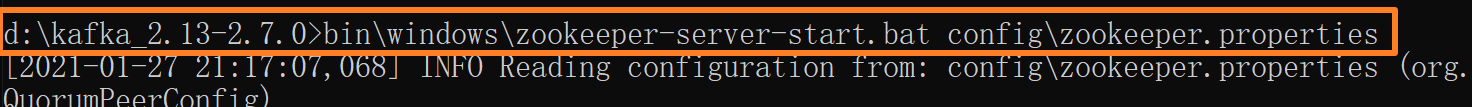

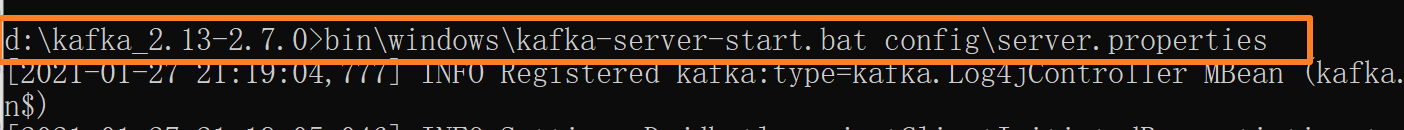

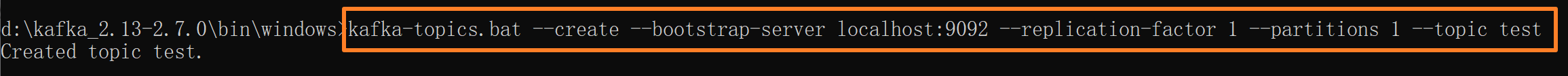

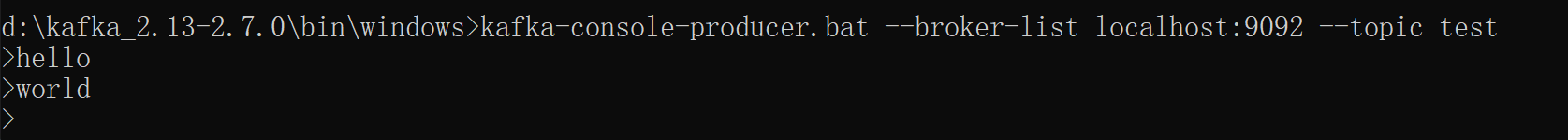

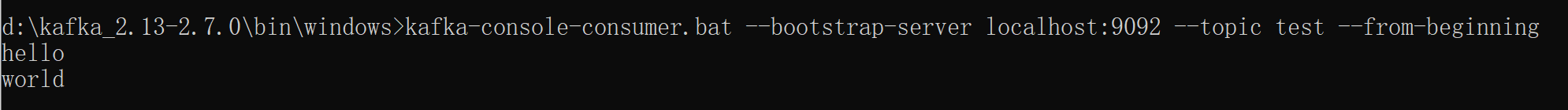

- Kafka 2.13-2.7.0

|

||||

- Elasticsearch 6.4.3

|

||||

|

||||

然后**修改配置文件中的信息为你自己的本地环境,直接运行是运行不了的**,而且相关私密信息我全部用 xxxxxxx 代替了。

|

||||

|

||||

本地运行需要修改的配置文件信息如下:

|

||||

|

||||

1)`application-develop.properties`:

|

||||

|

||||

- MySQL

|

||||

- Spring Mail(邮箱需要开启 SMTP 服务)

|

||||

- Kafka:consumer.group-id(该字段见 Kafka 安装包中的 consumer.proerties,可自行修改, 修改完毕后需要重启 Kafka)

|

||||

- Elasticsearch:cluster-name(该字段见 Elasticsearch 安装包中的 elasticsearch.yml,可自行修改)

|

||||

- 七牛云(需要新建一个七牛云的对象存储空间,用来存放上传的头像图片)

|

||||

|

||||

2)`logback-spring-develop.xml`:

|

||||

|

||||

- LOG_PATH:日志存放的位置

|

||||

|

||||

每次运行需要打开:

|

||||

|

||||

- MySQL

|

||||

- Redis

|

||||

- Elasticsearch

|

||||

- Kafka

|

||||

|

||||

另外,还需要事件建好数据库表,详细见下文。

|

||||

|

||||

## 📜 数据库设计

|

||||

|

||||

@ -240,22 +339,6 @@ CREATE TABLE `comment` (

|

||||

) ENGINE=InnoDB AUTO_INCREMENT=247 DEFAULT CHARSET=utf8;

|

||||

```

Login ticket `login_ticket` (deprecated; Redis is used instead):

```sql
DROP TABLE IF EXISTS `login_ticket`;
SET character_set_client = utf8mb4 ;
CREATE TABLE `login_ticket` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `user_id` int(11) NOT NULL,
  `ticket` varchar(45) NOT NULL COMMENT 'ticket',
  `status` int(11) DEFAULT '0' COMMENT 'ticket status: 0 - valid; 1 - invalid;',
  `expired` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'ticket expiry time',
  PRIMARY KEY (`id`),
  KEY `index_ticket` (`ticket`(20))
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
```

Private message `message`:

```sql

|

||||

@ -276,5 +359,179 @@ CREATE TABLE `message` (

|

||||

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

|

||||

```

## 📖 Common interview questions

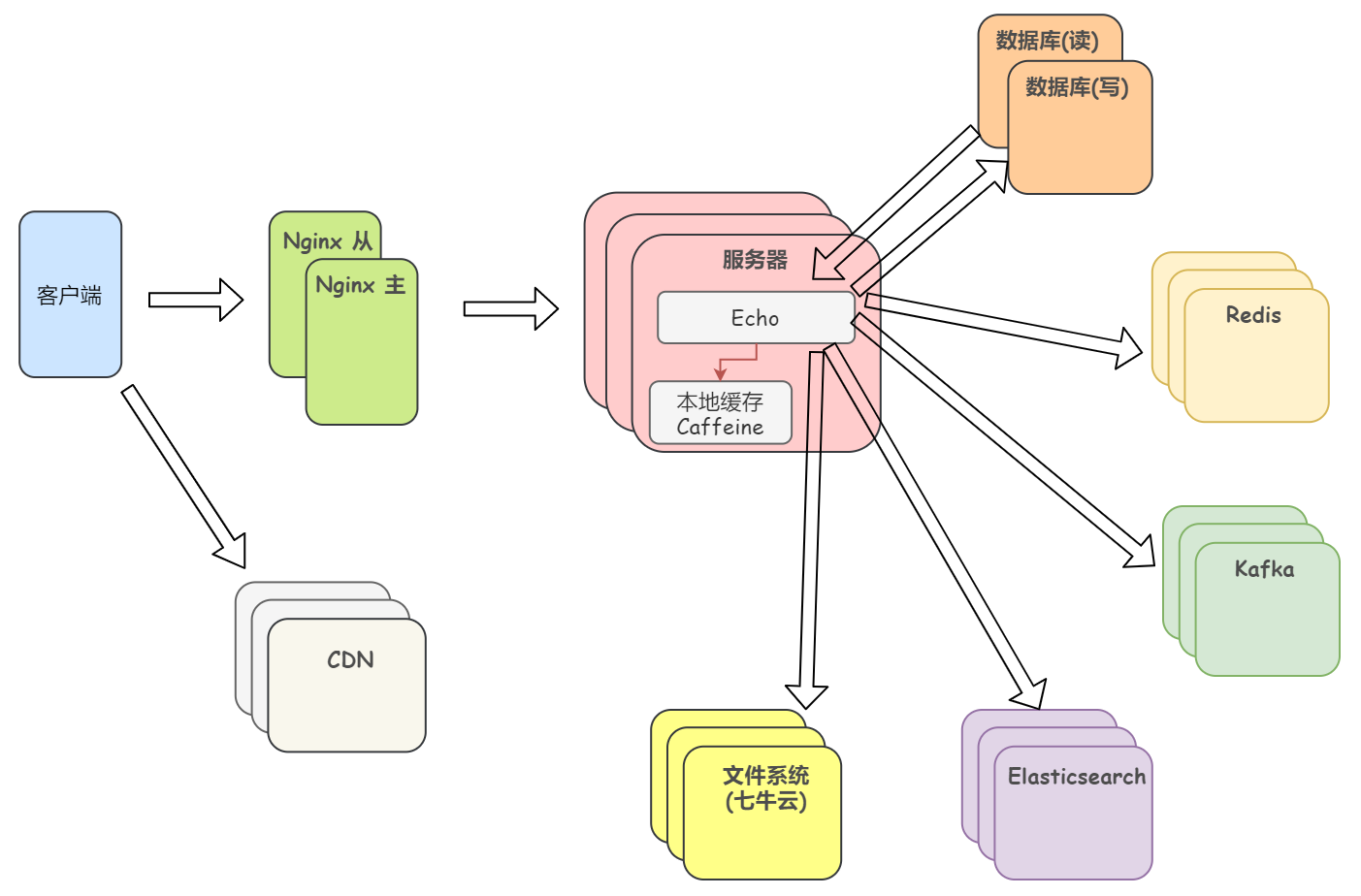

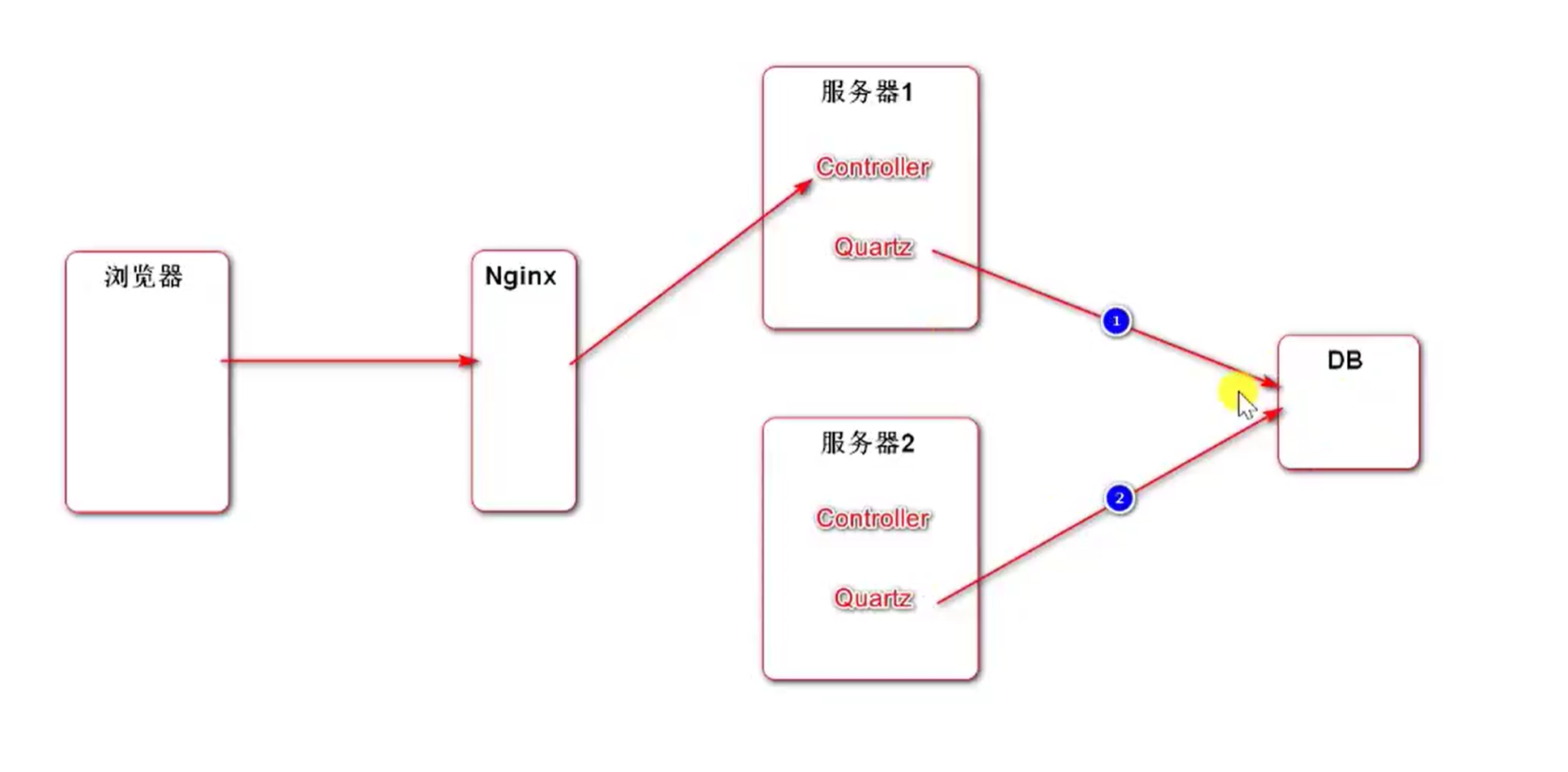

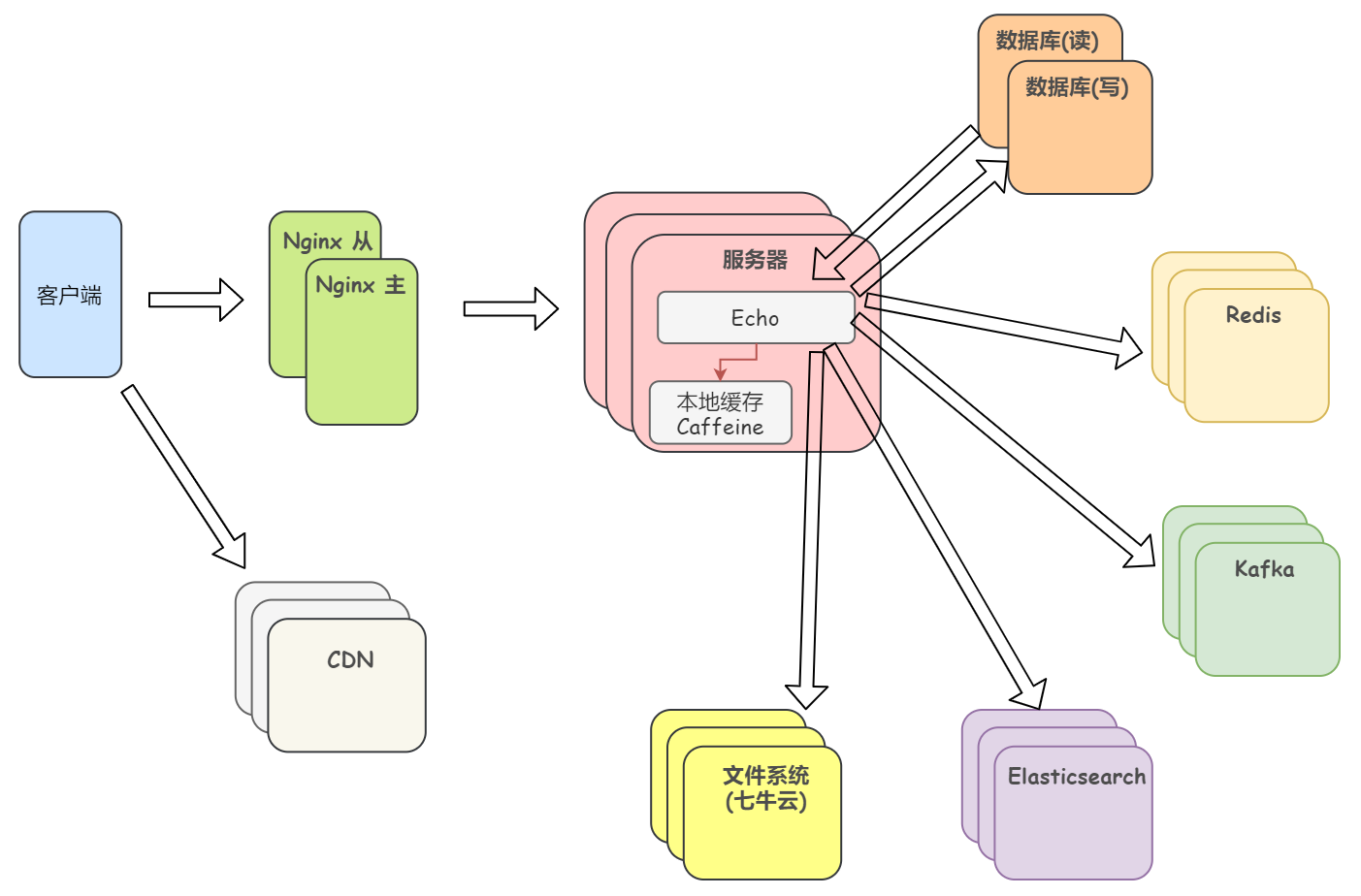

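## 🌌 Ideal deployment architecture

I deployed only one instance of each component; the ideal deployment architecture is shown below:

## 🎯 Feature logic diagrams

I drew some not-so-rigorous diagrams to help you sort out the flow.

> One-way green arrow:
>
> - Front-end template -> Controller: the template contains a link that is handled by this Controller
> - Controller -> front-end template: the Controller passes data to, or redirects to, this template
>
> Two-way green arrow: the Controller and the front-end template pass parameters to each other or use each other's parameters
>
> One-way blue arrow: A -> B means method A calls method B
>
> One-way red arrow: a database or cache operation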

### 注册

|

||||

|

||||

- 用户注册成功,将用户信息存入 MySQL,但此时该用户状态为未激活

|

||||

- 向用户发送激活邮件,用户点击链接则激活账号(Spring Mail)

|

||||

|

||||

|

||||

|

||||

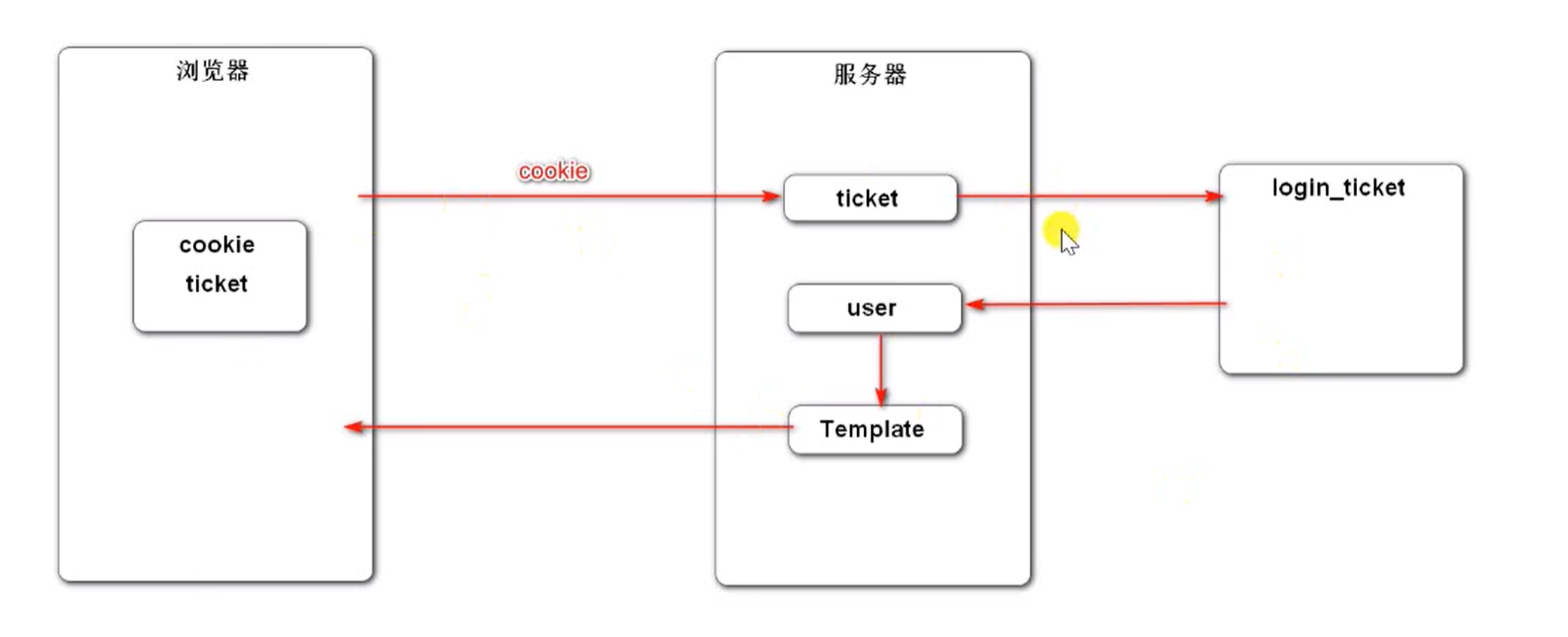

### 登录 | 登出

|

||||

|

||||

- 进入登录界面,动态生成验证码,并将验证码短暂存入 Redis(60 秒)

|

||||

|

||||

- 用户登录成功(验证用户名、密码、验证码),生成登录凭证且设置状态为有效,并将登录凭证存入 Redis

|

||||

|

||||

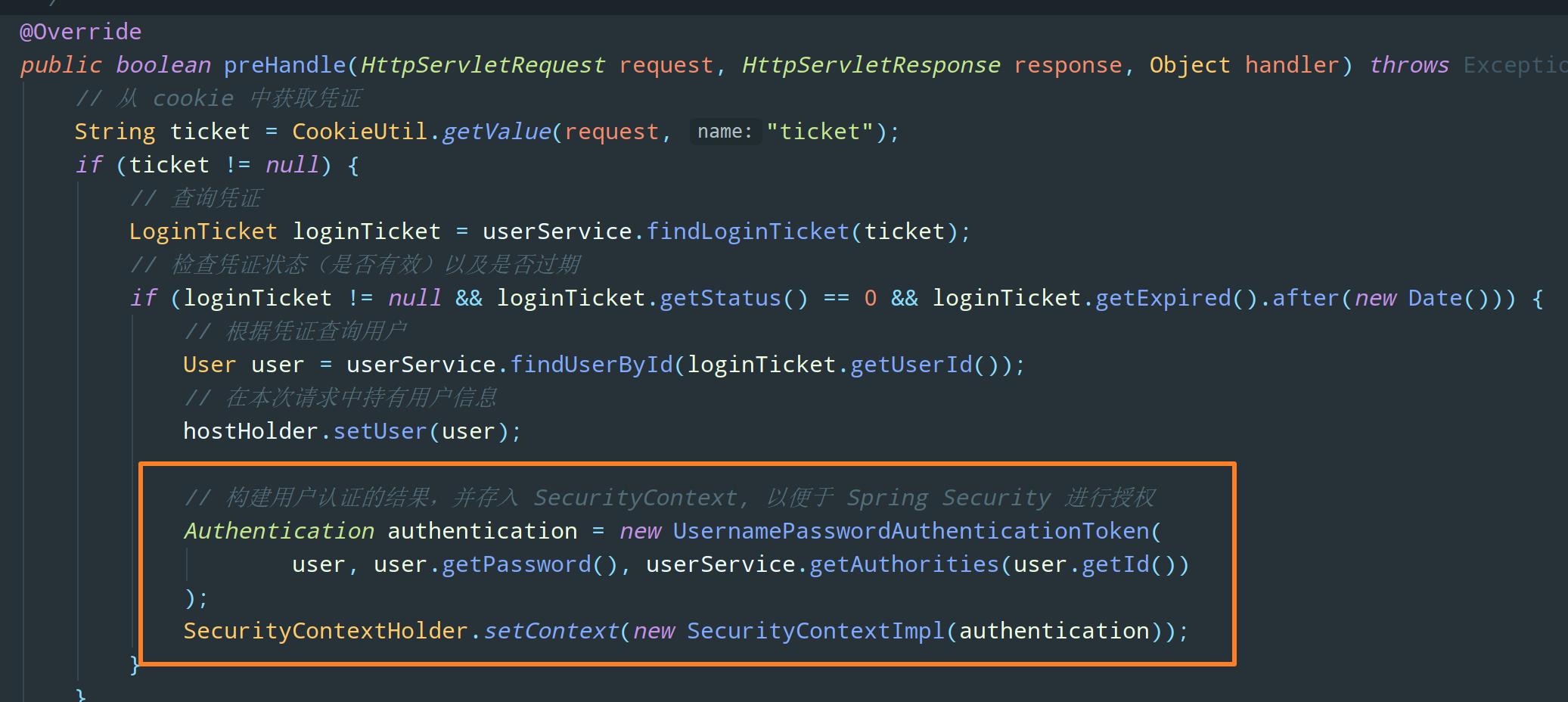

注意:登录凭证存在有效期,在所有的请求执行之前,都会检查凭证是否有效和是否过期,只要该用户的凭证有效并在有效期时间内,本次请求就会一直持有该用户信息(使用 ThreadLocal 持有用户信息)

|

||||

|

||||

- 勾选记住我,则延长登录凭证有效时间

|

||||

|

||||

- 用户登录成功,将用户信息短暂存入 Redis(1 小时)

|

||||

|

||||

- 用户登出,将凭证状态设为无效,并更新 Redis 中该用户的登录凭证信息
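
The ticket check and the ThreadLocal holder described in the list above could look roughly like this. It is a minimal sketch, not the project's actual code: the class names `LoginTicket` and `UserHolder`, their fields, and the decision to hold only the user id are assumptions made for illustration. The status convention (0 = valid, 1 = invalid) follows the `login_ticket` table.

```java
/** Hypothetical login ticket; status 0 = valid, 1 = invalid (as in the login_ticket table). */
final class LoginTicket {
    final int userId;
    final int status;
    final long expiredAtMillis;

    LoginTicket(int userId, int status, long expiredAtMillis) {
        this.userId = userId;
        this.status = status;
        this.expiredAtMillis = expiredAtMillis;
    }

    /** A ticket is usable only if it is marked valid and has not yet expired. */
    boolean isUsable(long nowMillis) {
        return status == 0 && expiredAtMillis > nowMillis;
    }
}

/** Hypothetical per-thread holder so that code serving the same request can read the current user. */
final class UserHolder {
    private static final ThreadLocal<Integer> CURRENT_USER_ID = new ThreadLocal<>();

    static void set(int userId) {
        CURRENT_USER_ID.set(userId);
    }

    /** Returns the bound user id, or null when no user is bound to this thread. */
    static Integer get() {
        return CURRENT_USER_ID.get();
    }

    /** Should run when the request finishes, because server threads are reused. */
    static void clear() {
        CURRENT_USER_ID.remove();
    }

    /** Runs before a request: binds the user only when the ticket passes the check. */
    static boolean bindIfUsable(LoginTicket ticket, long nowMillis) {
        if (ticket != null && ticket.isUsable(nowMillis)) {
            set(ticket.userId);
            return true;
        }
        return false;
    }
}
```

In a Spring MVC application, calls like `bindIfUsable` and `clear` would typically sit in an interceptor's pre-handle and after-completion hooks, so the user is bound for exactly one request.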

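The diagram below shows the logic of the login module, which does not use the authentication flow provided by Spring Security (I think this is the most complex module, and the diagram still leaves out many details)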

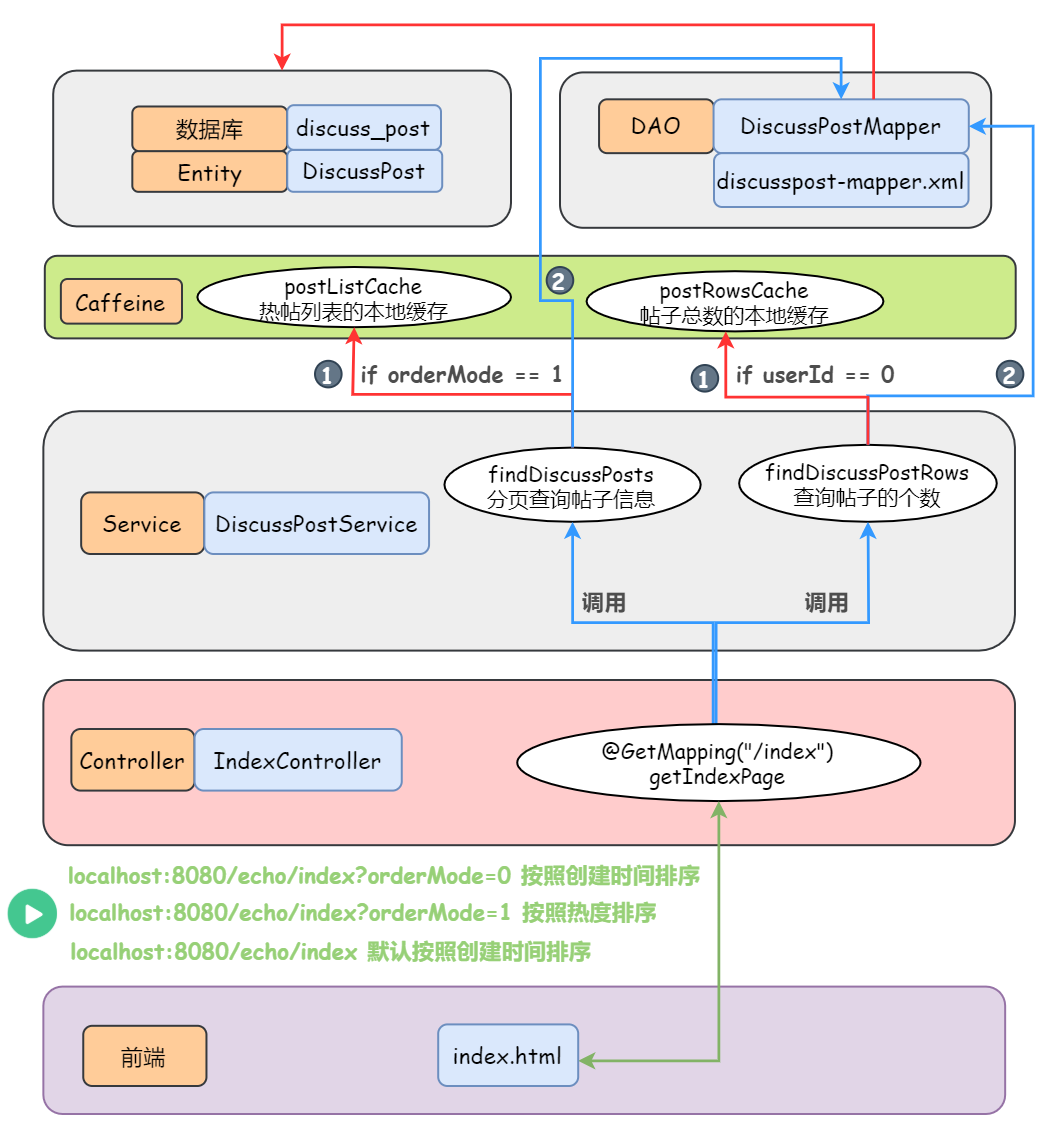

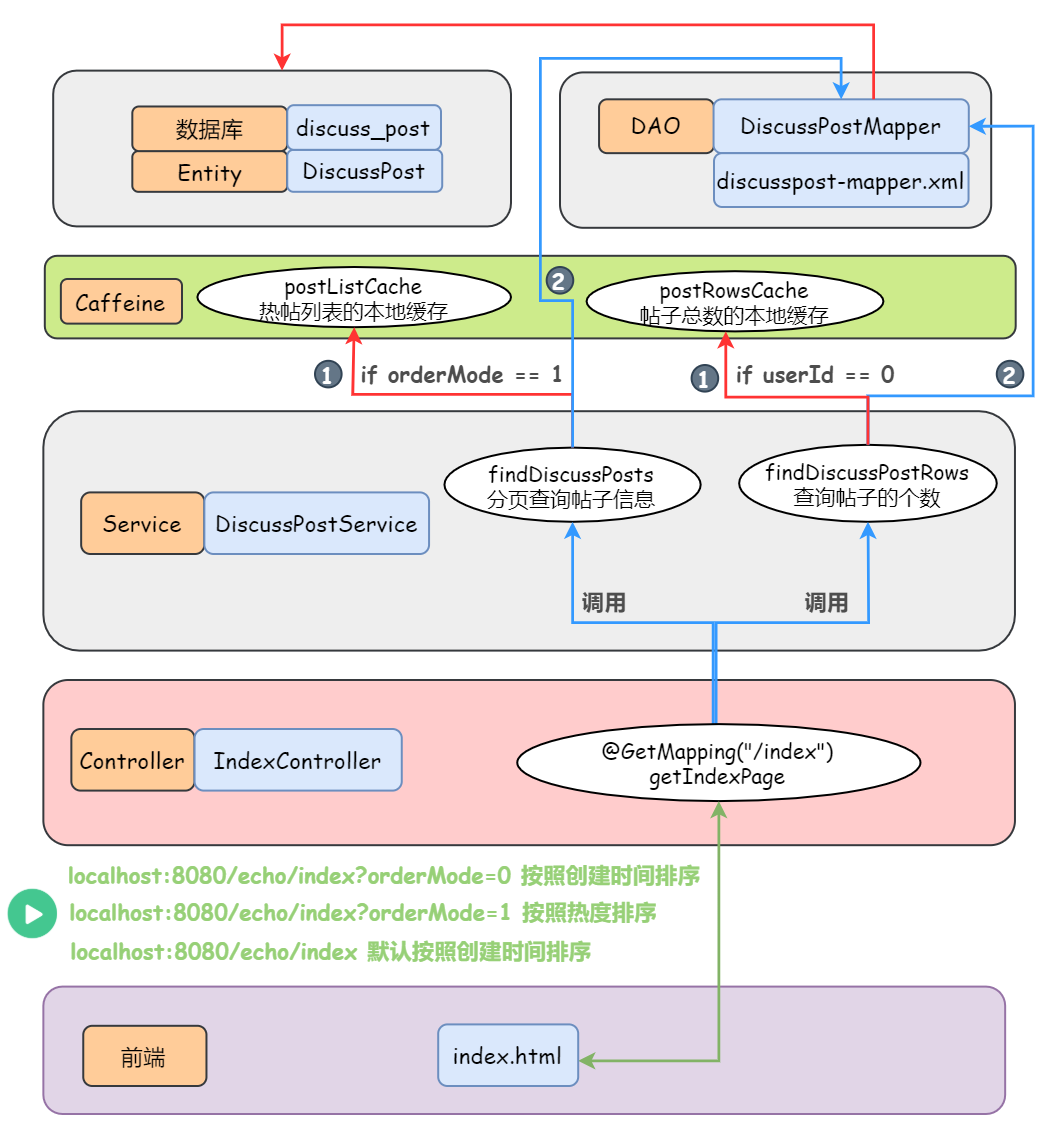

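### Paginated list of all posts

- Supports ordering by "post time"
- Supports ordering by "hotness" (Spring Quartz)
- The hot-post list and the total post count are stored in the local cache Caffeine. A distributed scheduled task (Spring Quartz) periodically recalculates each post's hotness score (see below). The cached data does not have to be refreshed by hand: given a loading method, Caffeine reloads an entry through it when the entry is missing or has expired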

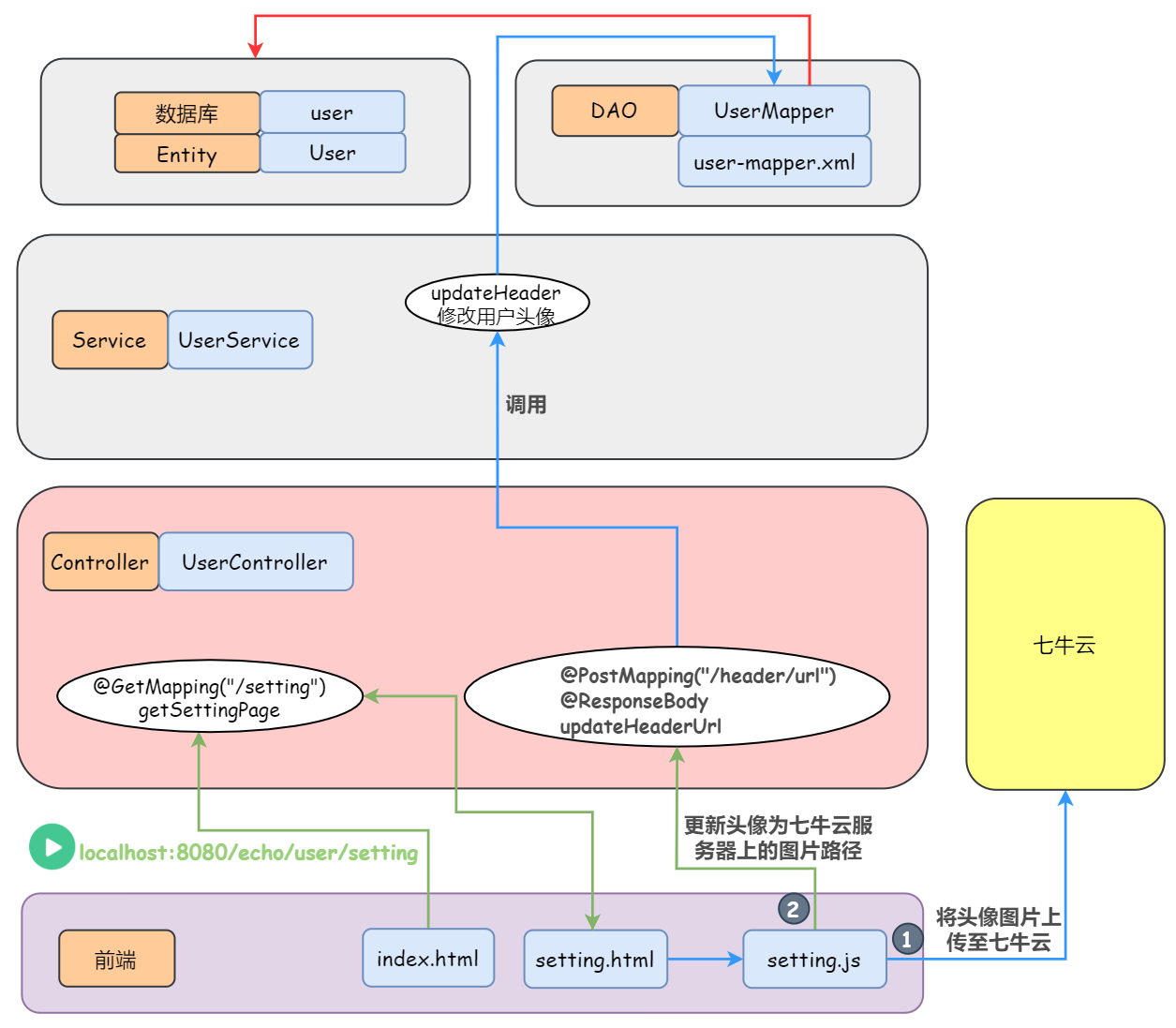

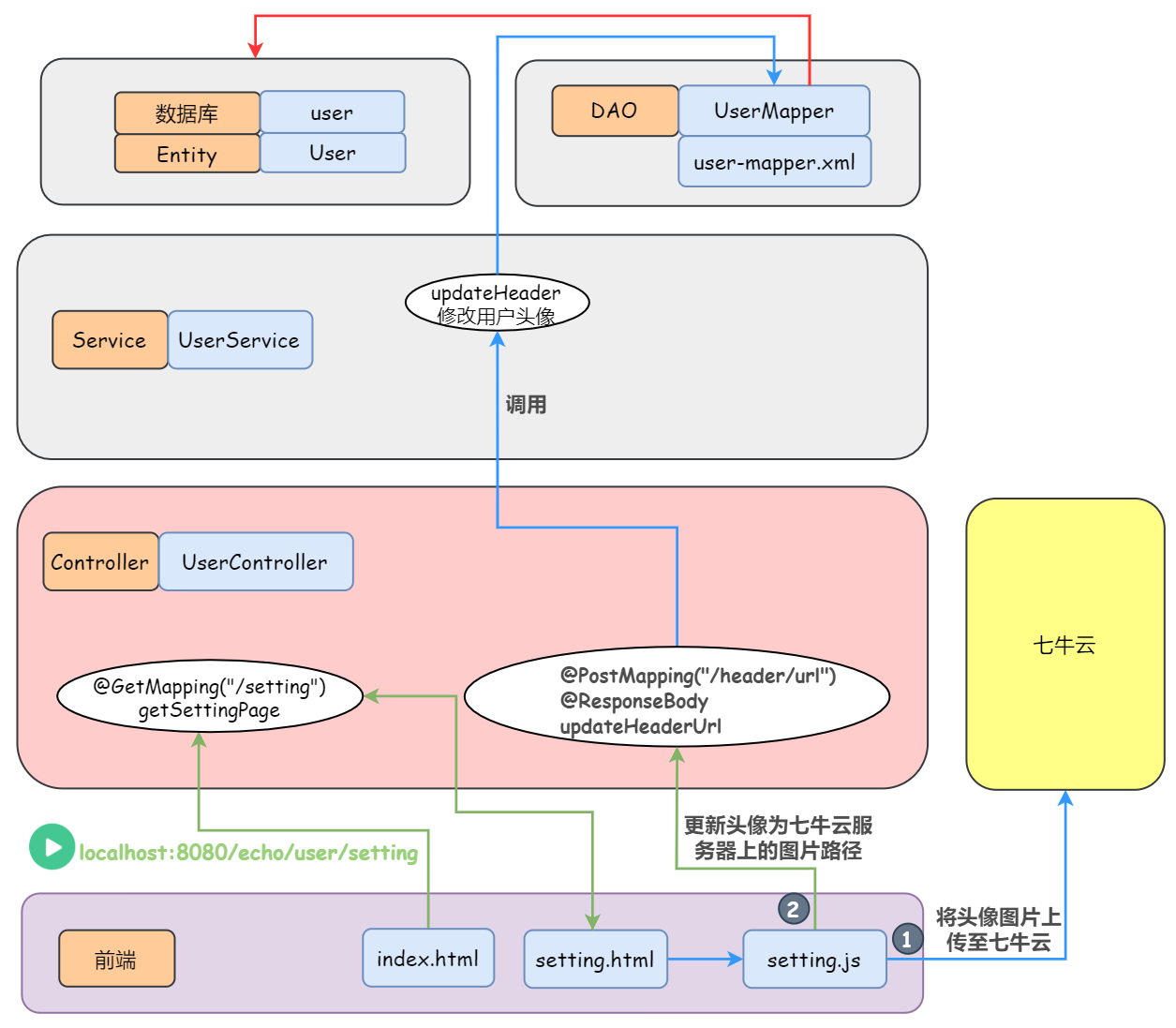

### Account settings

- Change avatar (asynchronous request)
  - Upload the image file chosen by the user to the Qiniu Cloud server
- Change password

Only the avatar change is drawn here:

### Publishing a post (asynchronous request)

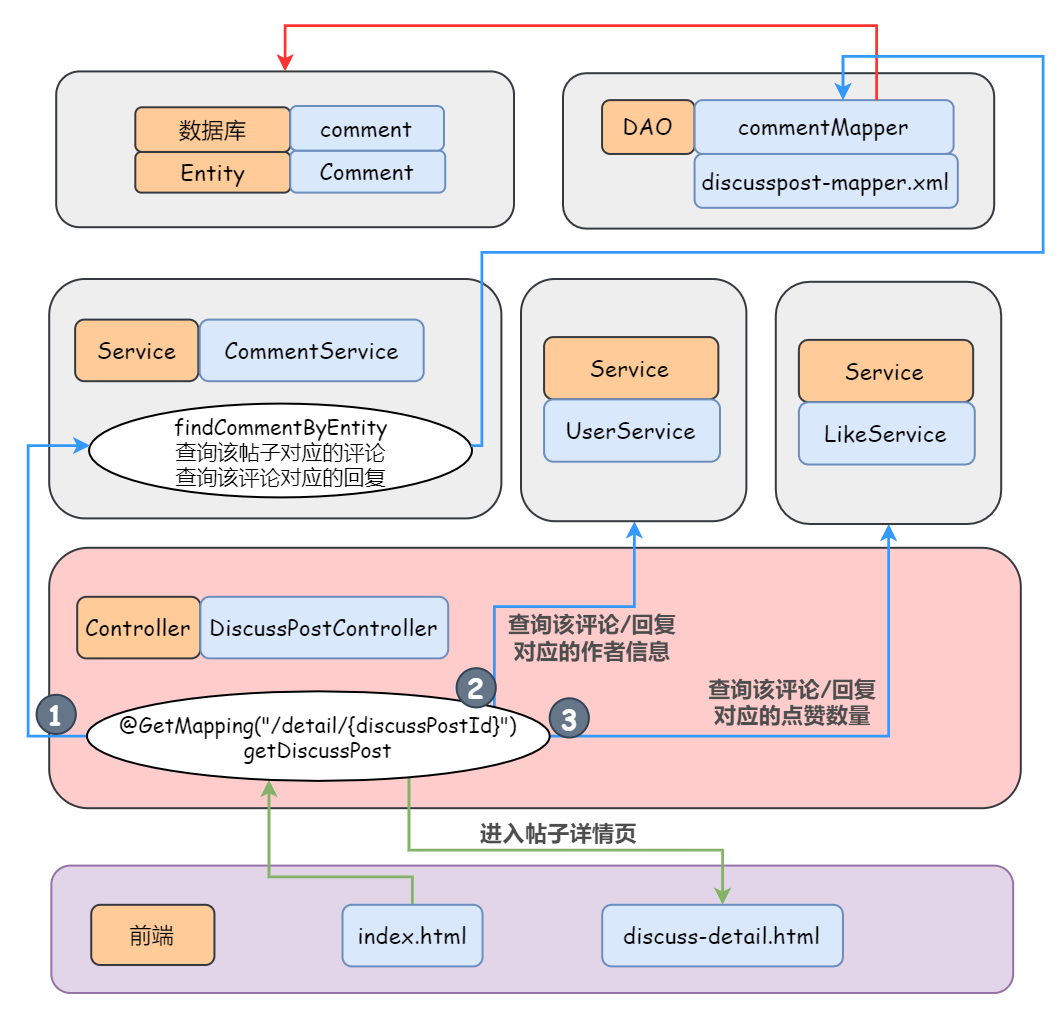

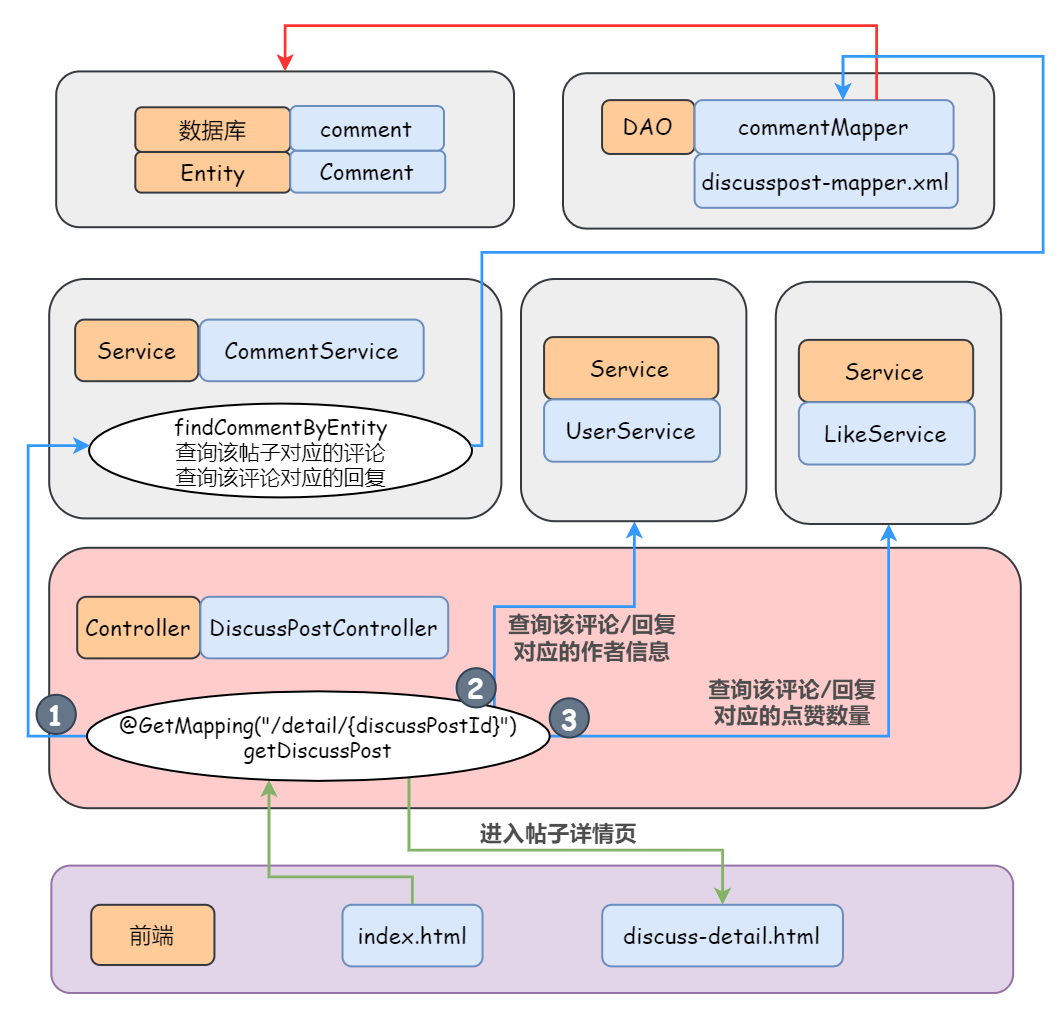

### 显示评论及相关信息

|

||||

|

||||

> 评论部分前端的名称显示有些缺陷,有兴趣的小伙伴欢迎提 PR 解决~

|

||||

|

||||

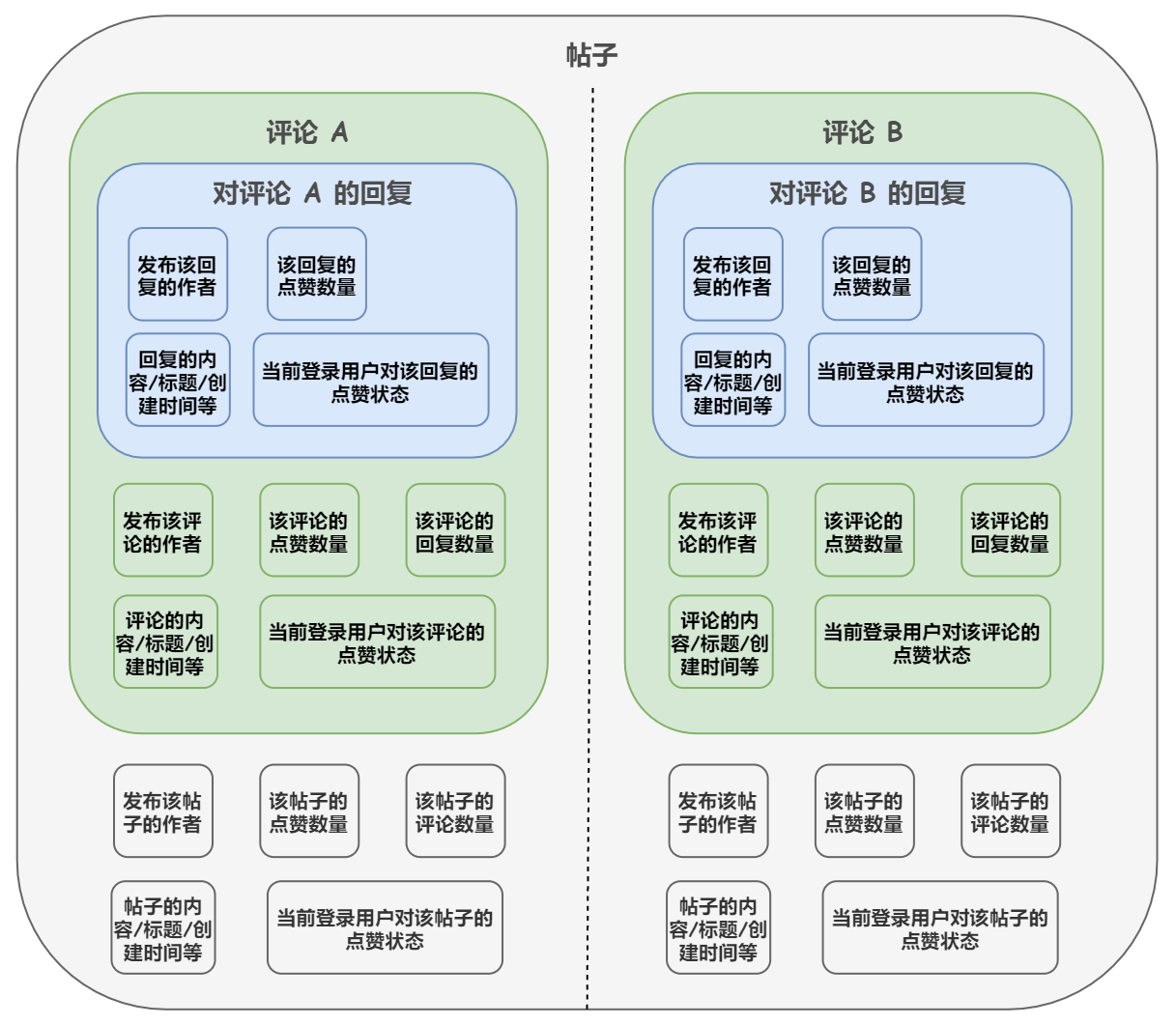

关于评论模块需要注意的就是评论表的设计,把握其中字段的含义,才能透彻了解这个功能的逻辑。

|

||||

|

||||

评论 Comment 的目标类型(帖子,评论) entityType 和 entityId 以及对哪个用户进行评论/回复 targetId 是由前端传递给 DiscussPostController 的

|

||||

|

||||

|

||||

|

||||

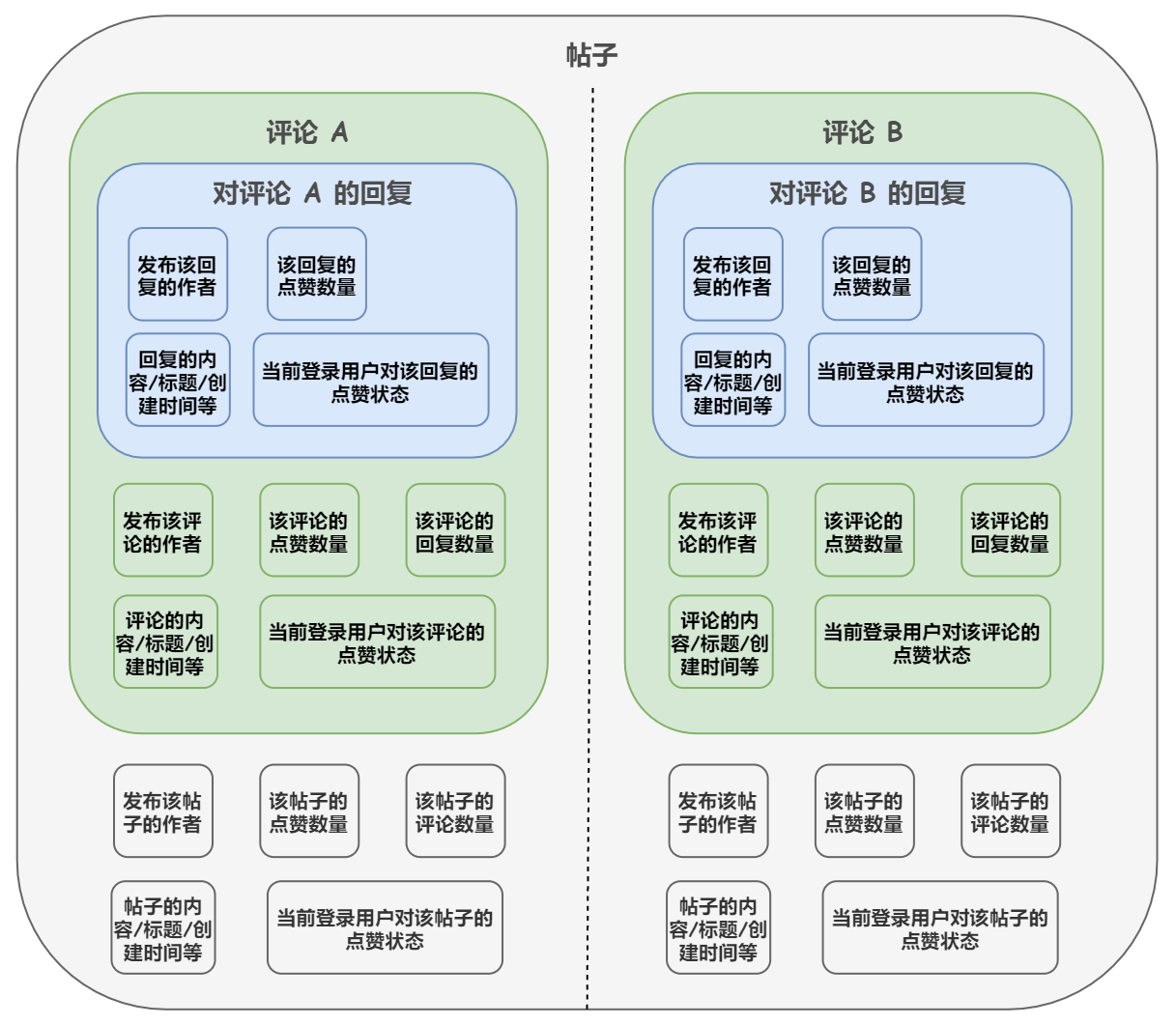

一个帖子的详情页需要封装的信息大概如下:

|

||||

|

||||

|

||||

|

||||

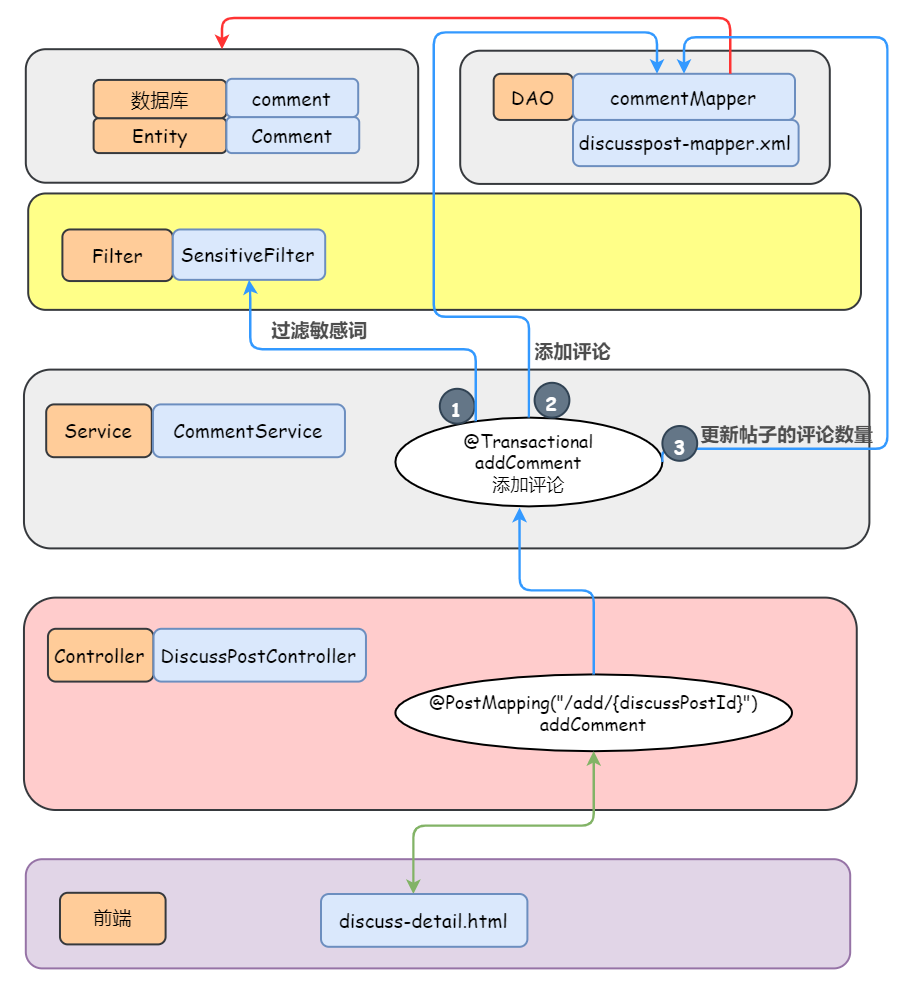

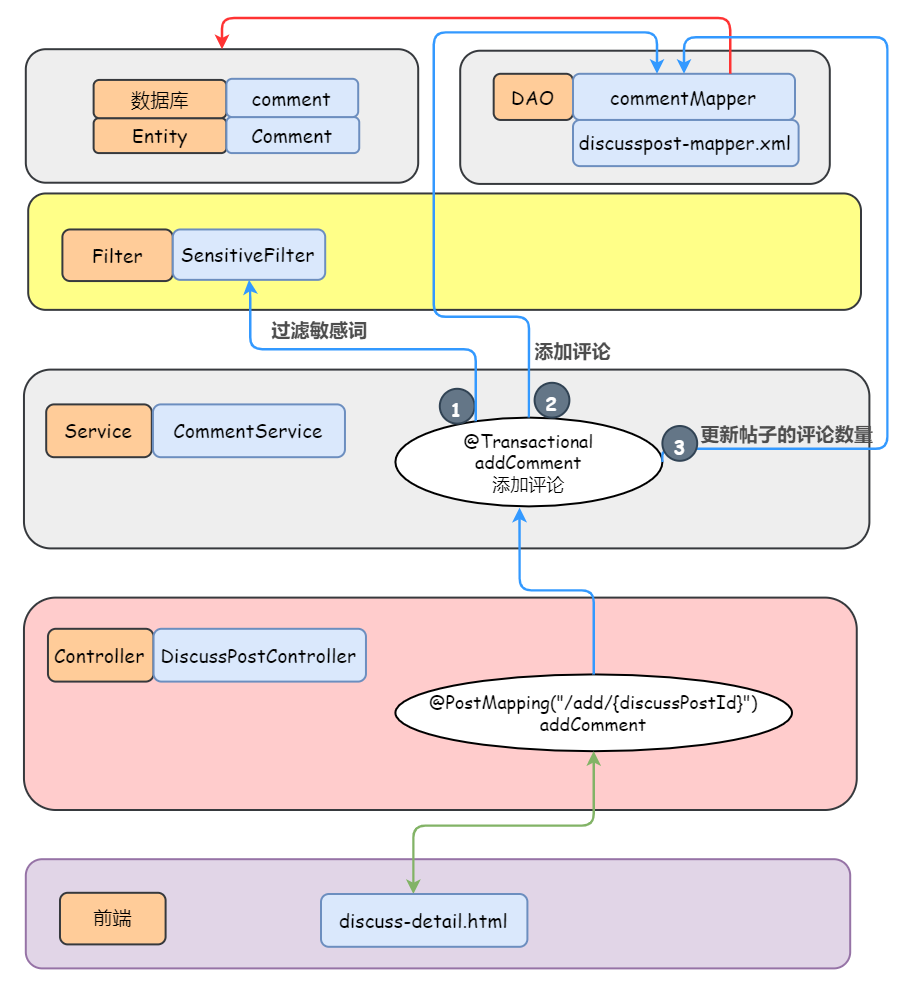

### Adding a comment (transaction management)

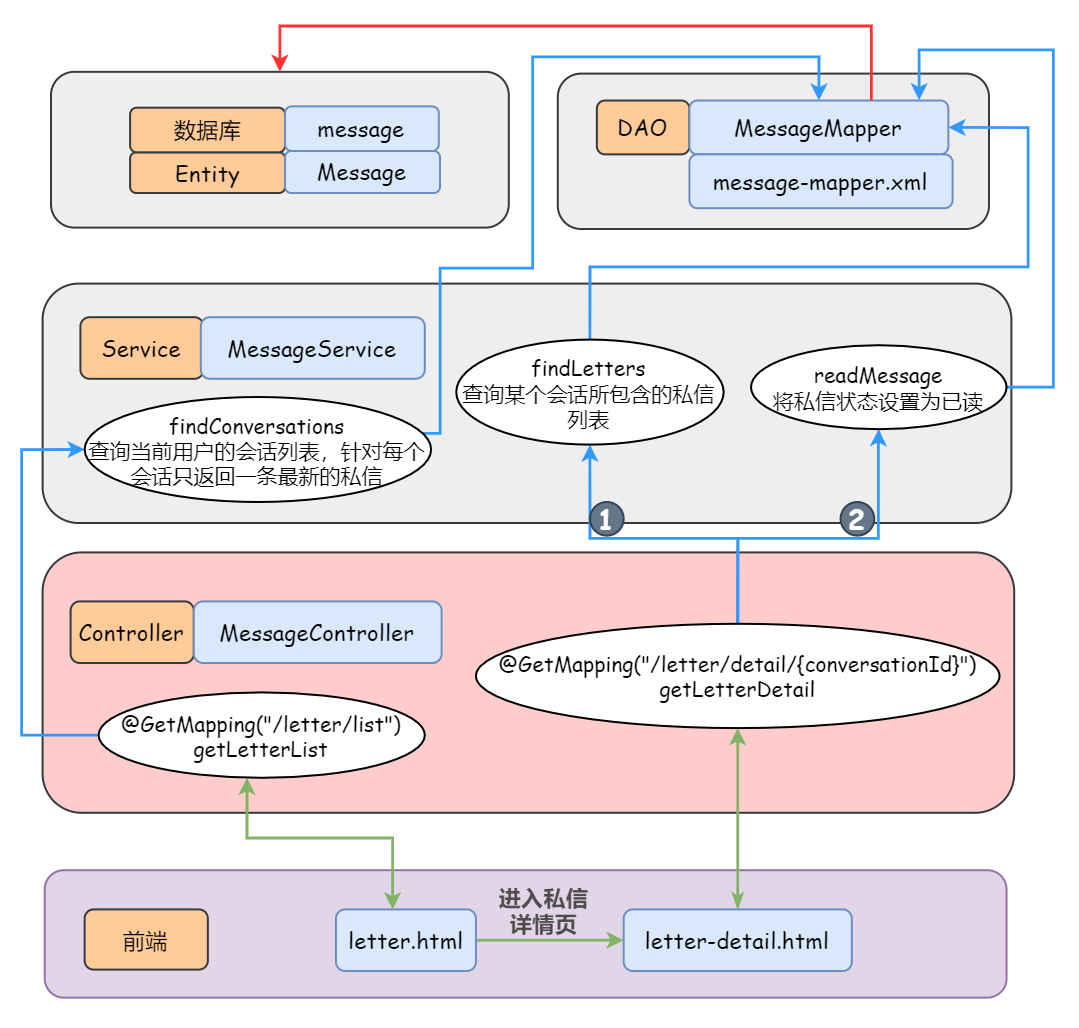

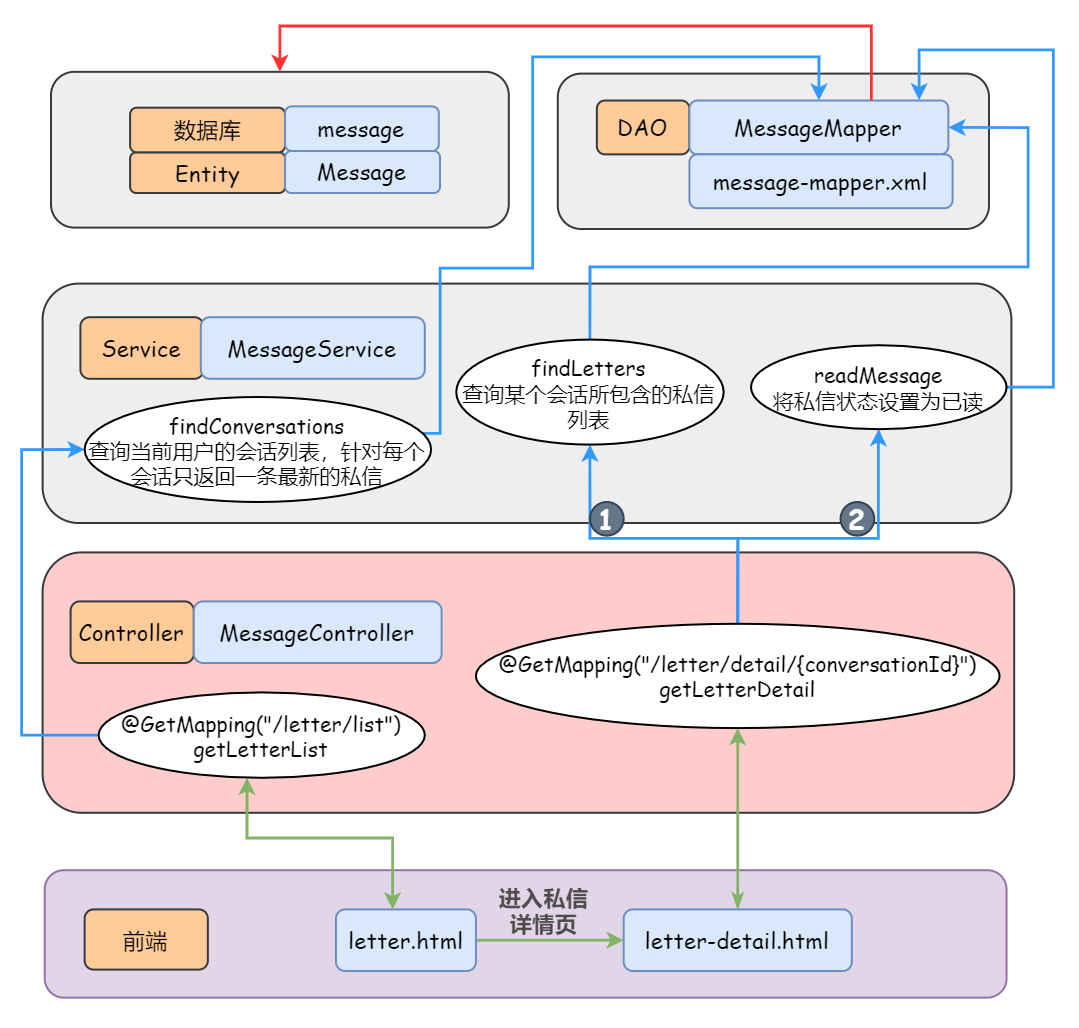

### Private message list and detail pages

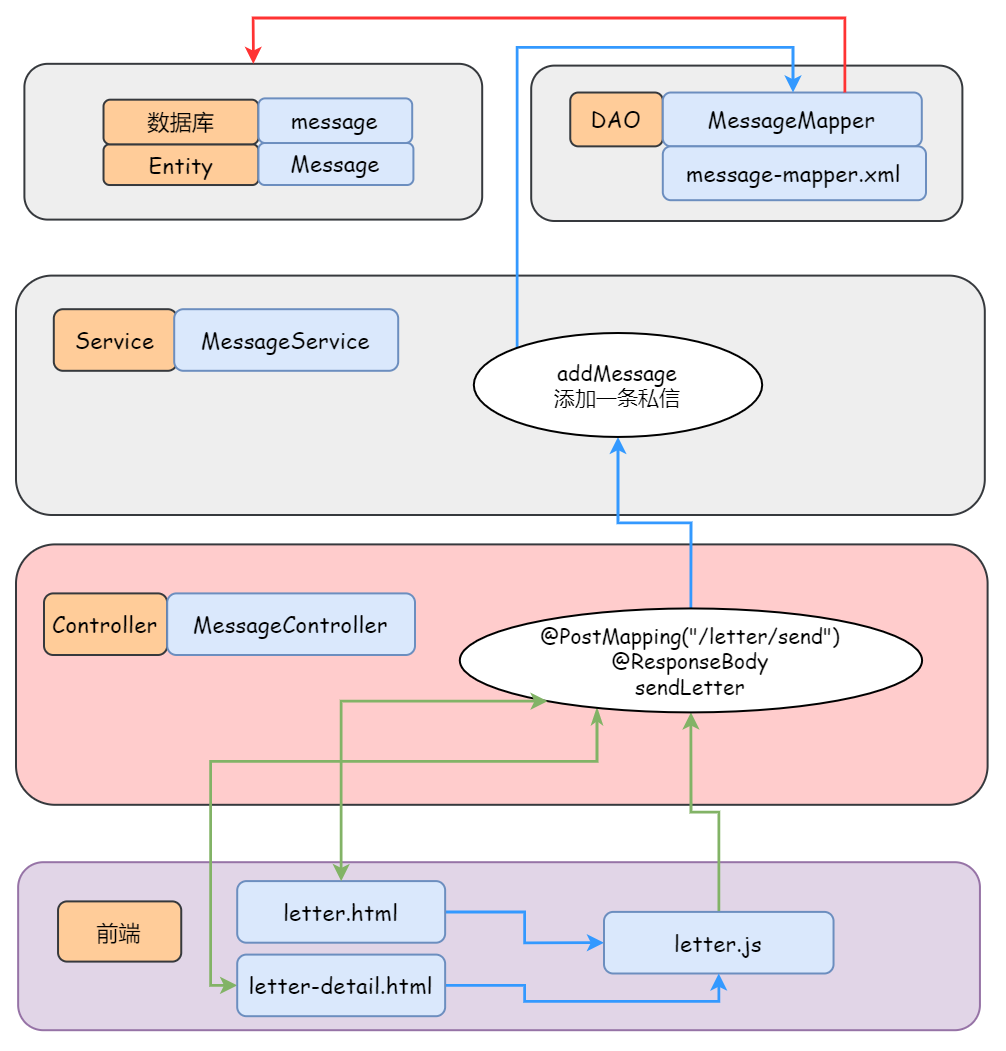

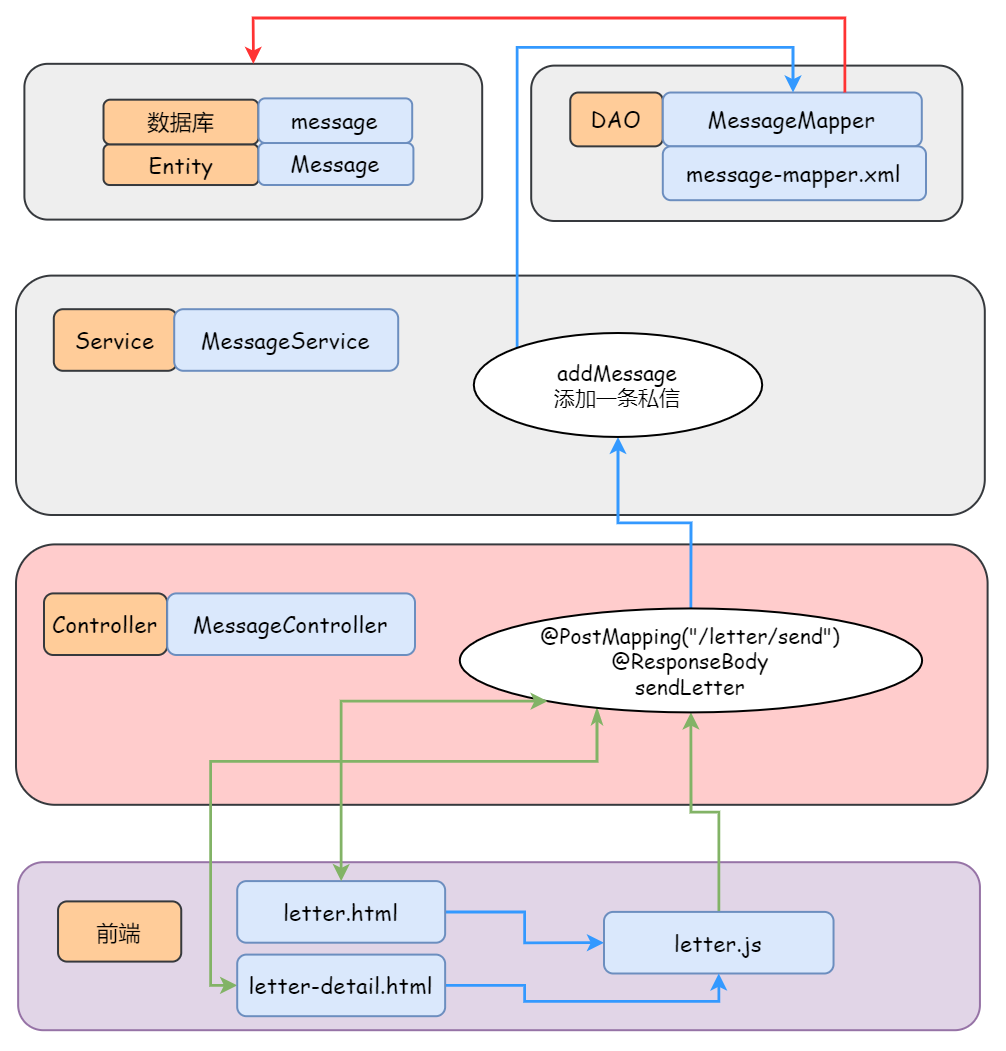

### Sending a private message (asynchronous request)

### Liking (asynchronous request)

Like data is stored in a Redis set. The key is named `like:entity:entityType:entityId` and the members are the ids of the users who liked the entity. For example, key = `like:entity:2:246` with value = `11` means that user 11 liked an entity of type 2 (a comment) whose id is 246.

The number of likes a user has received is stored in Redis under the key `like:user:userId`; the value is that user's like count.
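
The key scheme and the like/unlike toggle can be sketched as follows. This is only an illustration: plain in-memory maps stand in for Redis, and the class name `LikeSketch`, its method names, and the `entityOwnerId` parameter are assumptions rather than the project's real API.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** In-memory stand-in for the Redis structures described above (illustrative only). */
final class LikeSketch {
    // Plays the role of the Redis sets: key -> ids of users who liked the entity
    private final Map<String, Set<Integer>> sets = new HashMap<>();
    // Plays the role of the per-user counters: key -> likes received
    private final Map<String, Long> counters = new HashMap<>();

    static String entityLikeKey(int entityType, int entityId) {
        return "like:entity:" + entityType + ":" + entityId;
    }

    static String userLikeKey(int userId) {
        return "like:user:" + userId;
    }

    /** First call likes, second call removes the like; returns true if liked afterwards. */
    boolean toggle(int userId, int entityType, int entityId, int entityOwnerId) {
        Set<Integer> likers =
                sets.computeIfAbsent(entityLikeKey(entityType, entityId), k -> new HashSet<>());
        String ownerKey = userLikeKey(entityOwnerId);
        if (likers.remove(userId)) {
            // Already liked: undo the like and decrement the owner's counter
            counters.merge(ownerKey, -1L, Long::sum);
            return false;
        }
        likers.add(userId);
        counters.merge(ownerKey, 1L, Long::sum);
        return true;
    }

    /** Number of users who currently like the entity. */
    long likeCount(int entityType, int entityId) {
        Set<Integer> likers = sets.get(entityLikeKey(entityType, entityId));
        return likers == null ? 0 : likers.size();
    }

    /** Number of likes the given user has received. */
    long receivedLikes(int userId) {
        return counters.getOrDefault(userLikeKey(userId), 0L);
    }
}
```

Against a real Redis server, the set update and the counter update would need to happen atomically (for example inside one transaction) so the two values cannot drift apart.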

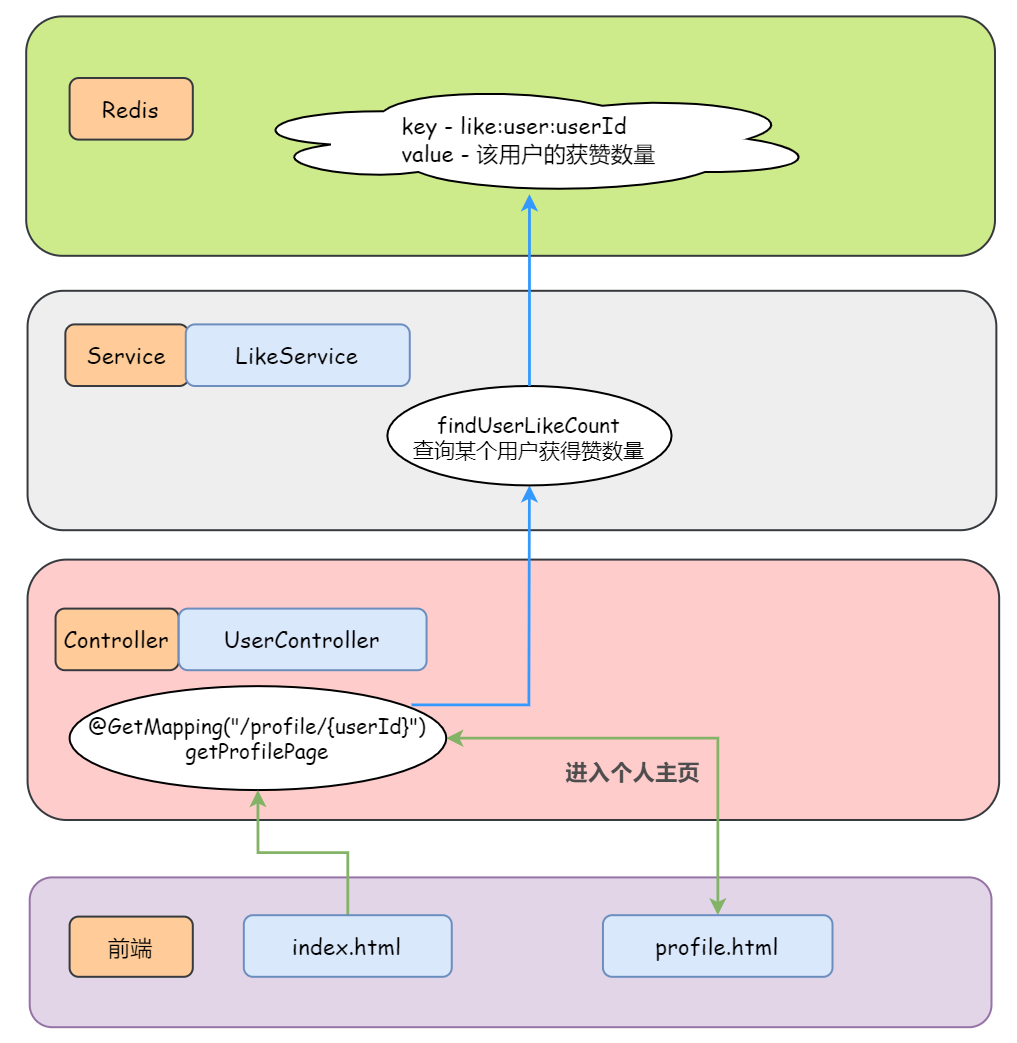

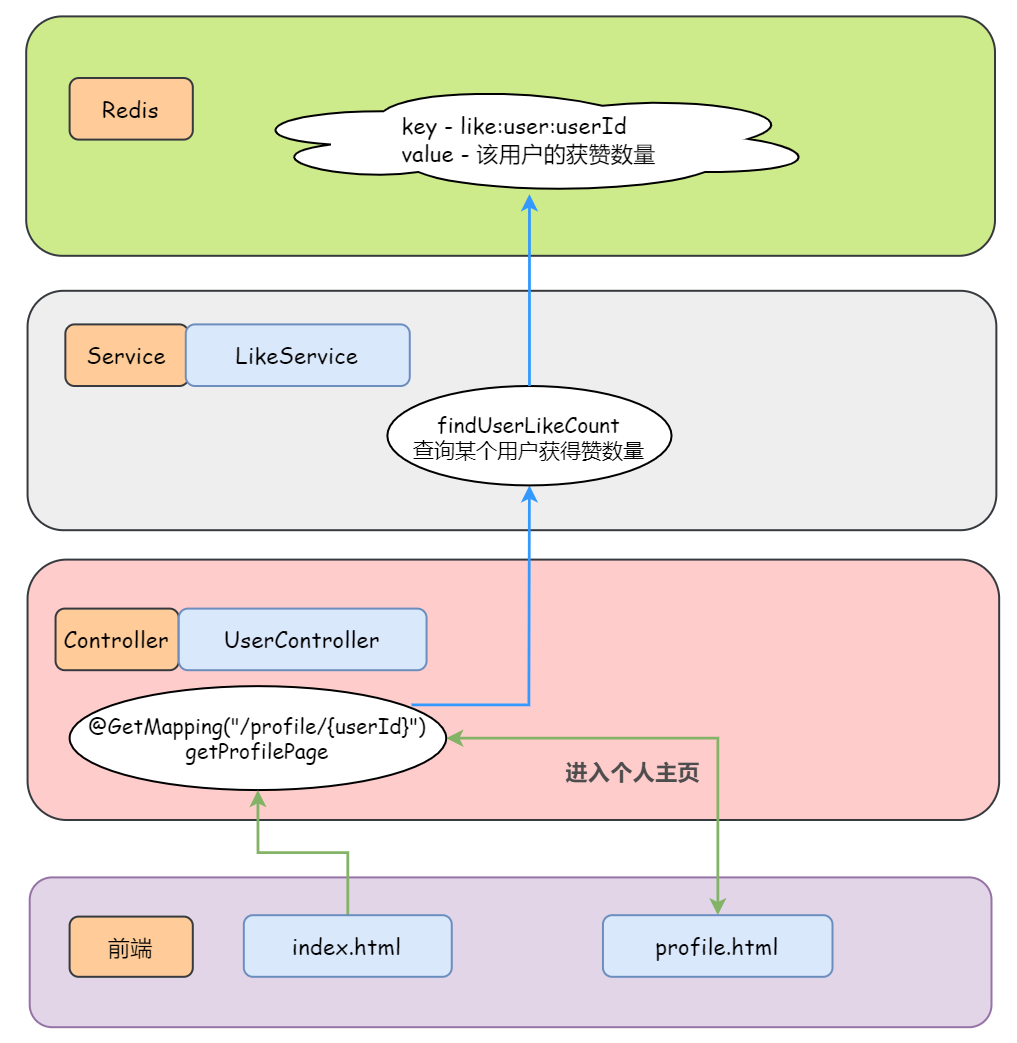

### Likes I have received

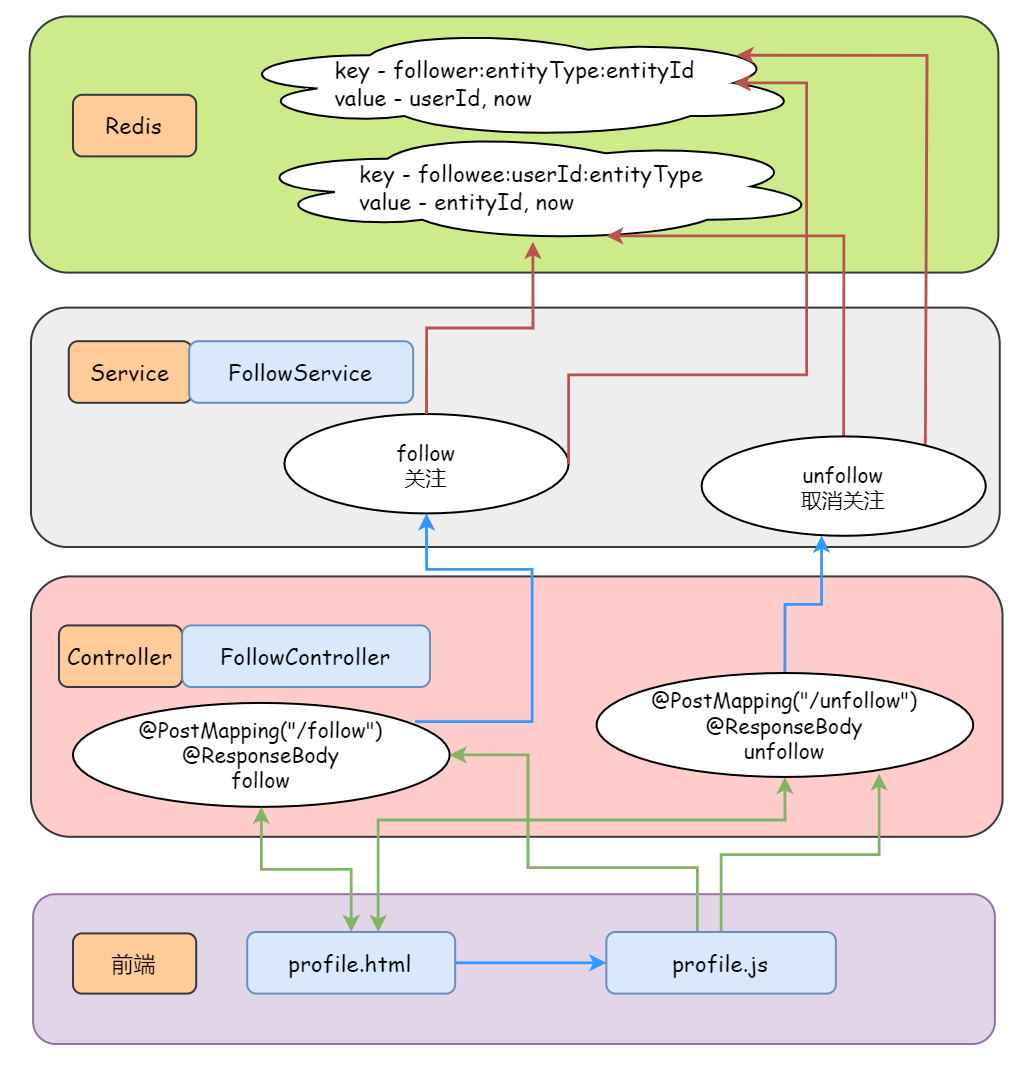

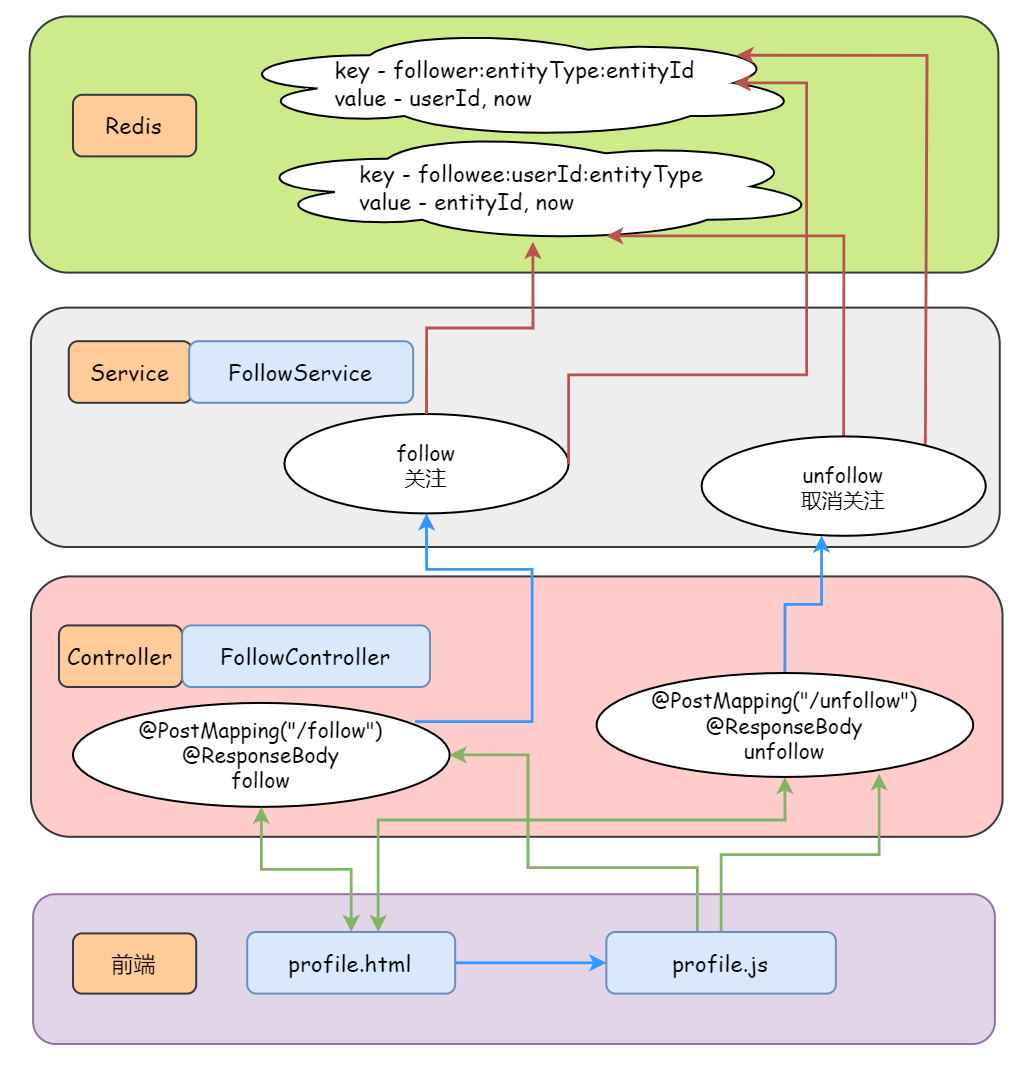

### Following (asynchronous request)

- If A follows B, then A is B's follower and B is A's followee (target)
- The followed target can be a user, a post, a question, and so on; the implementation abstracts these targets as entities (only following users is implemented so far)

What a user follows is stored in a Redis zset: the key is `followee:userId:entityType` and the value is `zset(entityId, now)`, ordered by follow time. For example, `followee:111:3` with the value `(20, 2020-02-03-xxxx)` means that user 111 follows an entity of type 3 (a person, i.e. a user) whose id is 20, and that the follow happened at 2020-02-03-xxxx.

Likewise, an entity's followers are stored in a Redis zset: the key is `follower:entityType:entityId` and the value is `zset(userId, now)`, ordered by follow time.
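
The two zsets can be mimicked in memory to show how a follow writes to both sides. This is a hedged sketch: the class `FollowSketch` and its methods are hypothetical, a map of member id to score stands in for each Redis zset, and listing the newest follow first is an assumption about the ordering direction.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** In-memory stand-in for the followee/follower zsets (illustrative only). */
final class FollowSketch {
    // key -> (member id -> follow time in millis), standing in for Redis zsets
    private final Map<String, Map<Integer, Long>> zsets = new HashMap<>();

    static String followeeKey(int userId, int entityType) {
        return "followee:" + userId + ":" + entityType;
    }

    static String followerKey(int entityType, int entityId) {
        return "follower:" + entityType + ":" + entityId;
    }

    /** A follow is recorded twice: once for the user, once for the followed entity. */
    void follow(int userId, int entityType, int entityId, long nowMillis) {
        zsets.computeIfAbsent(followeeKey(userId, entityType), k -> new HashMap<>())
                .put(entityId, nowMillis);
        zsets.computeIfAbsent(followerKey(entityType, entityId), k -> new HashMap<>())
                .put(userId, nowMillis);
    }

    /** Unfollowing removes the member from both zsets. */
    void unfollow(int userId, int entityType, int entityId) {
        Map<Integer, Long> followees = zsets.get(followeeKey(userId, entityType));
        if (followees != null) {
            followees.remove(entityId);
        }
        Map<Integer, Long> followers = zsets.get(followerKey(entityType, entityId));
        if (followers != null) {
            followers.remove(userId);
        }
    }

    /** Members of a zset, most recently followed first (ordering direction is an assumption). */
    List<Integer> membersNewestFirst(String key) {
        Map<Integer, Long> zset = zsets.getOrDefault(key, new HashMap<>());
        List<Integer> ids = new ArrayList<>(zset.keySet());
        ids.sort(Comparator.comparingLong((Integer id) -> zset.get(id)).reversed());
        return ids;
    }
}
```

Keeping both directions means "who do I follow" and "who follows me" are each a single range query, at the cost of writing every follow twice.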

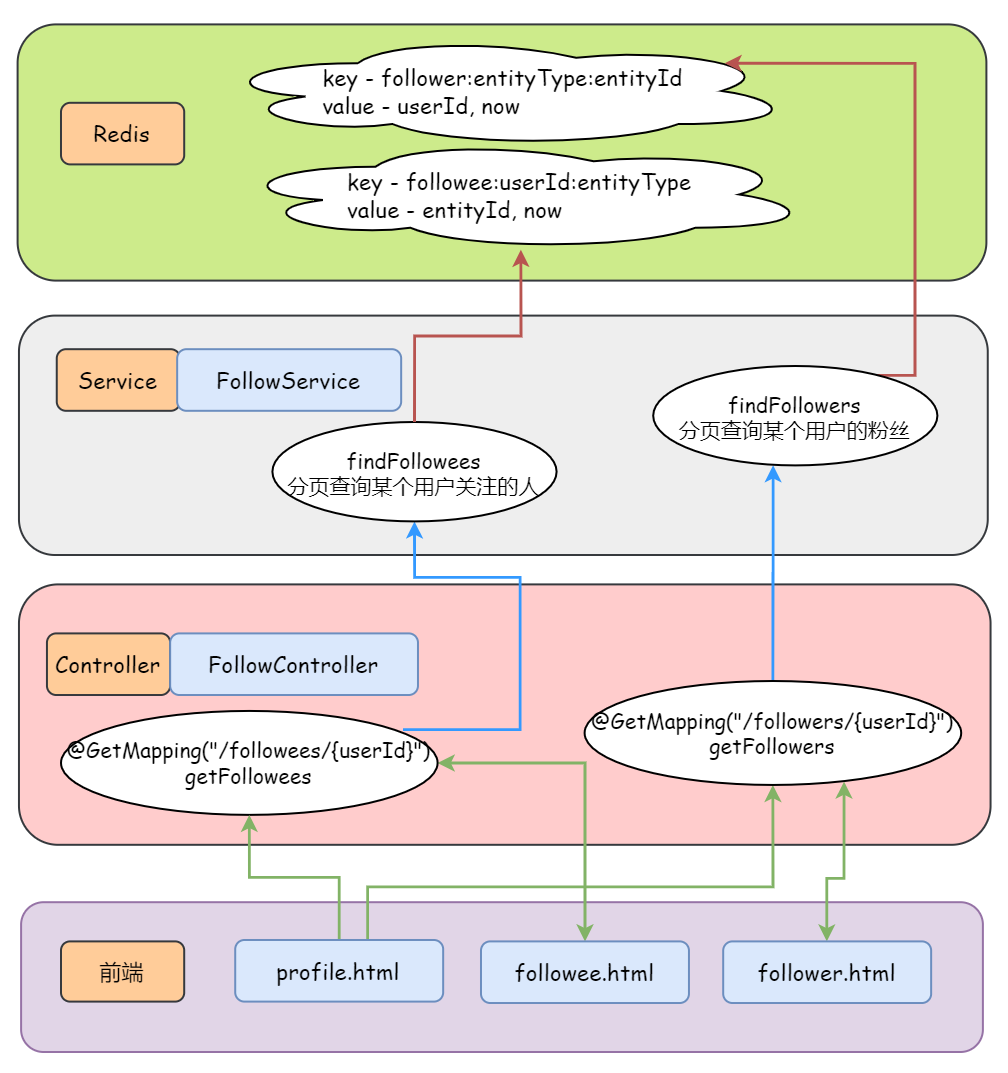

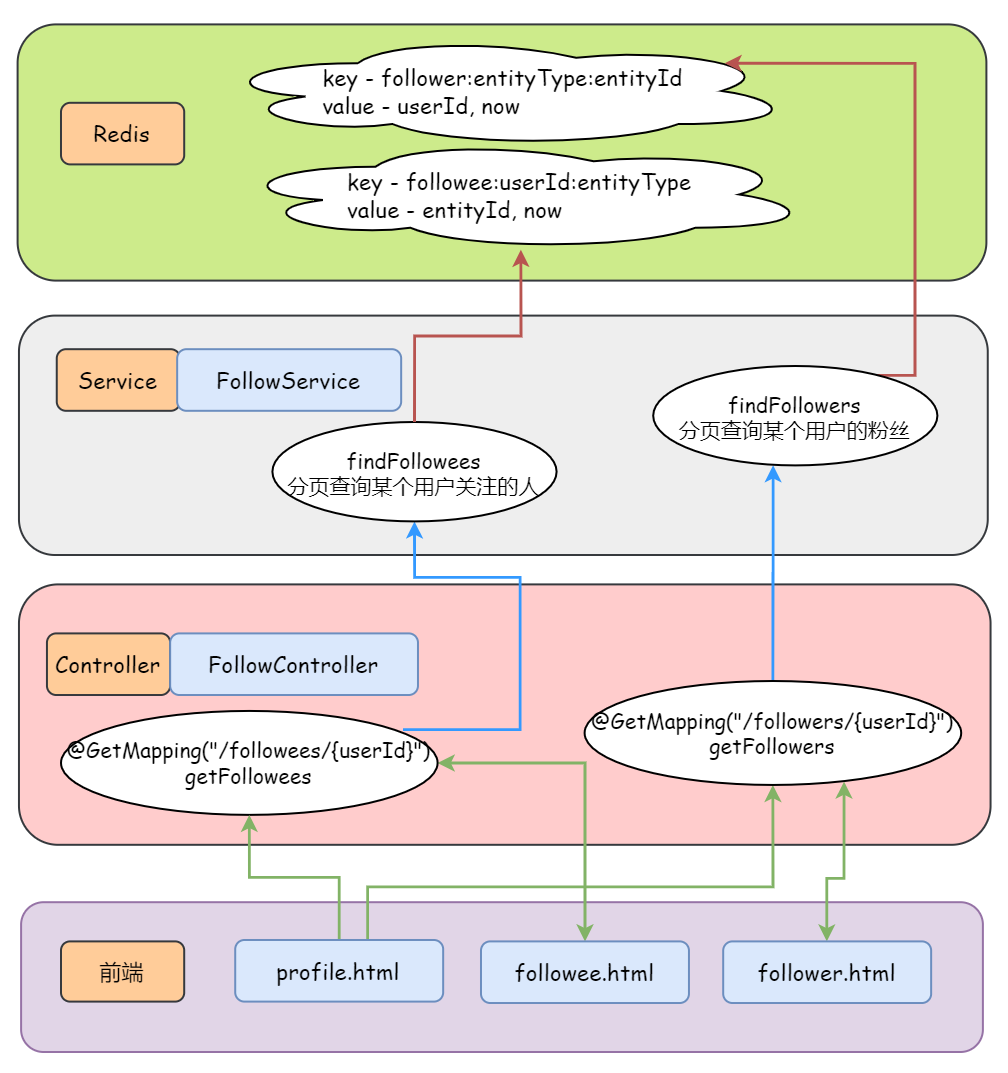

### Followee list

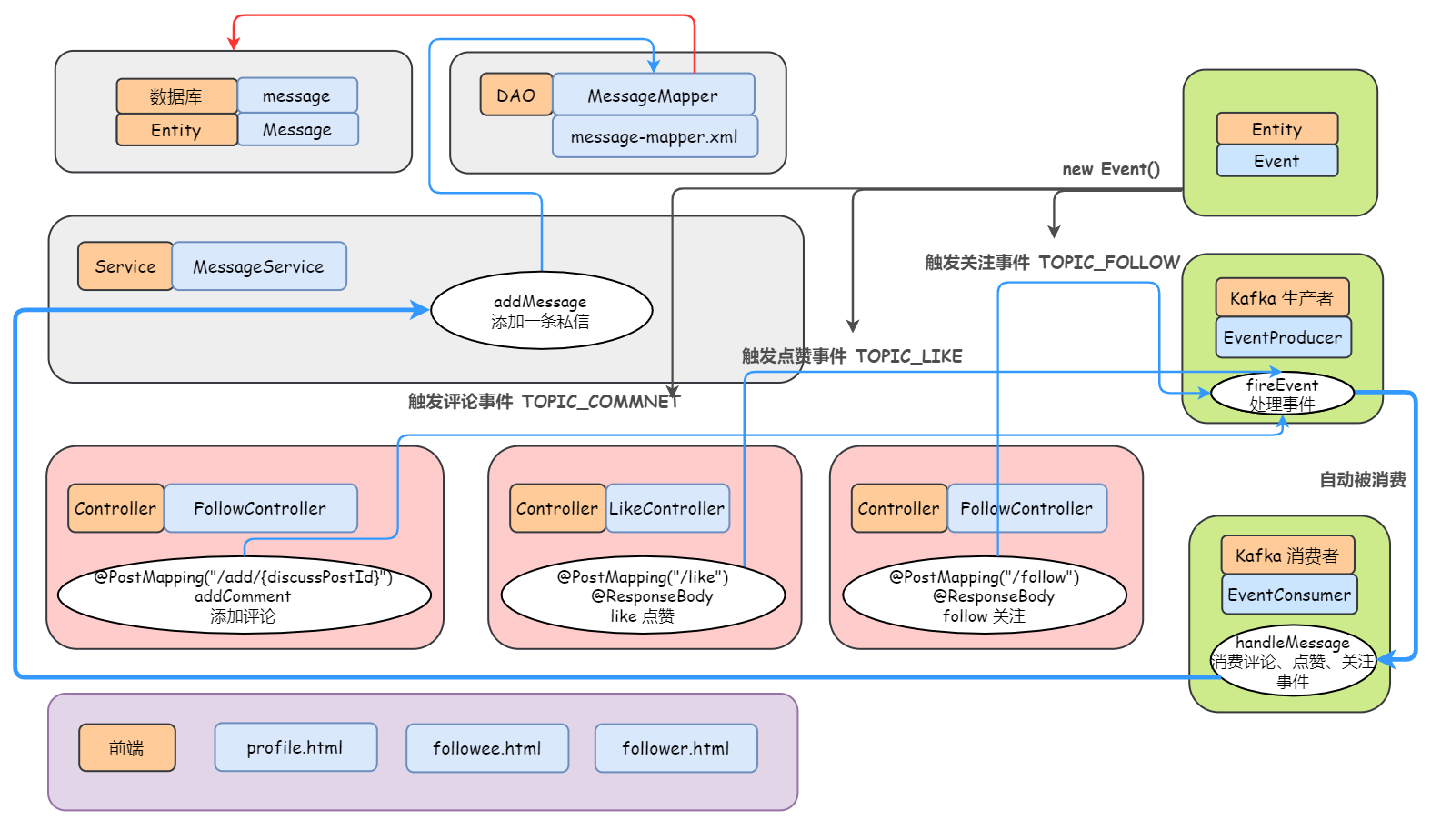

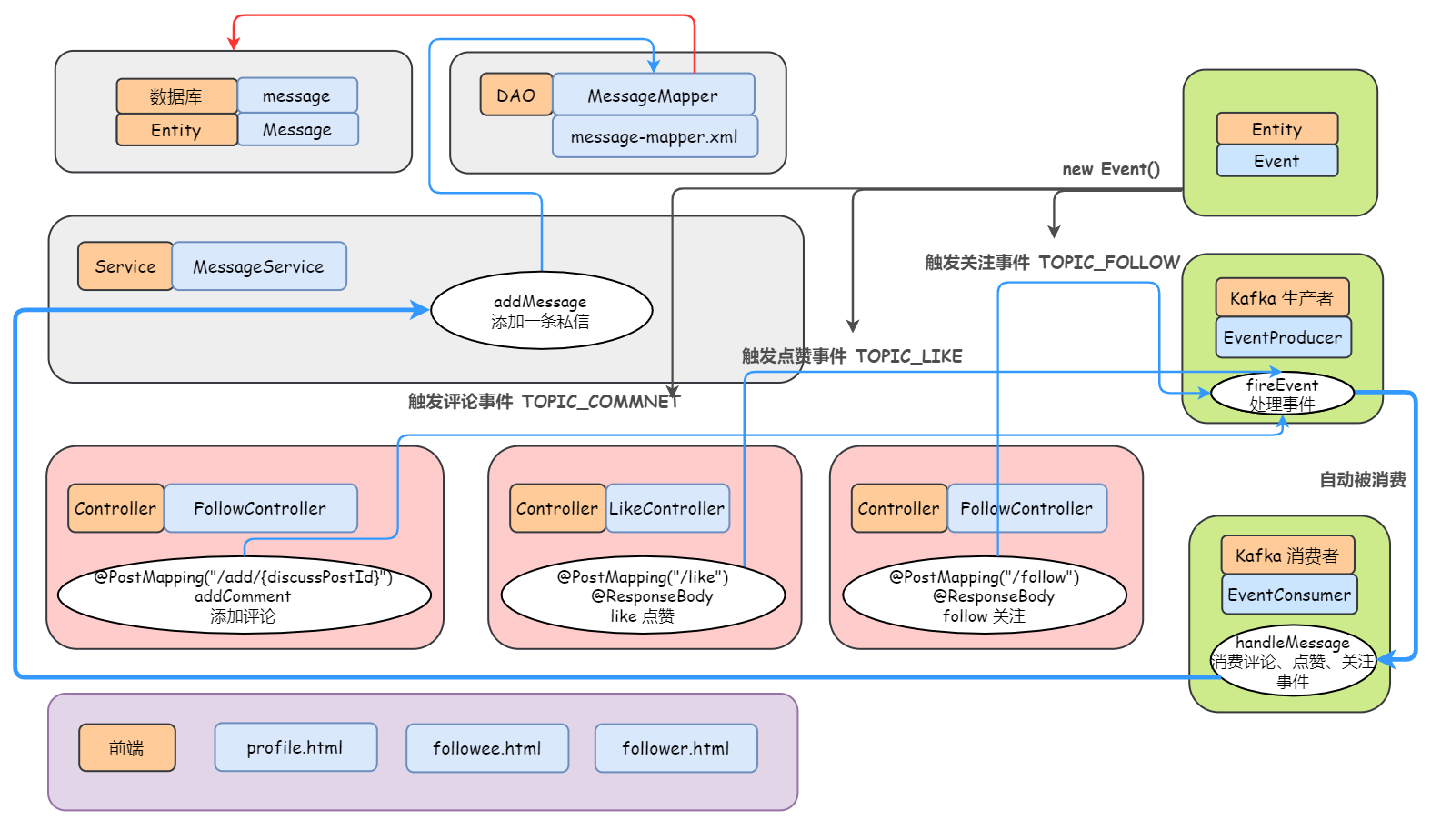

### Sending system notifications

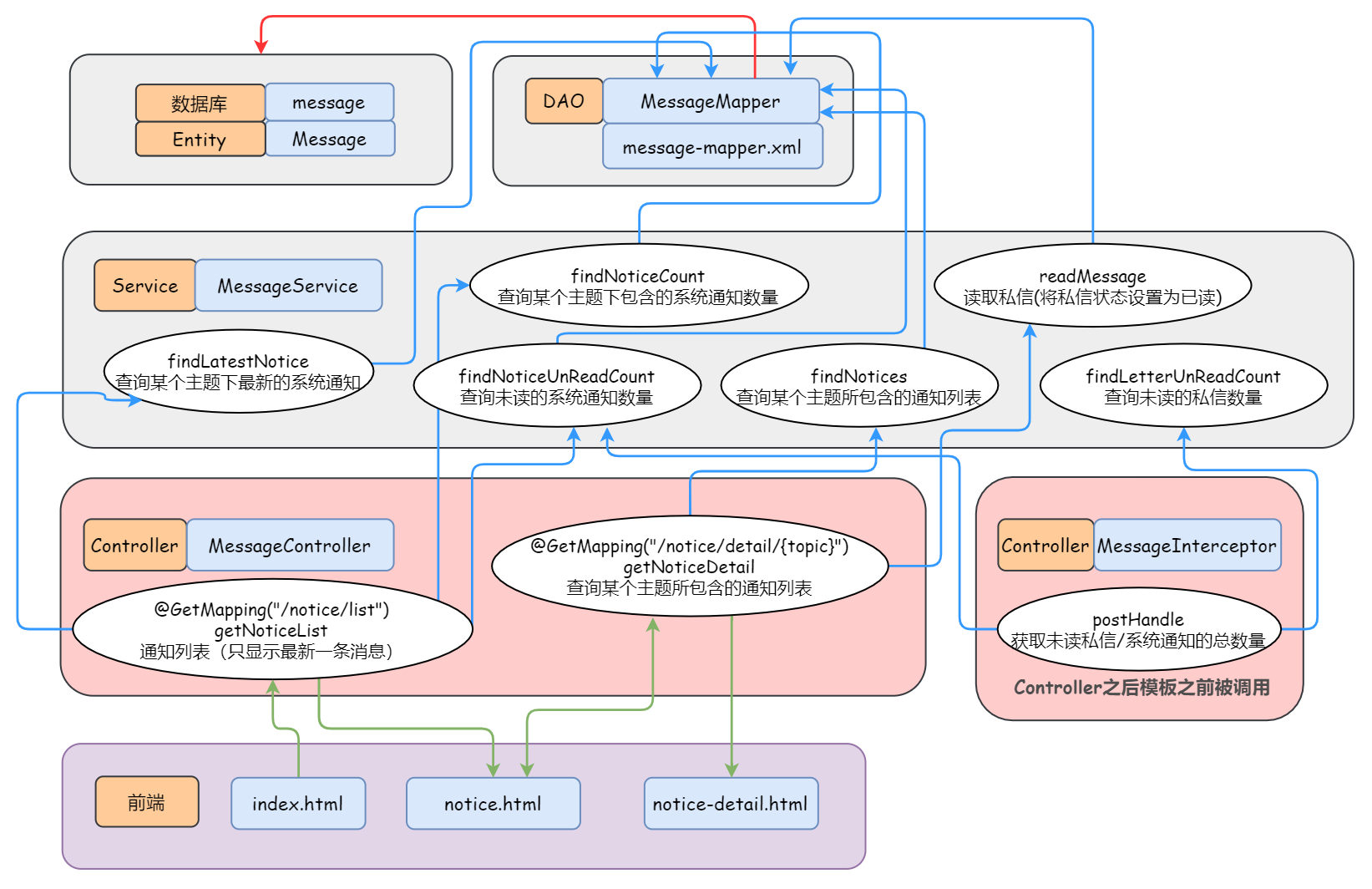

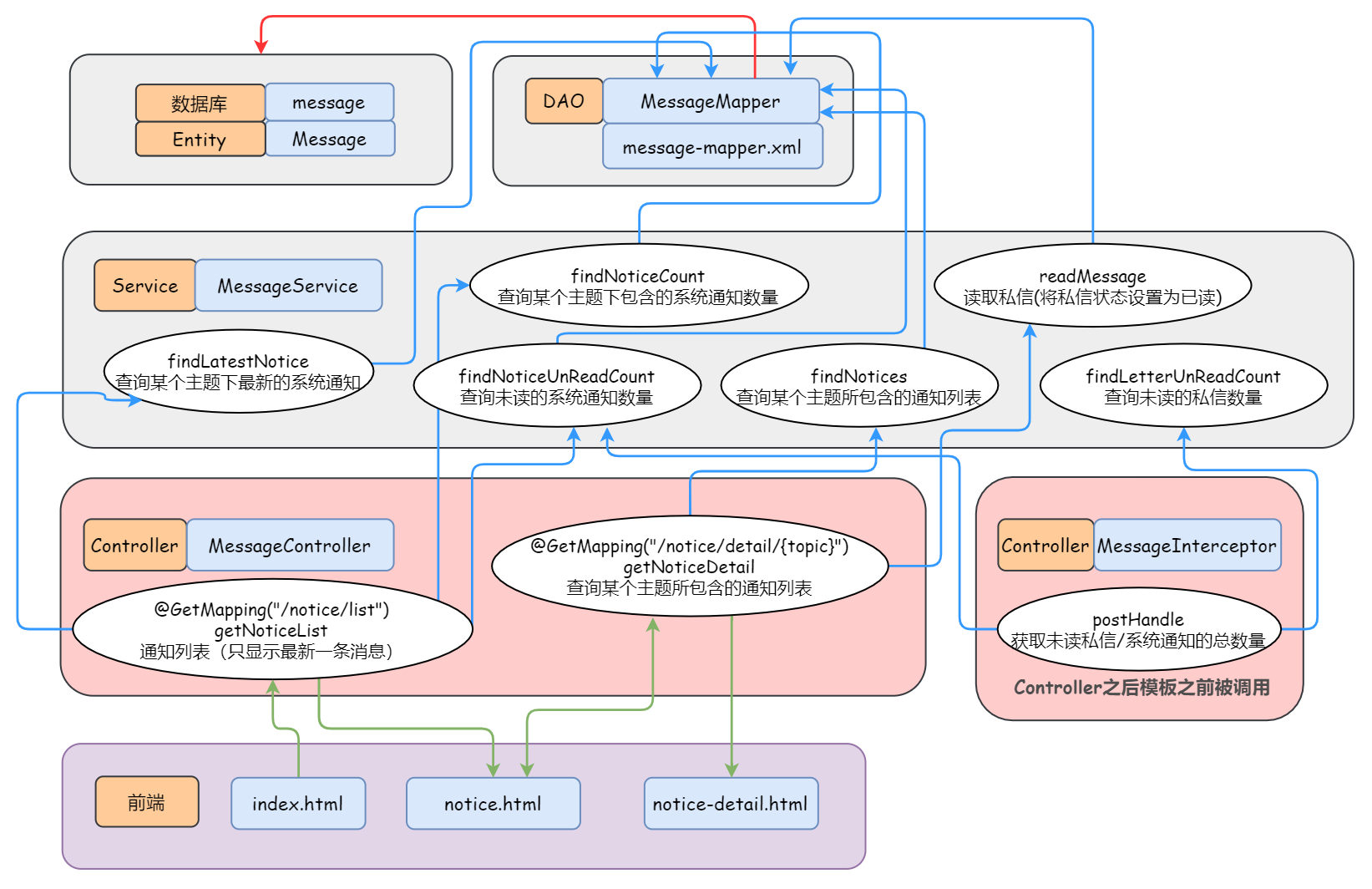

### Displaying system notifications

### Search

Similarly, pinning and featuring a post also trigger the post-published event; they are not drawn in the diagram.

### Pin, feature, delete (asynchronous request)

### Site statistics

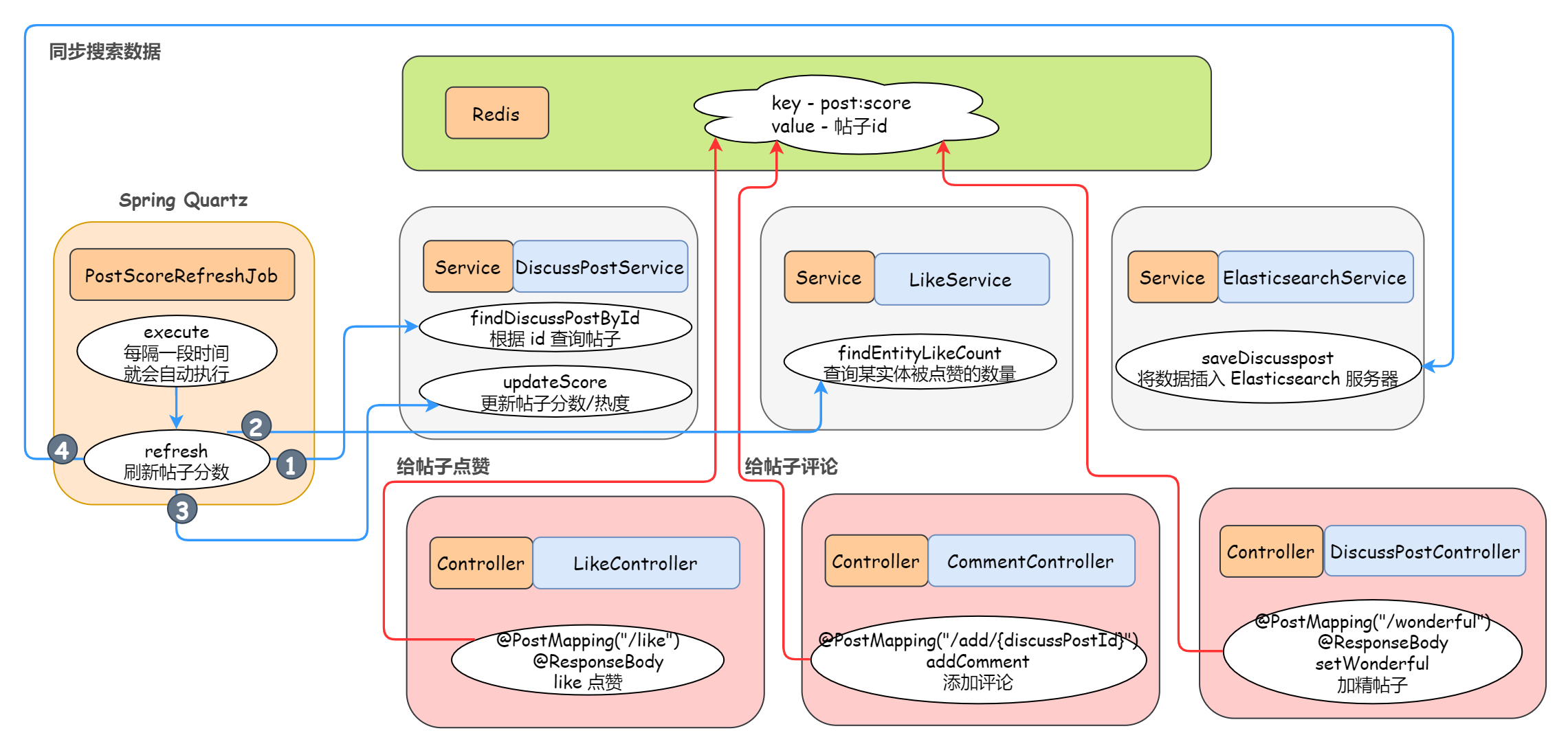

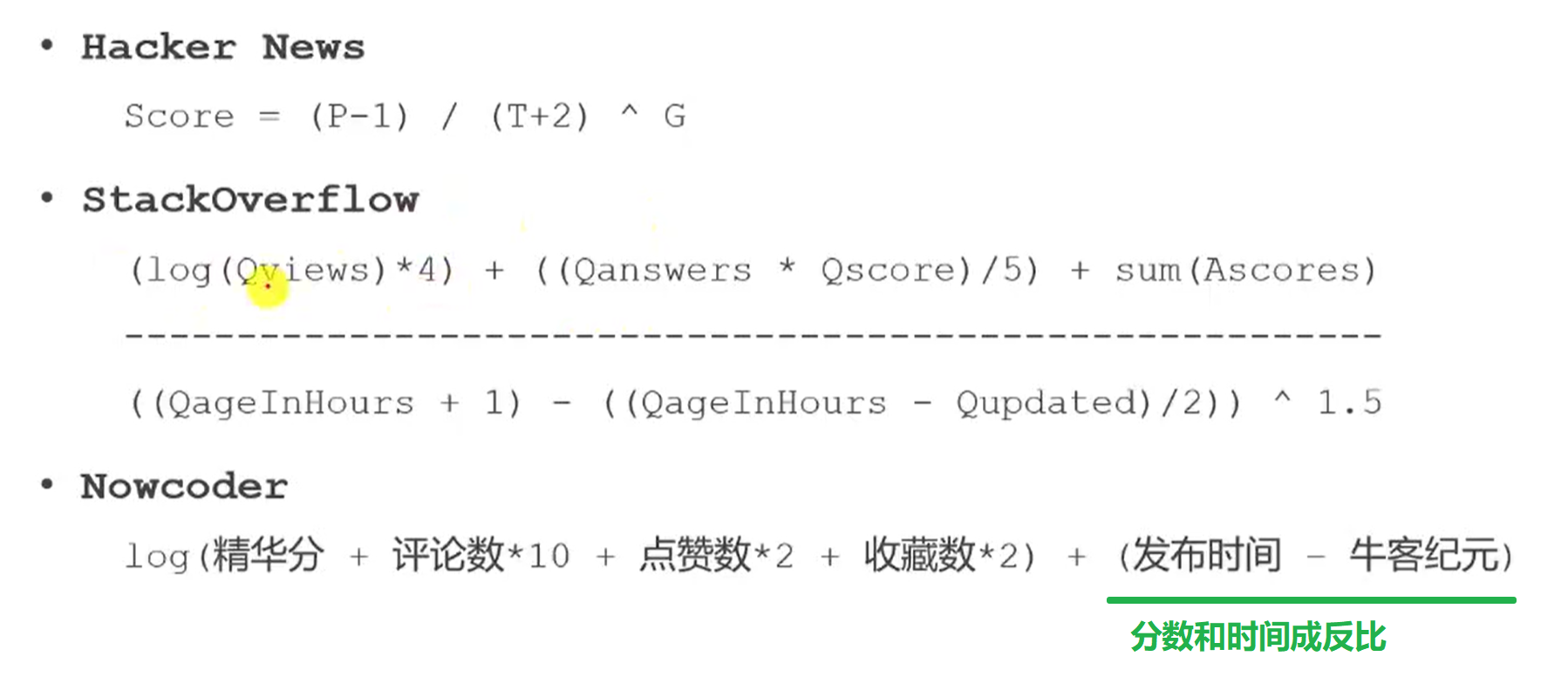

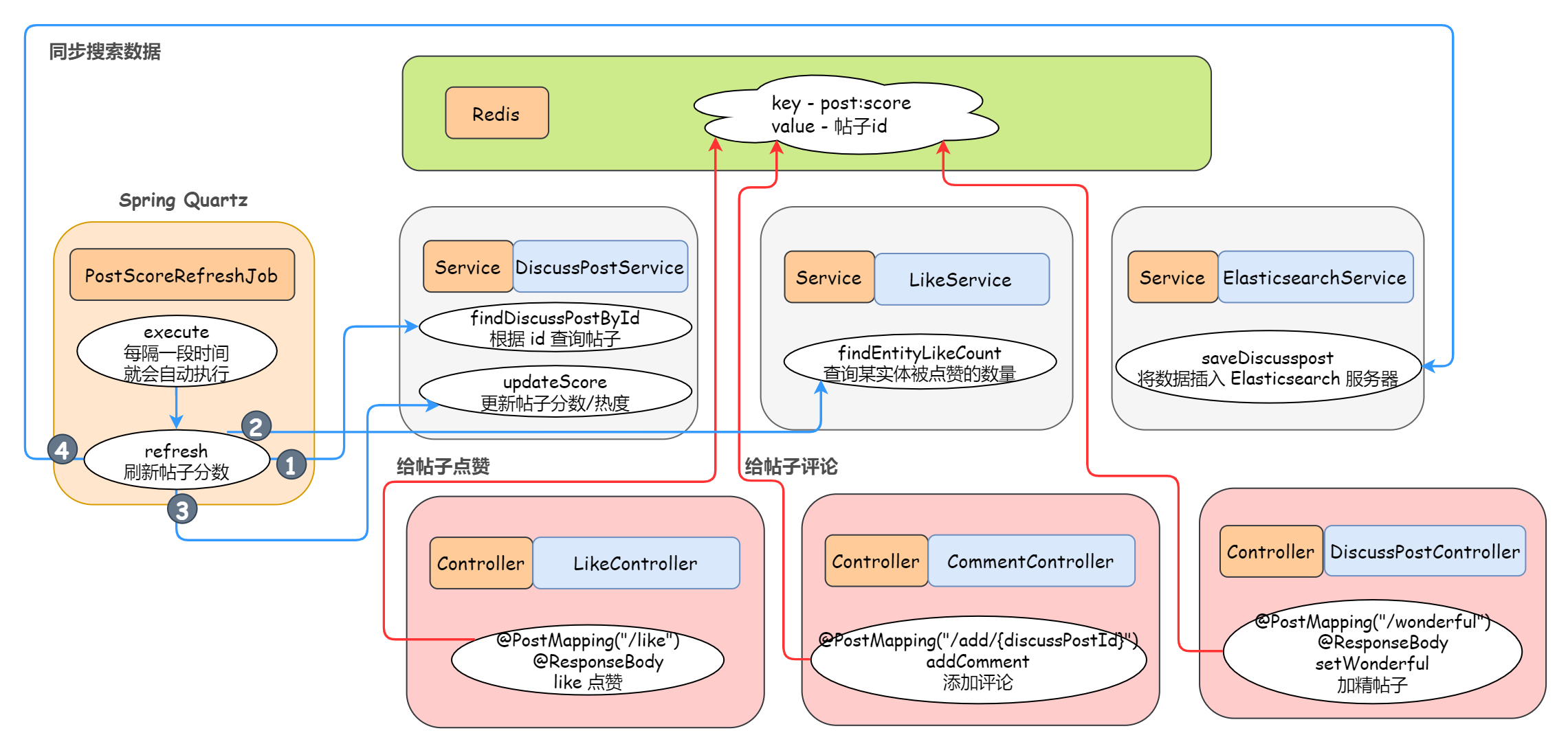

### Post hotness calculation

Whenever a post is liked, commented on, or featured, the post is recorded in the Redis cache. A distributed scheduled task (Spring Quartz) then periodically takes these posts out of the cache and recalculates their scores.

Score (hotness) formula: score = log10(max(weight, 1)) + number of days between the epoch and the post's creation time

```java
// Compute the weight
double w = (wonderful ? 75 : 0) + commentCount * 10 + likeCount * 2;
// Score = log10 of the weight (at least 1) + whole days from the epoch to the post's creation time
double score = Math.log10(Math.max(w, 1))
        + (post.getCreateTime().getTime() - epoch.getTime()) / (1000 * 3600 * 24);
```
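
For a concrete feel of the formula, here is a self-contained sketch with the same arithmetic. The class name `HotScoreSketch` and the method signatures are assumptions for illustration; in the project the inputs come from the post entity and a fixed epoch date.

```java
import java.util.concurrent.TimeUnit;

/** Standalone version of the hotness formula above (illustrative only). */
final class HotScoreSketch {

    /** Weight: 75 for a featured post, 10 per comment, 2 per like. */
    static double weight(boolean wonderful, int commentCount, long likeCount) {
        return (wonderful ? 75 : 0) + commentCount * 10 + likeCount * 2;
    }

    /** Score: log10 of the weight (floored at 1) plus whole days since the epoch. */
    static double score(double weight, long createTimeMillis, long epochMillis) {
        long days = (createTimeMillis - epochMillis) / TimeUnit.DAYS.toMillis(1);
        return Math.log10(Math.max(weight, 1)) + days;
    }
}
```

A featured post with 10 comments and 50 likes has weight 75 + 100 + 100 = 275. If it was created 100 days after the epoch, its score is log10(275) + 100, roughly 102.44. Because the weight enters through a logarithm while age enters linearly, newer posts tend to outrank older ones unless the older post has far more interaction.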

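## 📖 Companion tutorial

If you want to build this project from scratch or understand it in depth, scan the QR code below to follow the WeChat official account 『**飞天小牛肉**』 and get the companion tutorial as soon as it is published. It explains the main technical points of this project in detail and collects the related common interview questions; it is still being updated.

<img src="https://gitee.com/veal98/images/raw/master/img/20210204145531.png" style="zoom:67%;" />

## 📞 Contact me

If you have questions, you can also add me on WeChat. Please state your purpose in the note, in the format <u>(school or company - name or nickname - purpose)</u>

<img width="260px" src="https://gitee.com/veal98/images/raw/master/img/微信图片_20210105121328.jpg" >

## 👏 Acknowledgements

This project is based on the Java Senior Engineer course from [Nowcoder (牛客网)](https://www.nowcoder.com/). Thanks to the instructors and the platform.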

21

docs/.vuepress/config.js

Normal file


@ -0,0 +1,21 @@

module.exports = {
    title: '开源社区系统 — Echo', // site title
    description : '一款基于 SpringBoot + MyBatis + MySQL + Redis + Kafka + Elasticsearch + ... 实现的开源社区系统,并提供详细的开发文档和配套教程',
    themeConfig : {
        nav : [
            {
                text: '仓库地址',
                items: [
                    { text: 'Github', link: 'https://github.com/Veal98/Echo' },
                    { text: 'Gitee', link: 'https://gitee.com/veal98/echo' }
                ]
            },
            { text: '体验项目', link: 'http://1.15.127.74:8080/' },
            { text: '配套教程', link: '/error' }
        ],
        sidebar: 'auto', // sidebar configuration
        sidebarDepth : 2,
        lastUpdated: 'Last Updated', // string | boolean
    }
}

20

docs/.vuepress/dist/404.html

vendored

Normal file


@ -0,0 +1,20 @@

|

||||

<!DOCTYPE html>

|

||||

<html lang="en-US">

|

||||

<head>

|

||||

<meta charset="utf-8">

|

||||

<meta name="viewport" content="width=device-width,initial-scale=1">

|

||||

<title>VuePress</title>

|

||||

<meta name="generator" content="VuePress 1.8.0">

|

||||

|

||||

<meta name="description" content="">

|

||||

|

||||

<link rel="preload" href="/assets/css/0.styles.d39084ab.css" as="style"><link rel="preload" href="/assets/js/app.b571f5e2.js" as="script"><link rel="preload" href="/assets/js/6.6e8c67be.js" as="script"><link rel="prefetch" href="/assets/js/2.12ad9e69.js"><link rel="prefetch" href="/assets/js/3.9f41a7be.js"><link rel="prefetch" href="/assets/js/4.865692a8.js"><link rel="prefetch" href="/assets/js/5.81254301.js"><link rel="prefetch" href="/assets/js/7.0bbea29c.js">

|

||||

<link rel="stylesheet" href="/assets/css/0.styles.d39084ab.css">

|

||||

</head>

|

||||

<body>

|

||||

<div id="app" data-server-rendered="true"><div class="theme-container"><div class="theme-default-content"><h1>404</h1> <blockquote>How did we get here?</blockquote> <a href="/" class="router-link-active">

|

||||

Take me home.

|

||||

</a></div></div><div class="global-ui"></div></div>

|

||||

<script src="/assets/js/app.b571f5e2.js" defer></script><script src="/assets/js/6.6e8c67be.js" defer></script>

|

||||

</body>

|

||||

</html>

|

||||

1

docs/.vuepress/dist/assets/css/0.styles.d39084ab.css

vendored

Normal file


File diff suppressed because one or more lines are too long

1

docs/.vuepress/dist/assets/img/search.83621669.svg

vendored

Normal file


@ -0,0 +1 @@

|

||||

<?xml version="1.0" encoding="UTF-8"?><svg xmlns="http://www.w3.org/2000/svg" width="12" height="13"><g stroke-width="2" stroke="#aaa" fill="none"><path d="M11.29 11.71l-4-4"/><circle cx="5" cy="5" r="4"/></g></svg>

|

||||

|

After Width: | Height: | Size: 216 B |

1

docs/.vuepress/dist/assets/js/2.12ad9e69.js

vendored

Normal file


File diff suppressed because one or more lines are too long

1

docs/.vuepress/dist/assets/js/3.9f41a7be.js

vendored

Normal file


@ -0,0 +1 @@

|

||||

(window.webpackJsonp=window.webpackJsonp||[]).push([[3],{328:function(t,e,n){},356:function(t,e,n){"use strict";n(328)},364:function(t,e,n){"use strict";n.r(e);var i={functional:!0,props:{type:{type:String,default:"tip"},text:String,vertical:{type:String,default:"top"}},render:function(t,e){var n=e.props,i=e.slots;return t("span",{class:["badge",n.type],style:{verticalAlign:n.vertical}},n.text||i().default)}},r=(n(356),n(42)),p=Object(r.a)(i,void 0,void 0,!1,null,"15b7b770",null);e.default=p.exports}}]);

|

||||

1

docs/.vuepress/dist/assets/js/4.865692a8.js

vendored

Normal file


@ -0,0 +1 @@

|

||||

(window.webpackJsonp=window.webpackJsonp||[]).push([[4],{329:function(e,t,c){},357:function(e,t,c){"use strict";c(329)},361:function(e,t,c){"use strict";c.r(t);var i={name:"CodeBlock",props:{title:{type:String,required:!0},active:{type:Boolean,default:!1}}},n=(c(357),c(42)),s=Object(n.a)(i,(function(){var e=this.$createElement;return(this._self._c||e)("div",{staticClass:"theme-code-block",class:{"theme-code-block__active":this.active}},[this._t("default")],2)}),[],!1,null,"6d04095e",null);t.default=s.exports}}]);

|

||||

1

docs/.vuepress/dist/assets/js/5.81254301.js

vendored

Normal file


@ -0,0 +1 @@

|

||||

(window.webpackJsonp=window.webpackJsonp||[]).push([[5],{330:function(e,t,o){},358:function(e,t,o){"use strict";o(330)},362:function(e,t,o){"use strict";o.r(t);o(23),o(93),o(65),o(95);var a={name:"CodeGroup",data:function(){return{codeTabs:[],activeCodeTabIndex:-1}},watch:{activeCodeTabIndex:function(e){this.codeTabs.forEach((function(e){e.elm.classList.remove("theme-code-block__active")})),this.codeTabs[e].elm.classList.add("theme-code-block__active")}},mounted:function(){var e=this;this.codeTabs=(this.$slots.default||[]).filter((function(e){return Boolean(e.componentOptions)})).map((function(t,o){return""===t.componentOptions.propsData.active&&(e.activeCodeTabIndex=o),{title:t.componentOptions.propsData.title,elm:t.elm}})),-1===this.activeCodeTabIndex&&this.codeTabs.length>0&&(this.activeCodeTabIndex=0)},methods:{changeCodeTab:function(e){this.activeCodeTabIndex=e}}},c=(o(358),o(42)),n=Object(c.a)(a,(function(){var e=this,t=e.$createElement,o=e._self._c||t;return o("div",{staticClass:"theme-code-group"},[o("div",{staticClass:"theme-code-group__nav"},[o("ul",{staticClass:"theme-code-group__ul"},e._l(e.codeTabs,(function(t,a){return o("li",{key:t.title,staticClass:"theme-code-group__li"},[o("button",{staticClass:"theme-code-group__nav-tab",class:{"theme-code-group__nav-tab-active":a===e.activeCodeTabIndex},on:{click:function(t){return e.changeCodeTab(a)}}},[e._v("\n "+e._s(t.title)+"\n ")])])})),0)]),e._v(" "),e._t("default"),e._v(" "),e.codeTabs.length<1?o("pre",{staticClass:"pre-blank"},[e._v("// Make sure to add code blocks to your code group")]):e._e()],2)}),[],!1,null,"32c2d7ed",null);t.default=n.exports}}]);

|

||||

1

docs/.vuepress/dist/assets/js/6.6e8c67be.js

vendored

Normal file


@ -0,0 +1 @@

|

||||

(window.webpackJsonp=window.webpackJsonp||[]).push([[6],{360:function(t,e,s){"use strict";s.r(e);var n=["There's nothing here.","How did we get here?","That's a Four-Oh-Four.","Looks like we've got some broken links."],o={methods:{getMsg:function(){return n[Math.floor(Math.random()*n.length)]}}},i=s(42),h=Object(i.a)(o,(function(){var t=this.$createElement,e=this._self._c||t;return e("div",{staticClass:"theme-container"},[e("div",{staticClass:"theme-default-content"},[e("h1",[this._v("404")]),this._v(" "),e("blockquote",[this._v(this._s(this.getMsg()))]),this._v(" "),e("RouterLink",{attrs:{to:"/"}},[this._v("\n Take me home.\n ")])],1)])}),[],!1,null,null,null);e.default=h.exports}}]);

|

||||

1

docs/.vuepress/dist/assets/js/7.0bbea29c.js

vendored

Normal file


File diff suppressed because one or more lines are too long

13

docs/.vuepress/dist/assets/js/app.b571f5e2.js

vendored

Normal file


File diff suppressed because one or more lines are too long

@ -1,411 +0,0 @@

# Building the community home page

---

## DiscussPost (discussion post)

### Entity

### DAO

- Mapper interface `DiscussPostMapper`
- Corresponding XML configuration file `discusspost-mapper.xml`

The `@Param` annotation gives a parameter an alias. **If a method has only one parameter** and that parameter is used inside an `<if>`, the alias is required.

```java
import java.util.List;

import org.apache.ibatis.annotations.Mapper;
import org.apache.ibatis.annotations.Param;

@Mapper
public interface DiscussPostMapper {

    /**
     * Query discussion posts with pagination.
     *
     * @param userId when 0, query posts from all users;
     *               otherwise, query posts from the given user
     * @param offset start index of the page
     * @param limit  number of rows per page
     * @return the posts on the requested page
     */
    List<DiscussPost> selectDiscussPosts(int userId, int offset, int limit);

    /**
     * Count discussion posts.
     *
     * @param userId when 0, count posts from all users;
     *               otherwise, count posts from the given user
     * @return the number of matching posts
     */
    int selectDiscussPostRows(@Param("userId") int userId);
}
```

The corresponding mapper XML:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.greate.community.dao.DiscussPostMapper">

    <sql id = "selectFields">
        id, user_id, title, content, type, status, create_time, comment_count, score
    </sql>

    <!-- Query discussion posts with pagination -->
    <!-- Blocked posts are hidden; results are ordered by pinned status, then creation time -->
    <select id = "selectDiscussPosts" resultType="DiscussPost">
        select <include refid="selectFields"></include>
        from discuss_post
        where status != 2
        <if test = "userId!=0">
            and user_id = #{userId}
        </if>
        order by type desc, create_time desc
        limit #{offset}, #{limit}
    </select>

    <!-- Count discussion posts -->
    <select id = "selectDiscussPostRows" resultType="int">
        select count(id)
        from discuss_post
        where status != 2
        <if test = "userId != 0">
            and user_id = #{userId}
        </if>
    </select>

</mapper>
```

### Service

If auto-injecting the Mapper is flagged as an error, see [关于IDEA中@Autowired 注解报错~图文](https://www.cnblogs.com/taopanfeng/p/10994075.html)

```java
import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class DiscussPostSerivce {

    @Autowired
    private DiscussPostMapper discussPostMapper;

    /**
     * Query discussion posts with pagination.
     *
     * @param userId when 0, query posts from all users;
     *               otherwise, query posts from the given user
     * @param offset start index of the page
     * @param limit  number of rows per page
     * @return the posts on the requested page
     */
    public List<DiscussPost> findDiscussPosts (int userId, int offset, int limit) {
        return discussPostMapper.selectDiscussPosts(userId, offset, limit);
    }

    /**
     * Count discussion posts.
     *
     * @param userId when 0, count posts from all users;
     *               otherwise, count posts from the given user
     * @return the number of matching posts
     */
    public int findDiscussPostRows (int userId) {
        return discussPostMapper.selectDiscussPostRows(userId);
    }

}
```

|

||||

|

||||

## User

|

||||

|

||||

### Entity

|

||||

|

||||

### DAO

|

||||

|

||||

```java
import org.apache.ibatis.annotations.Mapper;

@Mapper
public interface UserMapper {

    /**
     * Find a user by id.
     * @param id user id
     * @return the matching user
     */
    User selectById (int id);

    /**
     * Find a user by username.
     * @param username username
     * @return the matching user
     */
    User selectByName(String username);

    /**
     * Find a user by email.
     * @param email email address
     * @return the matching user
     */
    User selectByEmail(String email);

    /**
     * Insert a user (registration).
     * @param user user to insert
     * @return number of rows affected
     */
    int insertUser(User user);

    /**
     * Update a user's status.
     * @param id user id
     * @param status 0: not activated, 1: activated
     * @return number of rows affected
     */
    int updateStatus(int id, int status);

    /**
     * Update a user's avatar.
     * @param id user id
     * @param headerUrl URL of the new avatar
     * @return number of rows affected
     */
    int updateHeader(int id, String headerUrl);

    /**
     * Update a user's password.
     * @param id user id
     * @param password new password
     * @return number of rows affected
     */
    int updatePassword(int id, String password);

}
```

The corresponding mapper.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.greate.community.dao.UserMapper">

    <sql id = "insertFields">
        username, password, salt, email, type, status, activation_code, header_url, create_time
    </sql>

    <sql id = "selectFields">
        id, username, password, salt, email, type, status, activation_code, header_url, create_time
    </sql>

    <!-- Find a user by id -->
    <select id = "selectById" resultType = "User">
        select <include refid="selectFields"></include>
        from user
        where id = #{id}
    </select>

    <!-- Find a user by username -->
    <select id="selectByName" resultType="User">
        select <include refid="selectFields"></include>
        from user
        where username = #{username}
    </select>

    <!-- Find a user by email -->
    <select id="selectByEmail" resultType="User">
        select <include refid="selectFields"></include>
        from user
        where email = #{email}
    </select>

    <!-- Insert a user (registration) -->
    <insert id="insertUser" parameterType="User" keyProperty="id">
        insert into user (<include refid="insertFields"></include>)
        values(#{username}, #{password}, #{salt}, #{email}, #{type}, #{status}, #{activationCode}, #{headerUrl}, #{createTime})
    </insert>

    <!-- Update a user's status -->
    <update id="updateStatus">
        update user set status = #{status} where id = #{id}
    </update>

    <!-- Update a user's avatar -->
    <update id="updateHeader">
        update user set header_url = #{headerUrl} where id = #{id}
    </update>

    <!-- Update a user's password -->
    <update id="updatePassword">
        update user set password = #{password} where id = #{id}
    </update>

</mapper>
```

|

||||

|

||||

### Service

|

||||

|

||||

```java
@Service
public class UserService {

    @Autowired
    private UserMapper userMapper;

    public User findUserById (int id) {
        return userMapper.selectById(id);
    }

}
```

## Page (pagination)

```java
/**
 * Encapsulates pagination information.
 */
public class Page {

    // Current page number
    private int current = 1;
    // Maximum number of posts shown per page
    private int limit = 10;
    // Total number of posts (used to compute the number of pages)
    private int rows;
    // Query path (lets the pagination links be reused, since pages other than the home page are paginated too)
    private String path;

    public int getCurrent() {
        return current;
    }

    public void setCurrent(int current) {
        if (current >= 1) {
            this.current = current;
        }
    }

    public int getLimit() {
        return limit;
    }

    public void setLimit(int limit) {
        // Accept only limits in the range 1..100 (the original checked `current` here by mistake)
        if (limit >= 1 && limit <= 100) {
            this.limit = limit;
        }
    }

    public int getRows() {
        return rows;
    }

    public void setRows(int rows) {
        if (rows >= 0) {
            this.rows = rows;
        }
    }

    public String getPath() {
        return path;
    }

    public void setPath(String path) {
        this.path = path;
    }

    /**
     * Start index (offset) of the current page.
     * @return the offset of the first row on the current page
     */
    public int getOffset() {
        return current * limit - limit;
    }

    /**
     * Total number of pages.
     * @return rows divided by limit, rounded up
     */
    public int getTotal() {
        if (rows % limit == 0) {
            return rows / limit;
        }
        else {
            return rows / limit + 1;
        }
    }

    /**
     * First page number shown in the pagination bar.
     * The bar shows the current page and the two pages before and after it.
     * @return the first page number to display
     */
    public int getFrom() {
        int from = current - 2;
        return from < 1 ? 1 : from;
    }

    /**
     * Last page number shown in the pagination bar.
     * @return the last page number to display
     */
    public int getTo() {
        int to = current + 2;
        int total = getTotal();
        return to > total ? total : to;
    }
}

```
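
As a quick worked check of the arithmetic in `Page`, the same calculations can be written as standalone static methods. The class name `PageMath` and its method names are hypothetical; the formulas mirror `getOffset`, `getTotal`, `getFrom`, and `getTo` above.

```java
/** Standalone copies of the Page calculations, for experimenting with numbers (illustrative only). */
final class PageMath {

    /** Offset of the first row on a page: pages are 1-based, offsets are 0-based. */
    static int offset(int current, int limit) {
        return current * limit - limit;
    }

    /** Number of pages needed for the given row count, rounding up. */
    static int totalPages(int rows, int limit) {
        return rows % limit == 0 ? rows / limit : rows / limit + 1;
    }

    /** First page shown in the pagination bar (two pages before the current one, at least 1). */
    static int from(int current) {
        return Math.max(current - 2, 1);
    }

    /** Last page shown in the pagination bar (two pages after the current one, at most the total). */
    static int to(int current, int total) {
        return Math.min(current + 2, total);
    }
}
```

With 95 posts and 10 per page there are 10 pages; page 3 starts at offset 20, and on page 9 the bar runs from page 7 to page 10.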

|

||||

|

||||

## Controller

|

||||

|

||||

```java

|

||||

@Controller

|

||||

public class HomeController {

|

||||

|

||||

@Autowired

|

||||

private DiscussPostSerivce discussPostSerivce;

|

||||

|

||||

@Autowired

|

||||

private UserService userService;

|

||||

|

||||

@GetMapping("/index")

|

||||

public String getIndexPage(Model model, Page page) {

|

||||

// 获取总页数

|

||||

page.setRows(discussPostSerivce.findDiscussPostRows(0));

|

||||

page.setPath("/index");

|

||||

|

||||

// Paged query

|

||||

List<DiscussPost> list = discussPostSerivce.findDiscussPosts(0, page.getOffset(), page.getLimit());

|

||||

// Bundle each post with its author's user information

|

||||

List<Map<String, Object>> discussPosts = new ArrayList<>();

|

||||

if (list != null) {

|

||||

for (DiscussPost post : list) {

|

||||

Map<String, Object> map = new HashMap<>();

|

||||

map.put("post", post);

|

||||

User user = userService.findUserById(post.getUserId());

|

||||

map.put("user", user);

|

||||

discussPosts.add(map);

|

||||

}

|

||||

}

|

||||

model.addAttribute("discussPosts", discussPosts);

|

||||

return "index";

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

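🚩 Tip: there is no need to add Page to the model explicitly (`model.addAttribute("page", page);`).

Before the handler method is invoked, Spring MVC instantiates both Model and Page and adds the Page object to the Model automatically (under the default name `page`, derived from the class name), so Thymeleaf can read the Page data directly.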

|

||||

|

||||

## Front-end page: index.html

|

||||

|

||||

```html

|

||||

th:each="map:${discussPosts}"

|

||||

```

|

||||

|

||||

On each iteration over `discussPosts`, the current element is bound to the variable `map`.

|

||||

|

||||

215

docs/100-发布帖子.md


@ -1,215 +0,0 @@

|

||||

# Publishing a Post

|

||||

|

||||

---

|

||||

|

||||

<img src="https://gitee.com/veal98/images/raw/master/img/20210122102856.png" style="zoom: 33%;" />

|

||||

|

||||

## Util

|

||||

|

||||

Utility method: wraps the server's response message in a JSON string

|

||||

|

||||

```java

|

||||

/**

|

||||

* Wraps the server's response message in a JSON string

|

||||

* @param code status code

|

||||

* @param msg message shown to the user

|

||||

* @param map business data

|

||||

* @return the JSON string

|

||||

*/

|

||||

public static String getJSONString(int code, String msg, Map<String, Object> map) {

|

||||

JSONObject json = new JSONObject();

|

||||

json.put("code", code);

|

||||

json.put("msg", msg);

|

||||

if (map != null) {

|

||||

for (String key : map.keySet()) {

|

||||

json.put(key, map.get(key));

|

||||

}

|

||||

}

|

||||

return json.toJSONString();

|

||||

}

|

||||

|

||||

// Overload: the server method may return no business data

|

||||

public static String getJSONString(int code, String msg) {

|

||||

return getJSONString(code, msg, null);

|

||||

}

|

||||

|

||||

// Overload: the server method may return neither business data nor a message

|

||||

public static String getJSONString(int code) {

|

||||

return getJSONString(code, null, null);

|

||||

}

|

||||

|

||||

/**

|

||||

* Quick test

|

||||

* @param args

|

||||

*/

|

||||

public static void main(String[] args) {

|

||||

Map<String, Object> map = new HashMap<>();

|

||||

map.put("name", "Jack");

|

||||

map.put("age", 18);

|

||||

// {"msg":"ok","code":0,"name":"Jack","age":18}

|

||||

System.out.println(getJSONString(0, "ok", map));

|

||||

}

|

||||

```
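One consequence of copying every entry of `map` into the top level of the JSON object is that a business key named `code` or `msg` overwrites the corresponding status field. The sketch below reproduces only that merging step with a plain `LinkedHashMap`, with no JSON library involved; `MergeSketch.merge` is a hypothetical helper written for this illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrates the key-merging behaviour of getJSONString with a plain map.
// MergeSketch is a hypothetical helper, not part of the project.
final class MergeSketch {

    // Puts code and msg first, then copies the business data on top of them.
    static Map<String, Object> merge(int code, String msg, Map<String, Object> data) {
        Map<String, Object> out = new LinkedHashMap<>();
        out.put("code", code);
        out.put("msg", msg);
        if (data != null) {
            // A key named "code" or "msg" in data replaces the value set above.
            out.putAll(data);
        }
        return out;
    }
}
```

If this matters for a given endpoint, one option is to nest the business data under a single key such as `data`; that is a design suggestion, not something the original code does.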

|

||||

|

||||

|

||||

|

||||

## DAO

|

||||

|

||||

`DiscussPostMapper`

|

||||

|

||||

```java

|

||||

/**

|

||||

* Inserts a post

|

||||

* @param discussPost

|

||||

* @return

|

||||

*/

|

||||

int insertDiscussPost(DiscussPost discussPost);

|

||||

```

|

||||

|

||||

The corresponding `mapper.xml`:

|

||||

|

||||

```xml

|

||||

<!-- Insert a post -->

|

||||

<insert id="insertDiscussPost" parameterType="DiscussPost" keyProperty="id">

|

||||

insert into discuss_post (<include refid="insertFields"></include>)

|

||||

values(#{userId}, #{title}, #{content}, #{type}, #{status}, #{createTime}, #{commentCount}, #{score})

|

||||

</insert>

|

||||

```

|

||||

|

||||

## Service

|

||||

|

||||

```java

|

||||

/**

|

||||

* Adds a post

|

||||

* @param discussPost

|

||||

* @return

|

||||

*/

|

||||

public int addDiscussPost(DiscussPost discussPost) {

|

||||

if (discussPost == null) {

|

||||

throw new IllegalArgumentException("参数不能为空");

|

||||

}

|

||||

|

||||

// Escape HTML markup so that malicious markup cannot be injected into the page

|

||||

discussPost.setTitle(HtmlUtils.htmlEscape(discussPost.getTitle()));

|

||||

discussPost.setContent(HtmlUtils.htmlEscape(discussPost.getContent()));

|

||||

|

||||

// Filter sensitive words

|

||||

discussPost.setTitle(sensitiveFilter.filter(discussPost.getTitle()));

|

||||

discussPost.setContent(sensitiveFilter.filter(discussPost.getContent()));

|

||||

|

||||

return discussPostMapper.insertDiscussPost(discussPost);

|

||||

}

|

||||

```

|

||||

|

||||

Escaping HTML markup prevents malicious markup, such as `<script>alert('哈哈')</script>`, from being injected into the page.
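To see what escaping does to such input, here is a minimal hand-written sketch. It is not Spring's `HtmlUtils.htmlEscape`, which handles many more characters; `EscapeSketch.escape` is a hypothetical helper that only replaces the five characters most relevant to markup injection.

```java
// Minimal illustration of HTML escaping; not a replacement for HtmlUtils.
// EscapeSketch is a hypothetical helper written for this example.
final class EscapeSketch {

    // Replaces &, <, >, " and ' with their HTML entity equivalents.
    static String escape(String input) {
        if (input == null) {
            return null;
        }
        StringBuilder sb = new StringBuilder(input.length());
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':  sb.append("&amp;");  break;
                case '<':  sb.append("&lt;");   break;
                case '>':  sb.append("&gt;");   break;
                case '"':  sb.append("&quot;"); break;
                case '\'': sb.append("&#39;");  break;
                default:   sb.append(c);
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // prints: &lt;script&gt;alert(&#39;hi&#39;)&lt;/script&gt;
        System.out.println(escape("<script>alert('hi')</script>"));
    }
}
```

After escaping, the browser renders the text literally instead of executing it as a script.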

|

||||

|

||||

## Controller

|

||||

|

||||

```java

|

||||

@Controller

|

||||

@RequestMapping("/discuss")

|

||||

public class DiscussPostController {

|

||||

|

||||

@Autowired

|

||||

private DiscussPostSerivce discussPostSerivce;

|

||||

|

||||

@Autowired

|

||||

private HostHolder hostHolder;

|

||||

|

||||

/**

|

||||

* Adds a post (publishing)

|

||||

* @param title

|

||||

* @param content

|

||||

* @return

|

||||

*/

|

||||

@PostMapping("/add")

|

||||

@ResponseBody

|

||||

public String addDiscussPost(String title, String content) {

|

||||

User user = hostHolder.getUser();

|

||||

if (user == null) {

|

||||

return CommunityUtil.getJSONString(403, "您还未登录");

|

||||

}

|

||||

|

||||

DiscussPost discussPost = new DiscussPost();

|

||||

discussPost.setUserId(user.getId());

|

||||

discussPost.setTitle(title);

|

||||

discussPost.setContent(content);

|

||||

discussPost.setCreateTime(new Date());

|

||||

|

||||

discussPostSerivce.addDiscussPost(discussPost);

|

||||

|

||||

// Error cases will be handled uniformly later

|

||||

return CommunityUtil.getJSONString(0, "发布成功");

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

## Front End

|

||||

|

||||

```html

|

||||

<button data-toggle="modal" data-target="#publishModal"

|

||||

th:if="${loginUser != null}">我要发布</button>

|

||||

|

||||

<!-- Publish dialog -->

|

||||

<div class="modal fade" id="publishModal">

|

||||

标题:<input id="recipient-name">

|

||||

正文:<textarea id="message-text"></textarea>

|

||||

<button id="publishBtn">发布</button>

|

||||

</div>

|

||||

|

||||

<!-- Hint dialog -->

|

||||

<div class="modal fade" id="hintModal">

|

||||

......

|

||||

</div>

|

||||

```

|

||||

|

||||

The corresponding JavaScript:

|

||||

|

||||

```js

|

||||

$(function(){

|

||||

$("#publishBtn").click(publish);

|

||||

});

|

||||

|

||||

function publish() {

|

||||

$("#publishModal").modal("hide");

|

||||

// Read the title and content

|

||||

var title = $("#recipient-name").val();

|

||||

var content = $("#message-text").val();

|

||||

// Send the asynchronous request

|

||||

$.post(

|

||||

CONTEXT_PATH + "/discuss/add",

|

||||

{"title": title, "content": content},

|

||||

// Handle the data returned by the server

|

||||

function (data) {

|

||||

// String -> JSON object

|

||||

data = $.parseJSON(data);

|

||||

// Show the server's message in the hint box (hintBody)

|

||||

$("#hintBody").text(data.msg);

|

||||

// Show the hint box

|

||||

$("#hintModal").modal("show");

|

||||

// Hide the hint box automatically after 2 s

|

||||

setTimeout(function(){

|

||||

$("#hintModal").modal("hide");

|

||||

// Reload the page on success

|

||||

if (data.code == 0) {

|

||||

window.location.reload();

|

||||

}

|

||||

}, 2000);

|

||||

|

||||

}

|

||||

)

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

```js

|

||||

var title = $("#recipient-name").val();

|

||||

```

|

||||

|

||||

This reads the value of the input element whose id is `recipient-name`.

|

||||

|

||||

@ -1,41 +0,0 @@

|

||||

# Post Detail Page

|

||||

|

||||

---

|

||||

|

||||

## DAO

|

||||

|

||||

## Service

|

||||

|

||||

## Controller

|

||||

|

||||

```java

|

||||

/**

|

||||

* Opens the post detail page

|

||||

* @param discussPostId

|

||||

* @param model

|

||||

* @return

|

||||

*/

|

||||

@GetMapping("/detail/{discussPostId}")

|

||||

public String getDiscussPost(@PathVariable("discussPostId") int discussPostId, Model model) {

|

||||

// Post

|

||||

DiscussPost discussPost = discussPostSerivce.findDiscussPostById(discussPostId);

|

||||

model.addAttribute("post", discussPost);

|

||||

// Author

|

||||

User user = userService.findUserById(discussPost.getUserId());

|

||||

model.addAttribute("user", user);

|

||||

|

||||

return "/site/discuss-detail";

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

## Front End

|

||||

|

||||

```html

|

||||

<span th:utext="${post.title}"></span>

|

||||

|

||||

<div th:utext="${user.username}"></div>

|

||||

|

||||

<div th:utext="${post.content}"></div>

|

||||

```

|

||||

|

||||

193

docs/120-显示评论.md


@ -1,193 +0,0 @@

|

||||

# Displaying Comments

|

||||

|

||||

---

|

||||

|

||||

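Three fields of the comment table deserve an explanation:

- `entity_type`: a comment can target either a post or another comment on that post (a reply). This field records the kind of target: 1 for a post, 2 for a comment.
- `entity_id`: the id of the target, for example the post with id 115 or the comment with id 231.
- `target_id`: for a reply, the user whose comment is being replied to. It only applies to replies and defaults to 0.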
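The relationship between these three fields is easier to see with concrete values. The sketch below is illustrative only: `CommentTarget` and its factory methods are hypothetical, the two constants mirror the values described above (1 for a post, 2 for a comment), and any ids passed in are made up.

```java
// Illustrative model of the three comment-table fields described above.
// CommentTarget and its factory methods are hypothetical, not project code.
final class CommentTarget {
    static final int ENTITY_TYPE_POST = 1;    // the target is a post
    static final int ENTITY_TYPE_COMMENT = 2; // the target is another comment

    final int entityType; // kind of target
    final int entityId;   // id of the target post or comment
    final int targetId;   // user being replied to, 0 if none

    private CommentTarget(int entityType, int entityId, int targetId) {
        this.entityType = entityType;
        this.entityId = entityId;
        this.targetId = targetId;
    }

    // A comment written directly on a post; target_id keeps its default of 0.
    static CommentTarget onPost(int postId) {
        return new CommentTarget(ENTITY_TYPE_POST, postId, 0);
    }

    // A reply under a comment; targetUserId is 0 unless it answers a specific user.
    static CommentTarget onComment(int commentId, int targetUserId) {
        return new CommentTarget(ENTITY_TYPE_COMMENT, commentId, targetUserId);
    }

    // True only for replies that are addressed to a particular user.
    boolean addressesUser() {
        return entityType == ENTITY_TYPE_COMMENT && targetId != 0;
    }
}
```

For example, `CommentTarget.onPost(115)` describes a comment on post 115, while `CommentTarget.onComment(231, 7)` describes a reply under comment 231 addressed to a (made-up) user 7.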

|

||||

|

||||

## DAO

|

||||

|

||||

```java

|

||||

@Mapper

|

||||

public interface CommentMapper {

|

||||

|

||||

/**

|

||||

* Queries comments for a target (type and id), with pagination

|

||||

* @param entityType type of the comment target

|

||||

* @param entityId id of the comment target

|

||||

* @param offset start index of the page

|

||||

* @param limit number of rows per page

|

||||

* @return

|

||||

*/

|

||||

List<Comment> selectCommentByEntity(int entityType, int entityId, int offset, int limit);

|

||||

|

||||

/**

|

||||

* Counts the comments for a target

|

||||

* @param entityType

|

||||

* @param entityId

|

||||

* @return

|

||||

*/

|

||||

int selectCountByEntity(int entityType, int entityId);

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

The corresponding `mapper.xml`:

|

||||

|

||||

```xml

|

||||

<mapper namespace="com.greate.community.dao.CommentMapper">

|

||||

|

||||

<sql id = "selectFields">

|

||||

id, user_id, entity_type, entity_id, target_id, content, status, create_time

|

||||

</sql>

|

||||

|

||||

<sql id = "insertFields">

|

||||

user_id, entity_type, entity_id, target_id, content, status, create_time

|

||||

</sql>

|

||||

|

||||

<!-- Paged comment query -->

|

||||

<!-- Excludes disabled comments; ordered by creation time, ascending -->

|

||||

<select id = "selectCommentByEntity" resultType="Comment">

|

||||

select <include refid="selectFields"></include>

|

||||

from comment

|

||||

where status = 0

|

||||

and entity_type = #{entityType}

|

||||

and entity_id = #{entityId}

|

||||

order by create_time asc

|

||||

limit #{offset}, #{limit}

|

||||

</select>

|

||||

|

||||

<!-- Count comments -->

|

||||

<select id = "selectCountByEntity" resultType="int">

|

||||

select count(id)

|

||||

from comment

|

||||

where status = 0

|

||||

and entity_type = #{entityType}

|

||||

and entity_id = #{entityId}

|

||||

</select>

|

||||

|

||||

</mapper>

|

||||

```

|

||||

|

||||

## Service

|

||||

|

||||

```java

|

||||

@Service

|

||||

public class CommentService {

|

||||

|

||||

@Autowired

|

||||

private CommentMapper commentMapper;

|

||||

|

||||

/**

|

||||

* Queries comments for a target (type and id), with pagination

|

||||

* @param entityType

|

||||

* @param entityId

|

||||

* @param offset

|

||||

* @param limit

|

||||

* @return

|

||||

*/

|

||||

public List<Comment> findCommentByEntity(int entityType, int entityId, int offset, int limit) {

|

||||

return commentMapper.selectCommentByEntity(entityType, entityId, offset, limit);

|

||||

}

|

||||

|

||||

|

||||

/**

|

||||

* Counts the comments for a target

|

||||

* @param entityType

|

||||

* @param entityId

|

||||

* @return

|

||||

*/

|

||||

public int findCommentCount(int entityType, int entityId) {

|

||||

return commentMapper.selectCountByEntity(entityType, entityId);

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

## Controller

|

||||

|

||||

Extend the post detail handler from the previous section (reusing the pagination component):

|

||||

|

||||

```java

|

||||

/**

|

||||

* Opens the post detail page

|

||||

* @param discussPostId

|

||||

* @param model

|

||||

* @return

|

||||

*/

|

||||

@GetMapping("/detail/{discussPostId}")

|

||||

public String getDiscussPost(@PathVariable("discussPostId") int discussPostId, Model model, Page page) {

|

||||

// Post

|

||||

DiscussPost discussPost = discussPostSerivce.findDiscussPostById(discussPostId);

|

||||

model.addAttribute("post", discussPost);

|

||||

// Author

|

||||

User user = userService.findUserById(discussPost.getUserId());

|

||||

model.addAttribute("user", user);

|

||||

|

||||

// Pagination settings for comments

|

||||

page.setLimit(5);

|

||||

page.setPath("/discuss/detail/" + discussPostId);

|

||||

page.setRows(discussPost.getCommentCount());

|

||||

|

||||

// Comments on the post

|

||||

List<Comment> commentList = commentService.findCommentByEntity(

|

||||

ENTITY_TYPE_POST, discussPost.getId(), page.getOffset(), page.getLimit());

|

||||

|

||||

List<Map<String, Object>> commentVoList = new ArrayList<>(); // Bundles each comment on the post with its author

|

||||

if (commentList != null) {

|

||||

for (Comment comment : commentList) {

|

||||

// A comment on the post

|

||||

Map<String, Object> commentVo = new HashMap<>();

|

||||

commentVo.put("comment", comment);

|

||||

commentVo.put("user", userService.findUserById(comment.getUserId()));

|

||||

|

||||

// Replies to this comment (not paginated)

|

||||

List<Comment> replyList = commentService.findCommentByEntity(

|

||||

ENTITY_TYPE_COMMENT, comment.getId(), 0, Integer.MAX_VALUE);

|

||||

List<Map<String, Object>> replyVoList = new ArrayList<>(); // Bundles each reply with its author

|

||||

if (replyList != null) {

|

||||

// A reply to the comment

|

||||

for (Comment reply : replyList) {

|

||||

Map<String, Object> replyVo = new HashMap<>();

|

||||

replyVo.put("reply", reply);

|

||||

replyVo.put("user", userService.findUserById(reply.getUserId()));

|

||||

User target = reply.getTargetId() == 0 ? null : userService.findUserById(reply.getTargetId());

|

||||

replyVo.put("target", target);

|

||||

|

||||

replyVoList.add(replyVo);

|

||||

}

|

||||

}

|

||||

commentVo.put("replys", replyVoList);

|

||||

|

||||

// Number of replies to this comment

|

||||

int replyCount = commentService.findCommentCount(ENTITY_TYPE_COMMENT, comment.getId());

|

||||

commentVo.put("replyCount", replyCount);

|

||||

|

||||

commentVoList.add(commentVo);

|

||||

}

|

||||

}

|

||||

|

||||

model.addAttribute("comments", commentVoList);

|

||||

|

||||

return "/site/discuss-detail";

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

## Front End

|

||||

|

||||

```html

|

||||

<li th:each="cvo:${comments}">

|

||||

<div th:utext="${cvo.comment.content}"></div>

|

||||

|

||||

<li th:each="rvo:${cvo.replys}">

|

||||

<span th:text="${rvo.reply.content}"></span>

|

||||

```

|

||||

|

||||

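`cvoStat` follows a fixed naming convention: the loop variable name plus `Stat` gives the iteration status object.

`cvoStat.count` is the number of the current iteration, starting from 1.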

|

||||

149

docs/130-添加评论.md


@ -1,149 +0,0 @@

|

||||

# Adding Comments

|

||||

|

||||

---

|

||||

|

||||

Transaction management (roll back when adding a comment fails):

- Declarative transactions (recommended; simpler)
- Programmatic transactions

|

||||

|

||||

<img src="https://gitee.com/veal98/images/raw/master/img/20210123142151.png" style="zoom: 50%;" />

|

||||

|

||||

## DAO

|

||||

|

||||

`DiscussPostMapper`

|

||||

|

||||

```java

|

||||

/**

|

||||

* Updates the comment count of a post

|

||||

* @param id post id

|

||||

* @param commentCount

|

||||

* @return

|

||||

*/

|

||||

int updateCommentCount(int id, int commentCount);

|

||||

```

|

||||

|

||||

The corresponding XML:

|

||||

|

||||

```xml

|

||||

<!-- Update the comment count of a post -->

|

||||

<update id="updateCommentCount">

|

||||

update discuss_post

|

||||

set comment_count = #{commentCount}

|

||||

where id = #{id}

|

||||

</update>

|

||||

```

|

||||

|

||||

`CommentMapper`

|

||||

|

||||

```java

|

||||

/**

|

||||

* Adds a comment

|

||||

* @param comment

|

||||

* @return

|

||||

*/

|

||||

int insertComment(Comment comment);

|

||||

```

|

||||

|

||||

The corresponding XML:

|

||||

|

||||

```xml

|

||||

<!-- Add a comment -->

|

||||

<insert id = "insertComment" parameterType="Comment">

|

||||

insert into comment(<include refid="insertFields"></include>)

|

||||

values(#{userId}, #{entityType}, #{entityId}, #{targetId}, #{content}, #{status}, #{createTime})

|

||||

</insert>

|

||||

```

|

||||

|

||||

## Service

|

||||

|

||||

`DiscussPostService`

|

||||

|

||||

```java

|

||||

/**

|

||||

* Updates the comment count of a post

|

||||

* @param id post id

|

||||

* @param commentCount

|

||||

* @return

|

||||

*/

|

||||

public int updateCommentCount(int id, int commentCount) {

|

||||

return discussPostMapper.updateCommentCount(id, commentCount);

|

||||

}

|

||||

```

|

||||

|

||||

`CommentService`

|

||||

|

||||

```java

|

||||

/**

|

||||

* Adds a comment (requires transaction management)

|

||||

* @param comment

|

||||

* @return

|

||||

*/

|

||||

@Transactional(isolation = Isolation.READ_COMMITTED, propagation = Propagation.REQUIRED)

|

||||

public int addComment(Comment comment) {

|

||||

if (comment == null) {

|

||||

throw new IllegalArgumentException("参数不能为空");

|

||||

}

|

||||

|

||||

// Escape HTML tags

|

||||

comment.setContent(HtmlUtils.htmlEscape(comment.getContent()));

|

||||

// Filter sensitive words

|

||||

comment.setContent(sensitiveFilter.filter(comment.getContent()));

|

||||

|

||||

// Insert the comment

|

||||

int rows = commentMapper.insertComment(comment);

|

||||

|

||||

// Update the post's comment count

|

||||

if (comment.getEntityType() == ENTITY_TYPE_POST) {

|

||||

int count = commentMapper.selectCountByEntity(comment.getEntityType(), comment.getEntityId());

|

||||

discussPostSerivce.updateCommentCount(comment.getEntityId(), count);

|

||||

}

|

||||

|

||||

return rows;

|

||||

}

|

||||

```

|

||||

|

||||

## Controller

|

||||

|

||||

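**The `name` attributes of the front-end form fields must match the field names of the `Comment` entity in `public String addComment(Comment comment) {`**, so that the submitted values are bound to the object directly.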

|

||||

|

||||

```java

|

||||

@Controller

|

||||

@RequestMapping("/comment")

|

||||

public class CommentController {

|

||||

|

||||

@Autowired

|

||||

private HostHolder hostHolder;

|

||||

|

||||

@Autowired

|

||||

private CommentService commentService;

|

||||

|

||||

/**

|

||||

* Adds a comment

|

||||

* @param discussPostId

|

||||

* @param comment

|

||||

* @return

|

||||

*/

|

||||

@PostMapping("/add/{discussPostId}")

|

||||

public String addComment(@PathVariable("discussPostId") int discussPostId, Comment comment) {

|

||||

comment.setUserId(hostHolder.getUser().getId());

|

||||

comment.setStatus(0);

|

||||

comment.setCreateTime(new Date());

|

||||

commentService.addComment(comment);

|

||||

|

||||

return "redirect:/discuss/detail/" + discussPostId;

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

## Front End

|

||||

|

||||

```html

|

||||

<form method="post" th:action="@{|/comment/add/${post.id}|}">

|

||||

<input type="text" class="input-size" name="content" th:placeholder="|回复${rvo.user.username}|"/>

|

||||

<input type="hidden" name="entityType" value="2">

|

||||

<input type="hidden" name="entityId" th:value="${cvo.comment.id}">

|

||||

<input type="hidden" name="targetId" th:value="${rvo.user.id}">

|

||||

</form>

|

||||

```

|

||||

355

docs/140-私信列表.md


@ -1,355 +0,0 @@

|

||||

# Private Message List

|

||||

|

||||

---

|

||||

|

||||

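This section implements the following:

- Private message list:
  - Query the current user's conversation list
  - Show only the latest message of each conversation
  - Support pagination
- Message details:
  - Query the messages in a given conversation
  - Support pagination
  - Mark the displayed messages as read when the detail page is opened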

|

||||

|

||||

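The `message` table has a `conversation_id` column. It is designed so that whether user 112 messages user 113 or user 113 messages user 112, both messages share the `conversation_id` `112_113`. The column is redundant, since it can be derived from `from_id` and `to_id`, but it makes the later queries much simpler.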
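As a sketch of how such an id could be derived from the two user ids: the rule below (smaller id first, joined by an underscore) is an assumption inferred from the `112_113` example, and `ConversationIdSketch` is a hypothetical helper rather than project code.

```java
// Derives a direction-independent conversation id from two user ids.
// ConversationIdSketch is a hypothetical helper; the smaller-id-first rule
// is inferred from the 112_113 example and is an assumption.
final class ConversationIdSketch {

    static String conversationId(int fromId, int toId) {
        int low = Math.min(fromId, toId);
        int high = Math.max(fromId, toId);
        return low + "_" + high;
    }

    public static void main(String[] args) {
        System.out.println(conversationId(112, 113)); // 112_113
        System.out.println(conversationId(113, 112)); // 112_113
    }
}
```

Because both directions map to the same string, all messages exchanged between two users can be selected with a single equality condition on `conversation_id`.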

|

||||

|

||||

Note: `from_id = 1` marks a system notification; that feature is developed later.
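Putting these rules together, the conversation list keeps one message per `conversation_id` (the one with the largest id) and skips system notifications. The in-memory sketch below mirrors that idea for illustration only: `ConversationListSketch` and `Msg` are hypothetical, and treating status 2 as "excluded" simply copies the `status != 2` filter used by the mapper queries in this section without assuming what the value means.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory illustration of "latest message per conversation".
// ConversationListSketch and Msg are hypothetical, not project classes.
final class ConversationListSketch {

    static final class Msg {
        final int id;
        final int fromId;
        final int toId;
        final String conversationId;
        final int status;

        Msg(int id, int fromId, int toId, String conversationId, int status) {
            this.id = id;
            this.fromId = fromId;
            this.toId = toId;
            this.conversationId = conversationId;
            this.status = status;
        }
    }

    // Returns the newest message of each conversation the user takes part in,
    // newest conversation first.
    static List<Msg> latestPerConversation(List<Msg> all, int userId) {
        Map<String, Msg> latest = new HashMap<>();
        for (Msg m : all) {
            if (m.status == 2) continue;   // same filter as status != 2
            if (m.fromId == 1) continue;   // skip system notifications
            if (m.fromId != userId && m.toId != userId) continue;
            Msg seen = latest.get(m.conversationId);
            if (seen == null || m.id > seen.id) {
                latest.put(m.conversationId, m); // keep the largest id
            }
        }
        List<Msg> result = new ArrayList<>(latest.values());
        result.sort(Comparator.comparingInt((Msg m) -> m.id).reversed());
        return result;
    }
}
```

In the database the same result comes from grouping by `conversation_id` and taking `max(id)`; pagination is then applied to the sorted list.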

|

||||

|

||||

## DAO

|

||||

|

||||

```java

|

||||

@Mapper

|

||||

public interface MessageMapper {

|

||||

|

||||

/**

|

||||

* Queries the current user's conversation list, returning only the latest message of each conversation

|

||||

* @param userId user id

|

||||

* @param offset start index of the page

|

||||

* @param limit number of rows per page

|

||||

* @return

|

||||

*/

|

||||

List<Message> selectConversations(int userId, int offset, int limit);

|

||||

|

||||

/**

|

||||

* Counts the current user's conversations

|

||||

* @param userId

|

||||

* @return

|

||||

*/

|

||||

int selectConversationCount(int userId);

|

||||

|

||||

/**

|

||||

* Queries the messages in a conversation

|

||||

* @param conversationId

|

||||

* @param offset

|

||||

* @param limit

|

||||

* @return

|

||||

*/

|

||||

List<Message> selectLetters(String conversationId, int offset, int limit);

|

||||

|

||||

/**

|

||||

* Counts the messages in a conversation

|

||||

* @param conversationId

|

||||

* @return

|

||||

*/

|

||||

int selectLetterCount(String conversationId);

|

||||

|

||||

/**

|

||||

* Counts unread messages

|

||||

* @param userId

|

||||

* @param conversationId if null, counts unread messages across all of the user's conversations;

|

||||

* otherwise counts unread messages in that conversation only

|

||||

* @return

|

||||

*/

|

||||

int selectLetterUnreadCount(int userId, String conversationId);

|

||||

|

||||

/**

|

||||

* Updates the status of messages

|

||||

* @param ids

|

||||

* @param status

|

||||

* @return

|

||||

*/

|

||||

int updateStatus(List<Integer> ids, int status);

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

The corresponding `mapper.xml`:

|

||||

|

||||

```xml

|

||||

<mapper namespace="com.greate.community.dao.MessageMapper">

|

||||

|

||||

<sql id="selectFields">

|

||||

id, from_id, to_id, conversation_id, content, status, create_time

|

||||

</sql>

|

||||

|

||||

<!-- Query the current user's conversation list (only the latest message of each conversation) -->

|

||||

<select id="selectConversations" resultType="Message">

|

||||

select <include refid="selectFields"></include>

|

||||

from message

|

||||

where id in (

|

||||

select max(id)

|

||||

from message

|

||||

where status != 2

|

||||

and from_id != 1

|

||||

and (from_id = #{userId} or to_id = #{userId})

|

||||

group by conversation_id

|

||||

)

|

||||

order by id desc

|

||||

limit #{offset}, #{limit}

|

||||

</select>

|

||||

|

||||

<!-- Count the current user's conversations -->

|

||||

<select id="selectConversationCount" resultType="int">

|

||||

select count(m.maxid) from (

|

||||

select max(id) as maxid

|

||||

from message

|

||||

where status != 2

|

||||

and from_id != 1

|

||||

and (from_id = #{userId} or to_id = #{userId})

|

||||

group by conversation_id

|

||||

) as m

|

||||

</select>

|

||||

|

||||

<!-- Query the messages in a conversation -->

|

||||

<select id="selectLetters" resultType="Message">

|

||||

select <include refid="selectFields"></include>

|

||||

from message

|

||||

where status != 2

|

||||

and from_id != 1

|

||||

and conversation_id = #{conversationId}

|

||||

order by id desc

|

||||

limit #{offset}, #{limit}

|

||||

</select>

|

||||

|

||||